Visualizations and more analysis with PmagPy

This notebook demonstrates PmagPy functions that can be used to visualize data as well as those that conduct statistical tests that have associated visualizations.

Guide to PmagPy

This notebook is one of a series of notebooks that demonstrate the functionality of PmagPy. The other notebooks are:

PmagPy_introduction.ipynb: This notebook introduces PmagPy and lists the functions that are demonstrated in the other notebooks.

PmagPy_calculations.ipynb: This notebook demonstrates many of the PmagPy calculation functions, such as those that rotate directions, return statistical parameters, and simulate data from specified distributions.

PmagPy_MagIC.ipynb: This notebook demonstrates how PmagPy can be used to read and write data to and from the MagIC database format, including conversion from many individual lab measurement file formats.

Customizing this notebook

If you are running this notebook on JupyterHub and want to make changes, you should make a copy (see File menu). Otherwise, each time you update PmagPy, your changes will be overwritten.

Get started

To use the functions in this notebook, we have to import the PmagPy modules pmagplotlib, pmag and ipmag and some other handy functions for use in the notebook. This is done in the following code block which must be executed before running any other code block. To execute, click on the code block and then click on the “Run” button in the menu.

In order to access the example data, this notebook is meant to be run in the PmagPy-data directory (PmagPy directory for developers).

Try it! Run the code block below (click on the cell and then click ‘Run’):

```python
import pmagpy.pmag as pmag
import pmagpy.pmagplotlib as pmagplotlib
import pmagpy.ipmag as ipmag
import pmagpy.contribution_builder as cb
from pmagpy import convert_2_magic as convert
import matplotlib.pyplot as plt  # our plotting buddy
import matplotlib
import numpy as np  # the fabulous NumPy package
import pandas as pd  # and of course Pandas

# test if cartopy is installed
has_cartopy, Cartopy = pmag.import_cartopy()

# test if Basemap is installed (used by the map examples when cartopy is missing)
try:
    from mpl_toolkits.basemap import Basemap
    has_basemap = True
except ImportError:
    has_basemap = False

# test if xlwt is installed (allows you to export to excel)
try:
    import xlwt
    has_xlwt = True
except ImportError:
    has_xlwt = False

# This allows you to make matplotlib plots inside the notebook.
%matplotlib inline
from IPython.display import Image
import os
print('All modules imported!')
```

All modules imported!

Functions demonstrated within PmagPy_plots_analysis.ipynb:

Functions in PmagPy_plots_tests.ipynb

ani_depthplot : plots anisotropy data against depth in stratigraphic section (Xmas tree plots)

aniso_magic : makes plots of anisotropy data and bootstrapped confidences

biplot_magic : plots different columns against each other in MagIC formatted data files

chi_magic : plots magnetic susceptibility data in MagIC format as function of field, frequency or temperature

common_mean : graphical approach to testing two sets of directions for common mean using bootstrap

cont_rot : makes plots of continents after rotation to specified coordinate system

core_depthplot : plots MagIC formatted data against depth

curie : makes plots of Curie Temperature data and provides estimates for Tc

dayplot_magic : makes Day et al. (1977) and other plots with hysteresis statistics

dmag_magic : plots remanence against demagnetization step for MagIC formatted files

eqarea and eqarea_magic : makes equal area projections for directions

eqarea_ell : makes equal area projections for directions with specified confidence ellipses

find_ei : finds the inclination unflattening factor that unsquishes directions to match TK03 distribution

fishqq: makes a Quantile-Quantile plot for directions against uniform and exponential distributions

foldtest & foldtest_magic : finds tilt correction that maximizes concentration of directions, with bootstrap confidence bounds.

forc_diagram: plots FORC diagrams for both conventional and irregular FORCs

histplot : makes histograms

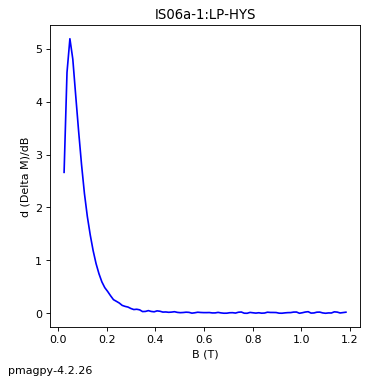

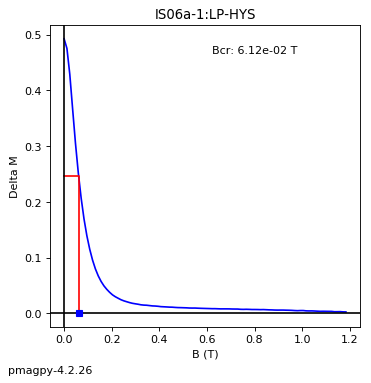

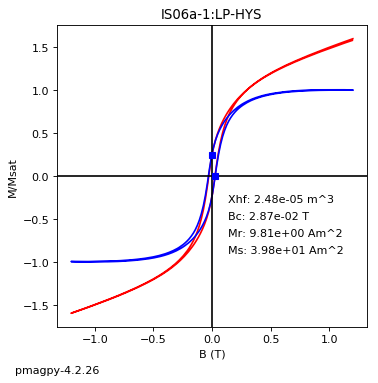

hysteresis_magic : makes plots of hysteresis data (not FORCs).

irm_unmix : analyzes IRM acquisition data in terms of coercivity distributions

irmaq_magic : plots IRM acquisition data

lnp_magic : plots lines and planes for site level data and calculates best fit mean and alpha_95

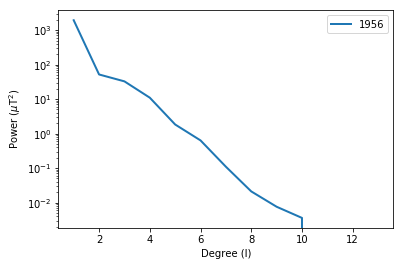

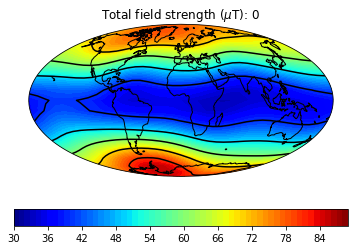

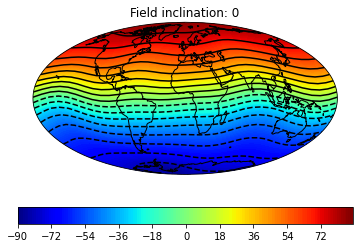

lowes : makes a plot of the Lowes spectrum for a geomagnetic field model

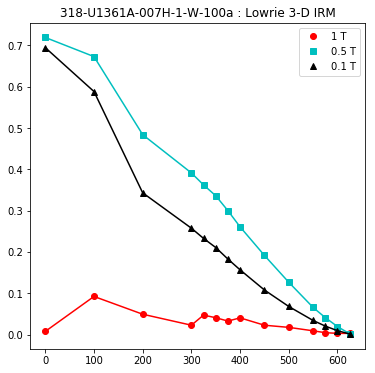

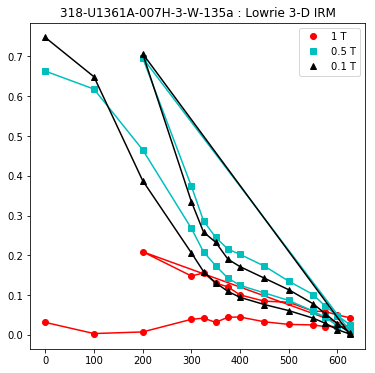

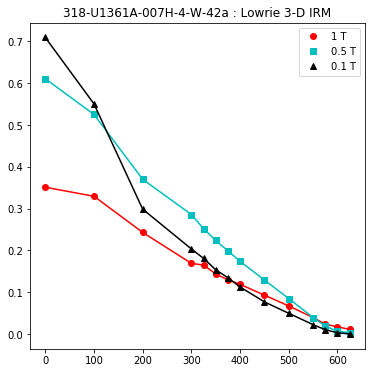

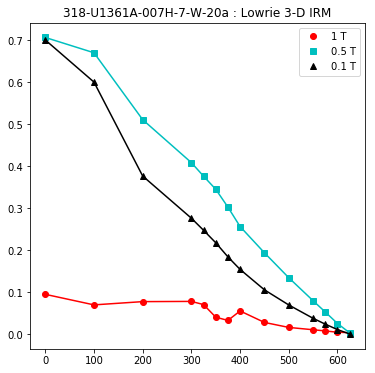

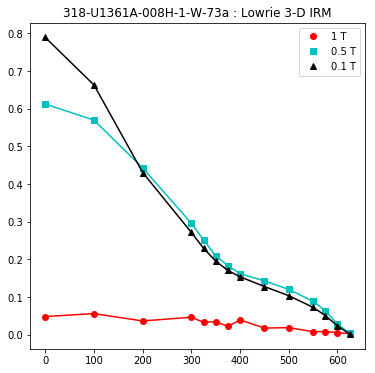

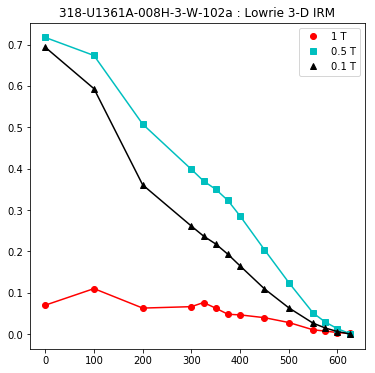

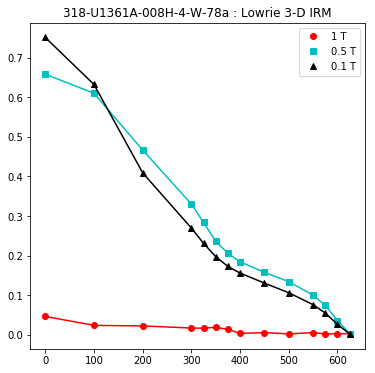

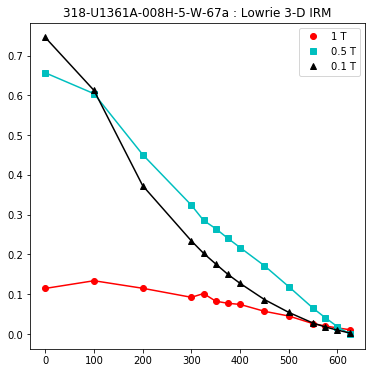

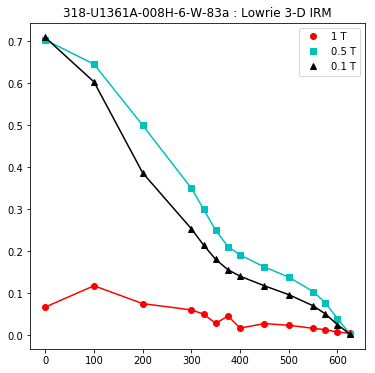

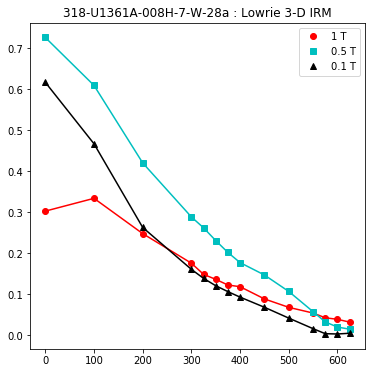

lowrie and lowrie_magic : makes plots of Lowrie’s (1990) 3D-IRM demagnetization experiments

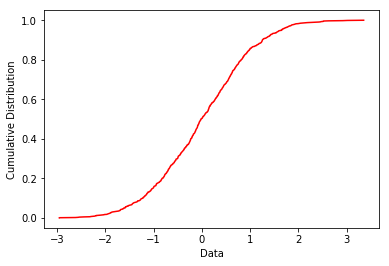

plot_cdf and plot_2cdfs : makes a cumulative distribution plot of data

plot_di_mean : makes equal area plots of directions and their \(\alpha_{95}\)s

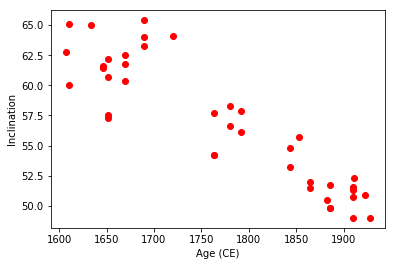

plot_geomagia : makes plots from files downloaded from the geomagia website

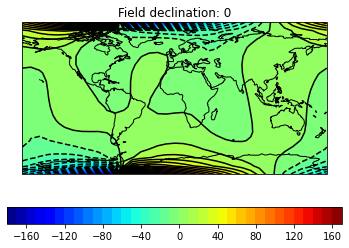

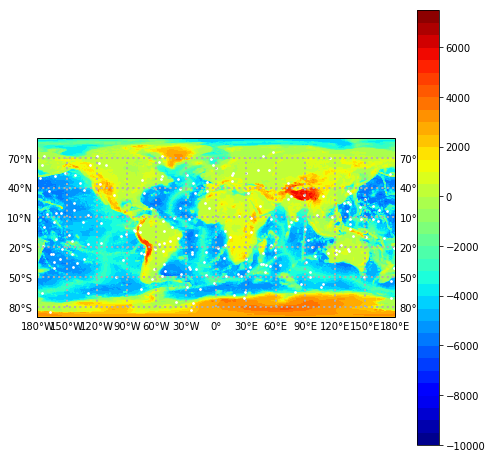

plot_mag_map : makes a color contour plot of geomagnetic field models

plot_magic_keys : plots data from MagIC formatted data files

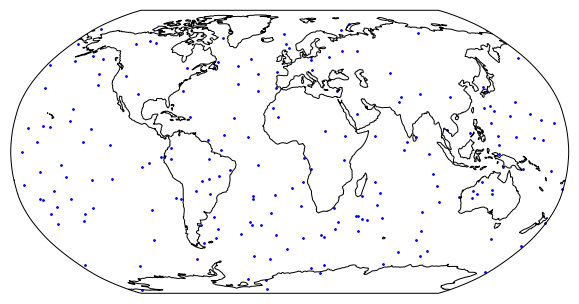

plot_map_pts : plots points on maps

plot_ts : makes a plot of the desired Geomagnetic Reversal time scale

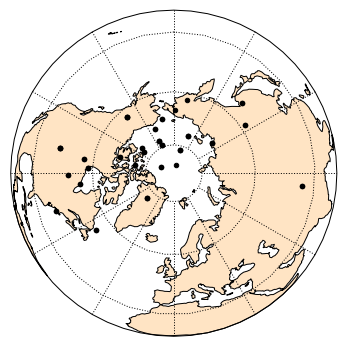

polemap_magic : reads in MagIC formatted file with paleomagnetic poles and plots them

qqplot : makes a Quantile-Quantile plot for data against a normal distribution

qqunf : makes a Quantile-Quantile plot for data against a uniform distribution

quick_hyst : makes hysteresis plots

revtest & revtest_magic : performs a bootstrap reversals test

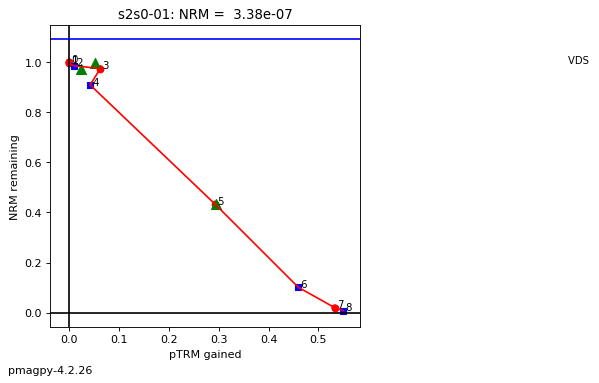

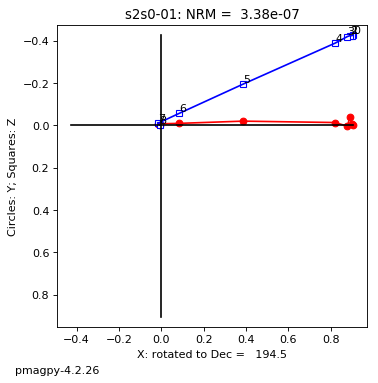

thellier_magic : makes plots of Thellier-Thellier data.

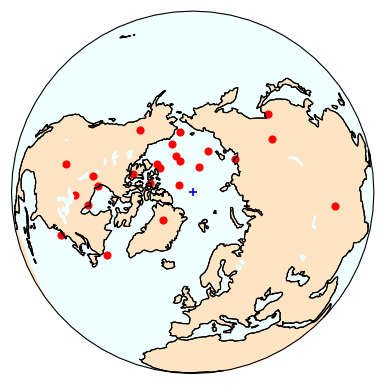

vgpmap_magic : reads in MagIC formatted file with virtual geomagnetic poles and plots them

watsons_v : makes a graph for Watson’s V test for common mean

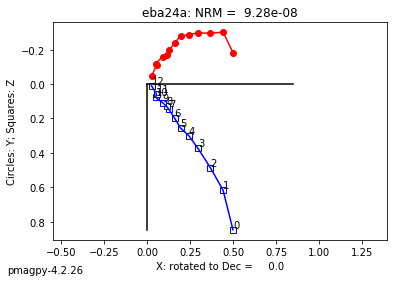

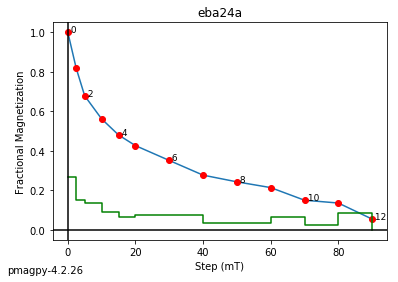

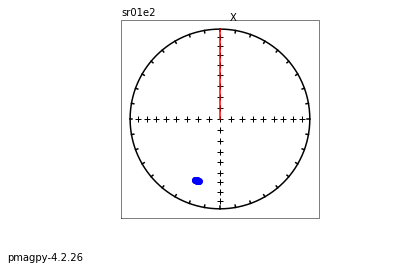

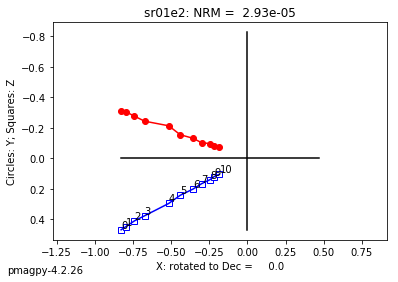

zeq and zeq_magic : makes quick Zijderveld plots for measurement data

Maps:

cont_rot : makes plots of continents after rotation to specified coordinate system

plot_mag_map : makes a color contour plot of geomagnetic field models

plot_map_pts : plots points on maps

polemap_magic : reads in MagIC formatted file with paleomagnetic poles and plots them

vgpmap_magic : reads in MagIC formatted file with virtual geomagnetic poles and plots them

Figures

The plotting functions make plots to the screen (using the %matplotlib inline magic command), but all matplotlib plots can be saved with the command:

plt.savefig('PATH_TO_FILE_NAME.FMT')

and then viewed in the notebook with:

Image('PATH_TO_FILE_NAME.FMT')

ani_depthplot

[Essentials Chapter 13] [MagIC Database] [command line version]

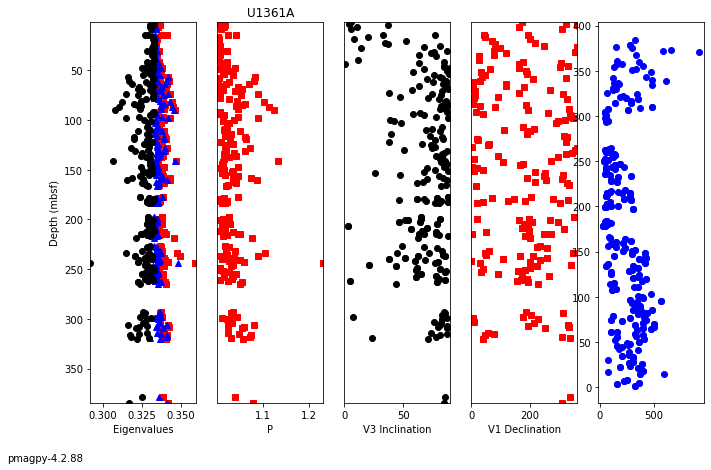

Anisotropy data can be plotted versus depth. The program ani_depthplot uses MagIC formatted data tables. Bulk susceptibility measurements can also be plotted if they are available in a measurements.txt formatted file.

In this example, we will use the data from Tauxe et al. (2015, doi:10.1016/j.epsl.2014.12.034) measured on samples obtained during Expedition 318 of the Integrated Ocean Drilling Program. To get the entire dataset, go to the MagIC database at: https://www2.earthref.org/MagIC/doi/10.1016/j.epsl.2014.12.034. Download the data set and unpack it with ipmag.download_magic (see the PmagPy_MagIC notebook).

We will use the ipmag.ani_depthplot() version of this program.

help(ipmag.ani_depthplot)

Help on function ani_depthplot in module pmagpy.ipmag:

ani_depthplot(spec_file='specimens.txt', samp_file='samples.txt', meas_file='measurements.txt', site_file='sites.txt', age_file='', sum_file='', fmt='svg', dmin=-1, dmax=-1, depth_scale='core_depth', dir_path='.', contribution=None)

returns matplotlib figure with anisotropy data plotted against depth

available depth scales: 'composite_depth', 'core_depth' or 'age' (you must provide an age file to use this option).

You must provide valid specimens and sites files, and either a samples or an ages file.

You may additionally provide measurements and a summary file (csv).

Parameters

----------

spec_file : str, default "specimens.txt"

samp_file : str, default "samples.txt"

meas_file : str, default "measurements.txt"

site_file : str, default "sites.txt"

age_file : str, default ""

sum_file : str, default ""

fmt : str, default "svg"

format for figures, ["svg", "jpg", "pdf", "png"]

dmin : number, default -1

minimum depth to plot (if -1, default to plotting all)

dmax : number, default -1

maximum depth to plot (if -1, default to plotting all)

depth_scale : str, default "core_depth"

scale to plot, ['composite_depth', 'core_depth', 'age'].

if 'age' is selected, you must provide an ages file.

dir_path : str, default "."

directory for input files

contribution : cb.Contribution, default None

if provided, use Contribution object instead of reading in

data from files

Returns

---------

plot : matplotlib plot, or False if no plot could be created

name : figure name, or error message if no plot could be created

And here we go:

ipmag.ani_depthplot(dir_path='data_files/ani_depthplot');

-I- Using online data model

-I- Getting method codes from earthref.org

-I- Importing controlled vocabularies from https://earthref.org

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

/var/folders/hb/m9qm0bdd13q_t59j9424w1n80000gn/T/ipykernel_74170/730097389.py in <module>

----> 1 ipmag.ani_depthplot(dir_path='data_files/ani_depthplot');

~/PmagPy/pmagpy/ipmag.py in ani_depthplot(spec_file, samp_file, meas_file, site_file, age_file, sum_file, fmt, dmin, dmax, depth_scale, dir_path, contribution)

3393 Axs.append(ax6)

3394 ax6.plot(Bulks, BulkDepths, 'bo')

-> 3395 ax6.axis([bmin - 1, 1.1 * bmax, dmax, dmin])

3396 ax6.set_xlabel('Bulk Susc. (uSI)')

3397 ax6.yaxis.set_major_locator(plt.NullLocator())

~/opt/anaconda3/envs/pmagpy_env/lib/python3.9/site-packages/matplotlib/axes/_base.py in axis(self, emit, *args, **kwargs)

1926 if ymin is None and ymax is None

1927 else False)

-> 1928 self.set_xlim(xmin, xmax, emit=emit, auto=xauto)

1929 self.set_ylim(ymin, ymax, emit=emit, auto=yauto)

1930 if kwargs:

~/opt/anaconda3/envs/pmagpy_env/lib/python3.9/site-packages/matplotlib/axes/_base.py in set_xlim(self, left, right, emit, auto, xmin, xmax)

3520

3521 self._process_unit_info([("x", (left, right))], convert=False)

-> 3522 left = self._validate_converted_limits(left, self.convert_xunits)

3523 right = self._validate_converted_limits(right, self.convert_xunits)

3524

~/opt/anaconda3/envs/pmagpy_env/lib/python3.9/site-packages/matplotlib/axes/_base.py in _validate_converted_limits(self, limit, convert)

3437 if (isinstance(converted_limit, Real)

3438 and not np.isfinite(converted_limit)):

-> 3439 raise ValueError("Axis limits cannot be NaN or Inf")

3440 return converted_limit

3441

ValueError: Axis limits cannot be NaN or Inf

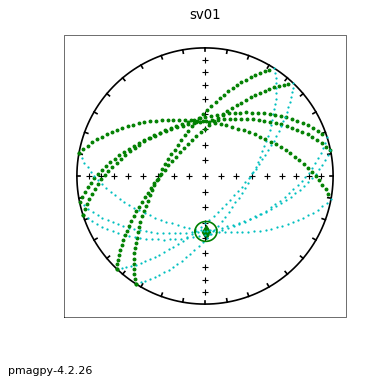

aniso_magic

[Essentials Chapter 13] [MagIC Database] [command line version]

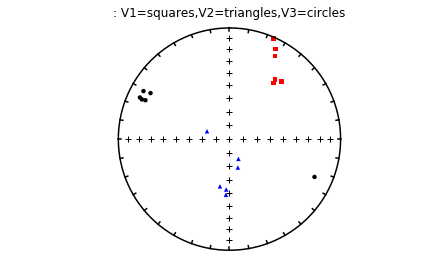

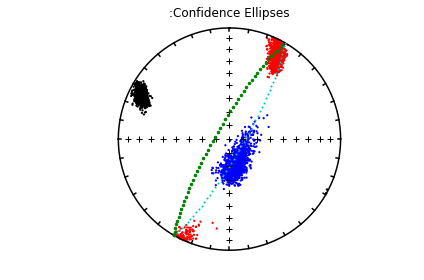

Samples were collected from the eastern margin of a dike oriented with a bedding pole declination of 110° and dip of 2°. The data have been imported into a MagIC (data model 3) formatted file named dike_specimens.txt.

We will make a plot of the data using ipmag.aniso_magic(), using the site parametric bootstrap option, and plot the bootstrapped eigenvectors. We will also draw the trace of the dike on the plot.

help(ipmag.aniso_magic)

Help on function aniso_magic in module pmagpy.ipmag:

aniso_magic(infile='specimens.txt', samp_file='samples.txt', site_file='sites.txt', verbose=True, ipar=False, ihext=True, ivec=False, isite=False, iloc=False, iboot=False, vec=0, Dir=[], PDir=[], crd='s', num_bootstraps=1000, dir_path='.', fignum=1, save_plots=True, interactive=False, fmt='png', contribution=None, image_records=False)

Makes plots of anisotropy eigenvectors, eigenvalues and confidence bounds

All directions are on the lower hemisphere.

Parameters

__________

infile : specimens formatted file with aniso_s data

samp_file : samples formatted file with sample => site relationship

site_file : sites formatted file with site => location relationship

verbose : if True, print messages to output

confidence bounds options:

ipar : if True - perform parametric bootstrap - requires non-blank aniso_s_sigma

ihext : if True - Hext ellipses

ivec : if True - plot bootstrapped eigenvectors instead of ellipses

isite : if True plot by site, requires non-blank samp_file

#iloc : if True plot by location, requires non-blank samp_file, and site_file NOT IMPLEMENTED

iboot : if True - bootstrap ellipses

vec : eigenvector for comparison with Dir

Dir : [Dec,Inc] list for comparison direction

PDir : [Pole_dec, Pole_Inc] for pole to plane for comparison

green dots are on the lower hemisphere, cyan are on the upper hemisphere

crd : ['s','g','t'], coordinate system for plotting whereby:

s : specimen coordinates, aniso_tile_correction = -1, or unspecified

g : geographic coordinates, aniso_tile_correction = 0

t : tilt corrected coordinates, aniso_tile_correction = 100

num_bootstraps : how many bootstraps to do, default 1000

dir_path : directory path

fignum : matplotlib figure number, default 1

save_plots : bool, default True

if True, create and save all requested plots

interactive : bool, default False

interactively plot and display for each specimen

(this is best used on the command line only)

fmt : str, default "svg"

format for figures, [svg, jpg, pdf, png]

contribution : pmagpy contribution_builder.Contribution object, if not provided will be created

in directory (default None). (if provided, infile/samp_file/dir_path may be left blank)

ipmag.aniso_magic(infile='dike_specimens.txt',dir_path='data_files/aniso_magic',

iboot=1,ihext=0,ivec=1,PDir=[120,10],ipar=1, save_plots=False) # compare dike directions with plane of dike with pole of 120,10

desired coordinate system not available, using available: g

(True, [])

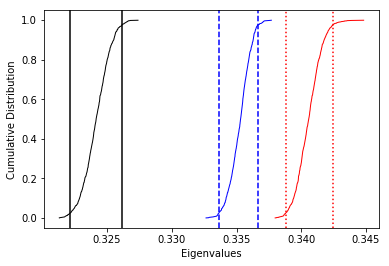

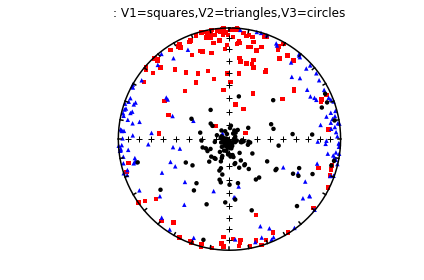

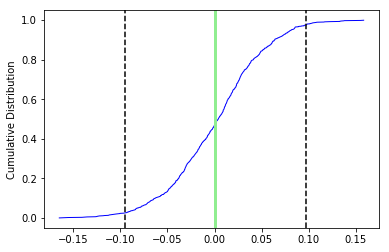

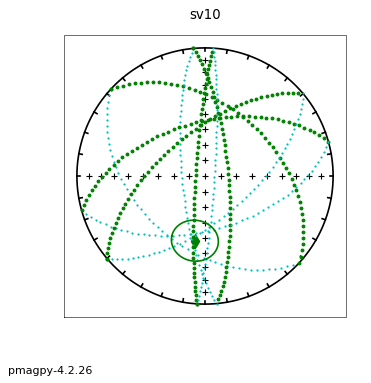

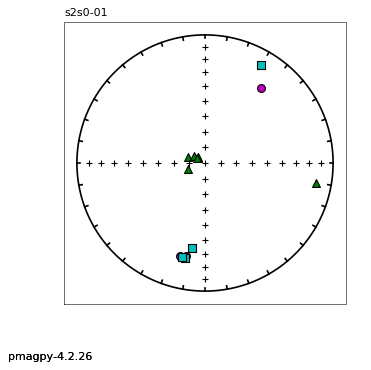

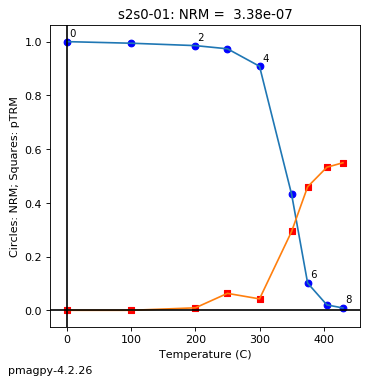

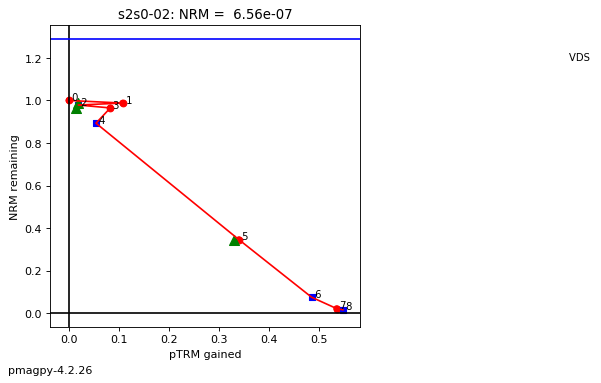

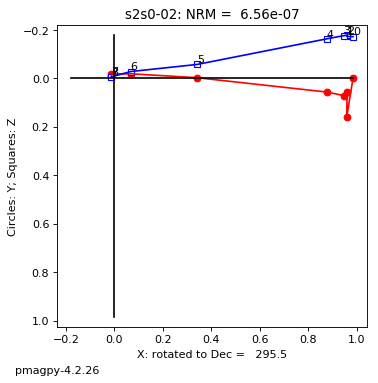

The specimen eigenvectors are plotted in the top diagram with the usual convention that squares are the V\(_1\) directions, triangles are the V\(_2\) directions and circles are the V\(_3\) directions. All directions are plotted on the lower hemisphere. The bootstrapped eigenvectors are shown in the middle diagram. Cumulative distributions of the bootstrapped eigenvalues are shown in the bottom plot with the 95% confidence bounds plotted as vertical lines. It appears that the magma was moving northward and slightly upward along the dike.

There are more options to ipmag.aniso_magic() that come in handy. In particular, one often wishes to test whether a particular fabric is isotropic (the three eigenvalues cannot be distinguished), or whether a particular eigenvector is parallel to some direction. For example, undisturbed sedimentary fabrics are oblate (the maximum and intermediate eigenvalues cannot be distinguished from one another, but are distinct from the minimum) and the eigenvector associated with the minimum eigenvalue is vertical. These criteria can be tested using the distributions of bootstrapped eigenvalues and eigenvectors.
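As a rough illustration of how bootstrapped eigenvalues can separate these cases, the sketch below labels a fabric by checking whether the 95% percentile bounds of the maximum (tau1), intermediate (tau2) and minimum (tau3) eigenvalues overlap. It is a simplified stand-in written for illustration, not the code inside ipmag.aniso_magic(). The function names are hypothetical and the eigenvalue samples are simulated, not taken from sed_specimens.txt.

```python
import numpy as np

def bounds95(samples):
    """Return the 2.5th and 97.5th percentiles of a 1-D sample."""
    lo, hi = np.percentile(samples, [2.5, 97.5])
    return lo, hi

def distinct(a, b):
    """True if the 95% bounds of two bootstrapped eigenvalue samples do not overlap."""
    a_lo, a_hi = bounds95(a)
    b_lo, b_hi = bounds95(b)
    return bool(a_hi < b_lo or b_hi < a_lo)

def classify_fabric(tau1, tau2, tau3):
    """Rough fabric label from bootstrapped max, int and min eigenvalues."""
    d12, d23 = distinct(tau1, tau2), distinct(tau2, tau3)
    if d12 and d23:
        return 'triaxial'
    if d23:
        return 'oblate'      # tau1 ~ tau2 > tau3
    if d12:
        return 'prolate'     # tau1 > tau2 ~ tau3
    if not distinct(tau1, tau3):
        return 'isotropic'   # no pair can be told apart
    return 'indeterminate'

# Hypothetical bootstrapped eigenvalues (simulated for illustration only)
rng = np.random.default_rng(0)
tau1 = rng.normal(0.345, 0.002, 1000)
tau2 = rng.normal(0.344, 0.002, 1000)
tau3 = rng.normal(0.311, 0.002, 1000)
print(classify_fabric(tau1, tau2, tau3))  # oblate
```

Percentile overlap is a deliberately simple criterion. It is meant to show the logic of the test, not to reproduce the exact statistics used by PmagPy.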

The following session illustrates how this is done, using the data in the test file sed_specimens.txt in the aniso_magic directory.

ipmag.aniso_magic(infile='sed_specimens.txt',dir_path='data_files/aniso_magic',

iboot=1,ihext=0,ivec=1,Dir=[0,90],vec=3,ipar=1, save_plots=False) # parametric bootstrap and compare V3 with vertical

desired coordinate system not available, using available: g

(True, [])

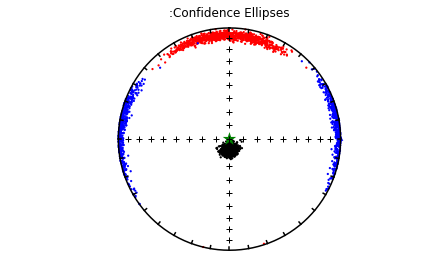

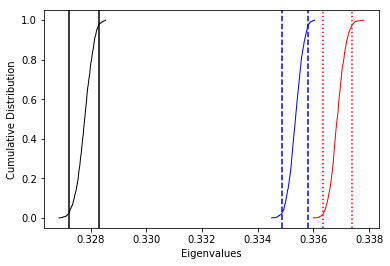

The top three plots are as in the dike example before, showing a clear triaxial fabric (all three eigenvalues and associated eigenvectors are distinct from one another). In the lower three plots we have the distributions of the three components of the chosen axis, V\(_3\), their 95% confidence bounds (dashed lines) and the components of the designated direction (solid line). This direction is also shown in the equal area projection above as a red pentagon. The minimum eigenvector is not vertical in this case.
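The comparison in the lower plots can be sketched in a few lines of NumPy. The sketch assumes you already have an array of bootstrapped unit axes (one per row; x north, y east, z down). Because eigenvectors are axes rather than vectors, each one is first flipped onto the lower hemisphere. The chosen direction then counts as consistent if every Cartesian component falls inside the corresponding 95% bounds. The helper names and the simulated V3 axes are hypothetical; this is not the ipmag implementation.

```python
import numpy as np

def dir2cart(dec, inc):
    """Unit vector (x=north, y=east, z=down) for a declination and inclination in degrees."""
    d, i = np.radians(dec), np.radians(inc)
    return np.array([np.cos(i) * np.cos(d), np.cos(i) * np.sin(d), np.sin(i)])

def to_lower_hemisphere(vectors):
    """Flip axial unit vectors (one per row) so that every z component is >= 0."""
    v = np.atleast_2d(np.asarray(vectors, dtype=float))
    return np.where(v[:, 2:3] < 0, -v, v)

def axis_consistent(boot_vectors, dec, inc):
    """True if each component of (dec, inc) lies inside the 95% bounds of the bootstrapped axes."""
    boot = to_lower_hemisphere(boot_vectors)
    target = to_lower_hemisphere(dir2cart(dec, inc))[0]
    lo, hi = np.percentile(boot, [2.5, 97.5], axis=0)
    return bool(np.all((lo <= target) & (target <= hi)))

# Hypothetical bootstrapped V3 axes scattered around dec=0, inc=80 (simulated)
rng = np.random.default_rng(1)
v3 = dir2cart(0.0, 80.0) + rng.normal(0.0, 0.01, size=(1000, 3))
v3 /= np.linalg.norm(v3, axis=1, keepdims=True)
print(axis_consistent(v3, 0, 90))  # False: vertical lies outside the bounds
print(axis_consistent(v3, 0, 80))  # True
```

Flipping onto the lower hemisphere works well for steep axes like a near-vertical V3. For near-horizontal axes it can split one cluster into two, so a different reference direction for the flip would be needed.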

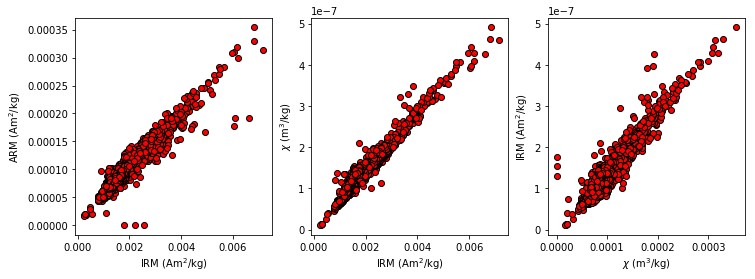

biplot_magic

[Essentials Chapter 8] [MagIC Database] [command line version]

It is often useful to plot measurements from one experiment against another. For example, rock magnetic studies of sediments often plot the IRM against the ARM or magnetic susceptibility. All of these types of measurements can be imported into a single measurements formatted file, with the MagIC method codes and other clues (lab fields, etc.) used to differentiate one measurement from another.

Data were obtained by Tauxe and Hartl (1997, doi: 10.1111/j.1365-246X.1997.tb04082.x) from a Paleogene core from 28\(^{\circ}\) S (DSDP Site 522) and used for a relative paleointensity study. IRM, ARM, magnetic susceptibility and remanence data were uploaded to the MagIC database. The MagIC measurements formatted file for this study (which you can get from https://earthref.org/MagIC/doi/10.1111/j.1365-246X.1997.tb04082.x and unpack with download_magic) is saved in data_files/biplot_magic/measurements.txt.

We can create these plots using Pandas. The key to what the measurements mean is in the MagIC method codes, so we can first get a unique list of all the available method_codes, then plot the ones we are interested in against each other. Let’s read the data file into a Pandas DataFrame and extract the method codes to see what we have:

# read in the data

meas_df=pd.read_csv('data_files/biplot_magic/measurements.txt',sep='\t',header=1)

# get the method_codes and print

print(meas_df.method_codes.unique())

# take a look at the top part of the measurements data frame

meas_df.head()

['LT-AF-Z' 'LT-AF-I' 'LT-IRM' 'LP-X']

| | citations | dir_dec | dir_inc | experiment | magn_mass | meas_temp | measurement | method_codes | quality | specimen | standard | susc_chi_mass | treat_ac_field | treat_dc_field | treat_step_num | treat_temp |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | This study | 268.5 | -41.2 | 15-1-013:LP-AF-DIR | 0.000003 | 300 | 15-1-013:LP-AF-DIR-1 | LT-AF-Z | g | 15-1-013 | u | NaN | 0.015 | 0.00000 | 1.0 | 300 |
| 1 | This study | NaN | NaN | 15-1-013:LP-ARM | 0.000179 | 300 | 15-1-013:LP-ARM-2 | LT-AF-I | g | 15-1-013 | u | NaN | 0.080 | 0.00005 | 2.0 | 300 |
| 2 | This study | NaN | NaN | 15-1-013:LP-IRM | 0.003600 | 300 | 15-1-013:LP-IRM-3 | LT-IRM | g | 15-1-013 | u | NaN | 0.000 | 1.00000 | 3.0 | 300 |
| 3 | This study | NaN | NaN | 15-1-013:LP-X | NaN | 300 | 15-1-013:LP-X-4 | LP-X | NaN | 15-1-013 | NaN | 2.380000e-07 | 0.010 | 0.00000 | 4.0 | 300 |
| 4 | This study | 181.0 | 68.6 | 15-1-022:LP-AF-DIR | 0.000011 | 300 | 15-1-022:LP-AF-DIR-5 | LT-AF-Z | g | 15-1-022 | u | NaN | 0.015 | 0.00000 | 5.0 | 300 |

These are: an AF demag step (LT-AF-Z), an ARM (LT-AF-I), an IRM (LT-IRM) and a susceptibility (LP-X). Now we can fish out data for each method, merge them by specimen, dropping any missing measurements and finally plot one against the other.

# get the IRM data

IRM=meas_df[meas_df.method_codes.str.contains('LT-IRM')]

IRM=IRM[['specimen','magn_mass']] #trim the data frame

IRM.columns=['specimen','IRM'] # rename the column

# do the same for the ARM data

ARM=meas_df[meas_df.method_codes.str.contains('LT-AF-I')]

ARM=ARM[['specimen','magn_mass']]

ARM.columns=['specimen','ARM']

# and the magnetic susceptibility

CHI=meas_df[meas_df.method_codes.str.contains('LP-X')]

CHI=CHI[['specimen','susc_chi_mass']]

CHI.columns=['specimen','CHI']

# merge IRM ARM data by specimen

RMRMs=pd.merge(IRM,ARM,on='specimen')

# add on the susceptibility data

RMRMs=pd.merge(RMRMs,CHI,on='specimen')
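The same specimen-by-specimen table can also be built in one step with pandas pivot_table. The miniature table below is made up for illustration. With the real data you would start from meas_df instead, and the variable names here are hypothetical.

```python
import pandas as pd

# Hypothetical miniature measurements table (made-up values, not the DSDP 522 data)
meas = pd.DataFrame({
    'specimen':      ['s1', 's1', 's1', 's2', 's2', 's3'],
    'method_codes':  ['LT-IRM', 'LT-AF-I', 'LP-X', 'LT-IRM', 'LT-AF-I', 'LT-IRM'],
    'magn_mass':     [3.6e-3, 1.8e-4, None, 2.0e-3, 9.0e-5, 1.0e-3],
    'susc_chi_mass': [None, None, 2.4e-7, None, None, None],
})

wanted = {'LT-IRM': 'IRM', 'LT-AF-I': 'ARM', 'LP-X': 'CHI'}
sub = meas[meas['method_codes'].isin(list(wanted))].copy()
# Susceptibility lives in susc_chi_mass; the remanences live in magn_mass
sub['value'] = sub['magn_mass'].where(sub['method_codes'] != 'LP-X', sub['susc_chi_mass'])

# One row per specimen, one column per measurement type; drop incomplete specimens
wide = (sub.pivot_table(index='specimen', columns='method_codes',
                        values='value', aggfunc='first')
           .rename(columns=wanted)
           .dropna())
print(wide)
```

One design difference: pd.merge pairs every matching row, so a specimen with two IRM records would appear twice. By contrast, pivot_table with aggfunc='first' keeps a single value per specimen and measurement type.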

Now we are ready to make the plots.

fig=plt.figure(1, (12,4)) # make a figure

fig.add_subplot(131) # make the first in a row of three subplots

plt.plot(RMRMs.IRM,RMRMs.ARM,'ro',markeredgecolor='black')

plt.xlabel('IRM (Am$^2$/kg)') # label the X axis

plt.ylabel('ARM (Am$^2$/kg)') # and the Y axis

fig.add_subplot(132)# make the second in a row of three subplots

plt.plot(RMRMs.IRM,RMRMs.CHI,'ro',markeredgecolor='black')

plt.xlabel('IRM (Am$^2$/kg)')

plt.ylabel(r'$\chi$ (m$^3$/kg)')

fig.add_subplot(133)# and the third in a row of three subplots

plt.plot(RMRMs.ARM,RMRMs.CHI,'ro',markeredgecolor='black')

plt.xlabel('ARM (Am$^2$/kg)')

plt.ylabel(r'$\chi$ (m$^3$/kg)');

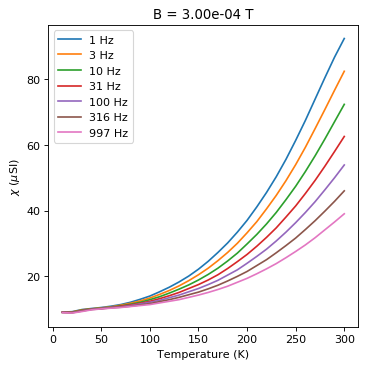

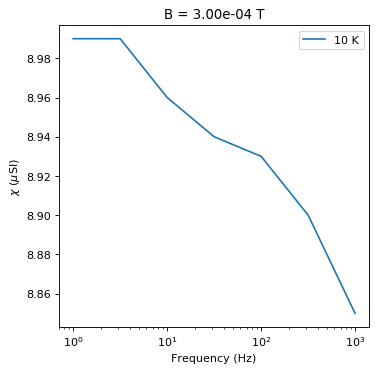

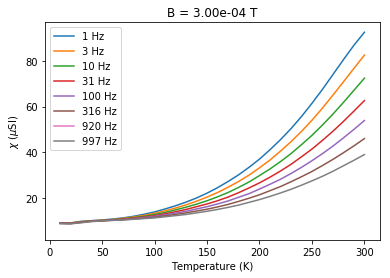

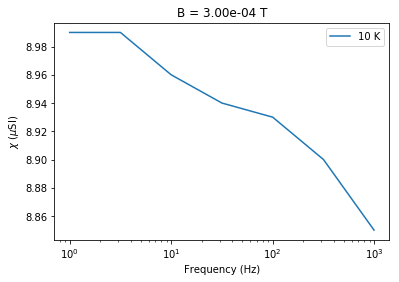

chi_magic

[Essentials Chapter 8] [MagIC Database] [command line version]

It is sometimes useful to measure susceptibility as a function of temperature, applied field and frequency. Here we use a data set that came from the Tiva Canyon Tuff sequence (see Jackson et al., 2006, doi: 10.1029/2006JB004514).

chi_magic reads in a MagIC formatted file and makes various plots. After calling ipmag.chi_magic(), we also make similar plots directly with Pandas.

# with ipmag

ipmag.chi_magic('data_files/chi_magic/measurements.txt', save_plots=False)

Not enough data to plot IRM-Kappa-2352

(True, [])

# read in data from data model 3 example file using pandas

chi_data=pd.read_csv('data_files/chi_magic/measurements.txt',sep='\t',header=1)

print (chi_data.columns)

# get arrays of available temps, frequencies and fields

Ts=np.sort(chi_data.meas_temp.unique())

Fs=np.sort(chi_data.meas_freq.unique())

Bs=np.sort(chi_data.meas_field_ac.unique())

Index(['experiment', 'specimen', 'measurement', 'treat_step_num', 'citations',

'instrument_codes', 'method_codes', 'meas_field_ac', 'meas_freq',

'meas_temp', 'timestamp', 'susc_chi_qdr_volume', 'susc_chi_volume'],

dtype='object')

```python
# plot chi versus temperature at constant field
b = Bs.max()
for f in Fs:
    this_f = chi_data[chi_data.meas_freq == f]
    this_f = this_f[this_f.meas_field_ac == b]
    plt.plot(this_f.meas_temp, 1e6 * this_f.susc_chi_volume, label='%i' % (f) + ' Hz')
plt.legend()
plt.xlabel('Temperature (K)')
plt.ylabel(r'$\chi$ ($\mu$SI)')
plt.title('B = ' + '%7.2e' % (b) + ' T')
```

Text(0.5,1,'B = 3.00e-04 T')

# plot chi versus frequency at constant B

b=Bs.max()

t=Ts.min()

this_t=chi_data[chi_data.meas_temp==t]

this_t=this_t[this_t.meas_field_ac==b]

plt.semilogx(this_t.meas_freq,1e6*this_t.susc_chi_volume,label='%i'%(t)+' K')

plt.legend()

plt.xlabel('Frequency (Hz)')

plt.ylabel(r'$\chi$ ($\mu$SI)')

plt.title('B = '+'%7.2e'%(b)+ ' T')

Text(0.5,1,'B = 3.00e-04 T')

You can see the dependence on temperature, frequency and applied field. These data support the suggestion that there is a strong superparamagnetic component in these specimens.
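One common way to put a number on the frequency dependence is the percentage frequency dependence, chi_fd% = 100 (chi_lf - chi_hf) / chi_lf. This compares the susceptibility at the lowest and highest measurement frequencies. Larger values are usually read as a sign of grains near the superparamagnetic/stable single-domain boundary. The helper below is a hypothetical sketch, not part of PmagPy.

```python
def chi_fd_percent(freqs, chis):
    """Percent frequency dependence, 100 * (chi_lf - chi_hf) / chi_lf,
    using the lowest and highest frequencies present."""
    pairs = sorted(zip(freqs, chis))          # sort by frequency
    if len(pairs) < 2:
        raise ValueError("need susceptibility at two or more frequencies")
    chi_lf, chi_hf = pairs[0][1], pairs[-1][1]
    if chi_lf == 0:
        raise ValueError("low-frequency susceptibility must be non-zero")
    return 100.0 * (chi_lf - chi_hf) / chi_lf

# Hypothetical values (uSI) at three frequencies (Hz), listed out of order on purpose
print(chi_fd_percent([1000, 10, 100], [180.0, 200.0, 190.0]))  # 10.0
```

With the DataFrame from the frequency plot above, a call such as chi_fd_percent(this_t.meas_freq, this_t.susc_chi_volume) would give the value at the lowest temperature and highest field.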

common_mean

[Essentials Chapter 12] [command line version]

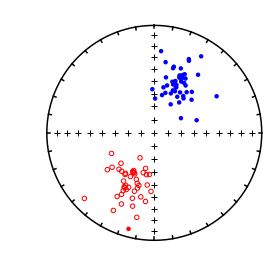

Most paleomagnetists use some form of Fisher statistics to decide whether two directions are statistically distinct (see Essentials Chapter 11 for a discussion of those techniques). But directional data are often not Fisher distributed, and the parametric approach will then give misleading answers. In these cases, one can use a bootstrap approach, described in detail in [Essentials Chapter 12]. The program common_mean performs a bootstrap test to check whether two declination, inclination data sets have a common mean at the 95% level of confidence.
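The bootstrap test can be sketched in NumPy in four steps. First, convert each [dec, inc] pair to a unit vector. Second, draw pseudo-samples with replacement. Third, compute their mean directions. Finally, check whether the 95% bounds of x, y and z overlap for both data sets. This is a simplified illustration with hypothetical function names, not the ipmag.common_mean_bootstrap() code, and it only handles the two-data-set case.

```python
import numpy as np

def dir2cart(di):
    """Convert an (N, 2+) array of [dec, inc, ...] in degrees to (N, 3) unit vectors."""
    d = np.radians(np.asarray(di, dtype=float))
    dec, inc = d[:, 0], d[:, 1]
    return np.column_stack((np.cos(inc) * np.cos(dec),
                            np.cos(inc) * np.sin(dec),
                            np.sin(inc)))

def bootstrap_means(di, n_boot=1000, seed=None):
    """Unit mean vectors of n_boot pseudo-samples drawn with replacement."""
    rng = np.random.default_rng(seed)
    xyz = dir2cart(di)
    idx = rng.integers(0, len(xyz), size=(n_boot, len(xyz)))
    sums = xyz[idx].sum(axis=1)
    return sums / np.linalg.norm(sums, axis=1, keepdims=True)

def share_common_mean(di_a, di_b, n_boot=1000, seed=0):
    """True if the 95% bounds of the bootstrapped x, y and z overlap for both sets."""
    a = bootstrap_means(di_a, n_boot, seed)
    b = bootstrap_means(di_b, n_boot, seed + 1)
    lo_a, hi_a = np.percentile(a, [2.5, 97.5], axis=0)
    lo_b, hi_b = np.percentile(b, [2.5, 97.5], axis=0)
    return bool(np.all((lo_a <= hi_b) & (lo_b <= hi_a)))
```

With the arrays loaded from the example files, share_common_mean(directions_A, directions_B) would return True when all three pairs of bounds overlap. The first two columns are assumed to be declination and inclination.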

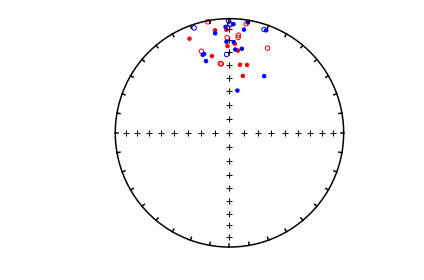

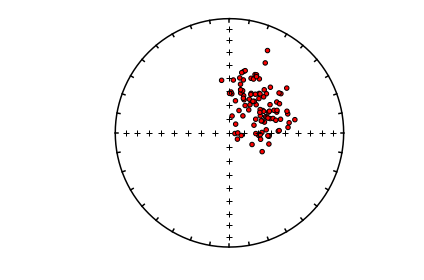

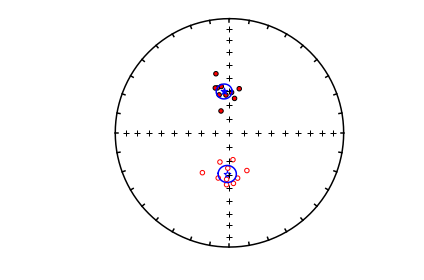

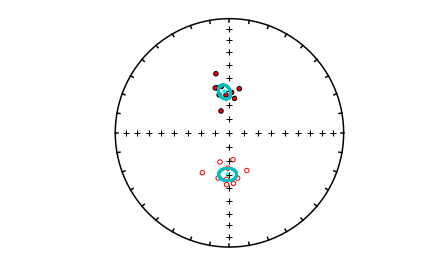

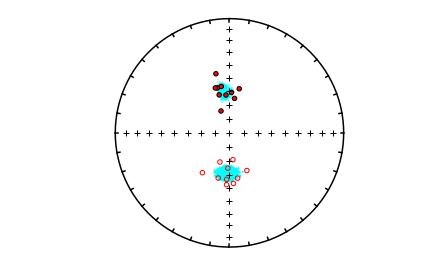

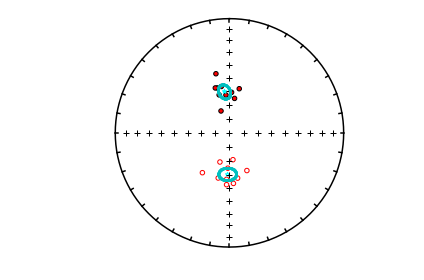

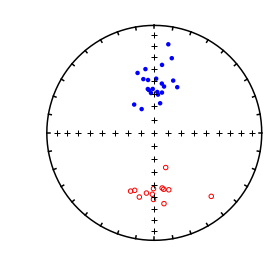

We want to compare the two data sets: common_mean_ex_file1.dat and common_mean_ex_file2.dat. But first, let’s look at the data in equal area projection using the methods outlined in the section on eqarea.

directions_A=np.loadtxt('data_files/common_mean/common_mean_ex_file1.dat')

directions_B=np.loadtxt('data_files/common_mean/common_mean_ex_file2.dat')

ipmag.plot_net(1)

ipmag.plot_di(di_block=directions_A,color='red')

ipmag.plot_di(di_block=directions_B,color='blue')

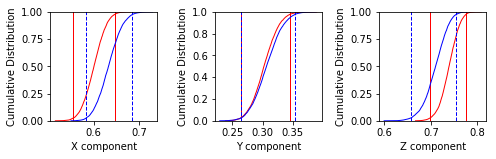

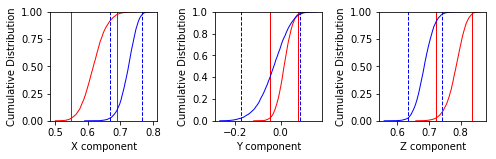

Now let’s look at the common mean problem using ipmag.common_mean_bootstrap().

help(ipmag.common_mean_bootstrap)

Help on function common_mean_bootstrap in module pmagpy.ipmag:

common_mean_bootstrap(Data1, Data2, NumSims=1000, save=False, save_folder='.', fmt='svg', figsize=(7, 2.3), x_tick_bins=4)

Conduct a bootstrap test (Tauxe, 2010) for a common mean on two declination,

inclination data sets. Plots are generated of the cumulative distributions

of the Cartesian coordinates of the means of the pseudo-samples (one for x,

one for y and one for z). If the 95 percent confidence bounds for each

component overlap, the two directions are not significantly different.

Parameters

----------

Data1 : a nested list of directional data [dec,inc] (a di_block)

Data2 : a nested list of directional data [dec,inc] (a di_block)

if Data2 is length of 1, treat as single direction

NumSims : number of bootstrap samples (default is 1000)

save : optional save of plots (default is False)

save_folder : path to directory where plots should be saved

fmt : format of figures to be saved (default is 'svg')

figsize : optionally adjust figure size (default is (7, 2.3))

x_tick_bins : because they occasionally overlap depending on the data, this

argument allows you adjust number of tick marks on the x axis of graphs

(default is 4)

Returns

-------

three plots : cumulative distributions of the X, Y, Z of bootstrapped means

Examples

--------

Develop two populations of directions using ``ipmag.fishrot``. Use the

function to determine if they share a common mean (through visual inspection

of resulting plots).

>>> directions_A = ipmag.fishrot(k=20, n=30, dec=40, inc=60)

>>> directions_B = ipmag.fishrot(k=35, n=25, dec=42, inc=57)

>>> ipmag.common_mean_bootstrap(directions_A, directions_B)

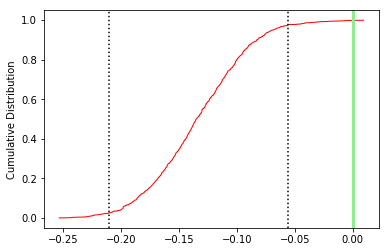

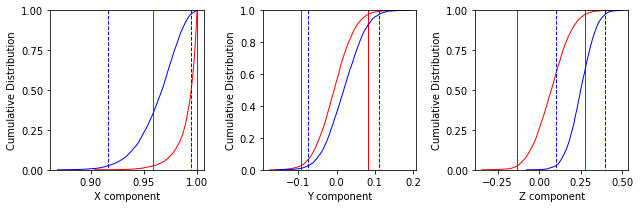

ipmag.common_mean_bootstrap(directions_A,directions_B,figsize=(9,3))

These plots suggest that the two data sets share a common mean.

Now compare the data in common_mean_ex_file1.dat with the expected direction at 5\(^{\circ}\) N, the latitude at which these data were collected (Dec=0, Inc=9.9).
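The expected inclination quoted here follows from the geocentric axial dipole (GAD) relation tan I = 2 tan λ, where λ is the site latitude. A short check, using a hypothetical helper name:

```python
import math

def gad_inclination(lat_deg):
    """Inclination (degrees) expected from a geocentric axial dipole at latitude lat_deg."""
    return math.degrees(math.atan(2.0 * math.tan(math.radians(lat_deg))))

comp_dir = [0.0, round(gad_inclination(5.0), 1)]  # [Dec, Inc] at 5 degrees N
print(comp_dir)  # [0.0, 9.9]
```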

To do this, we set the second data set to be the desired direction for comparison.

comp_dir=[0,9.9]

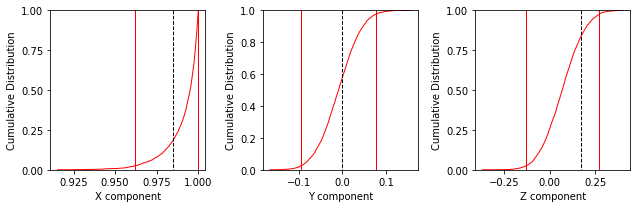

ipmag.common_mean_bootstrap(directions_A,comp_dir,figsize=(9,3))

Apparently the data (cumulative distribution functions) are entirely consistent with the expected direction, whose Cartesian coordinates are shown as dashed lines.

cont_rot

[Essentials Chapter 16] [command line version]

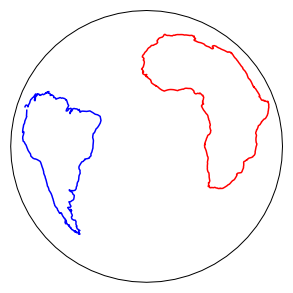

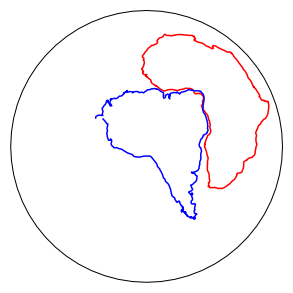

We can make an orthographic projection with latitude = -20\(^{\circ}\) and longitude = 0\(^{\circ}\) at the center of the African and South American continents reconstructed to 180 Ma using the Torsvik et al. (2008, doi: 10.1029/2007RG000227) poles of finite rotation. We would do this by first holding Africa fixed.

We need to read in the outlines of continents from continents.get_continent() and rotate them with pmag.pt_rot(), using the rotation pole and angle for the age and continent in question (from frp.get_pole()). Then we can plot them using pmagplotlib.plot_map(). If the Basemap version is preferred, use pmagplotlib.plot_map_basemap(). Here we demonstrate this from within the notebook by just calling the PmagPy functions.

# load in the continents module

import pmagpy.continents as continents

import pmagpy.frp as frp

help(continents.get_continent)

Help on function get_continent in module pmagpy.continents:

get_continent(continent)

get_continent(continent)

returns the outlines of specified continent.

Parameters:

____________________

continent:

af : Africa

congo : Congo

kala : Kalahari

aus : Australia

balt : Baltica

eur : Eurasia

ind : India

sam : South America

ant : Antarctica

grn : Greenland

lau : Laurentia

nam : North America

gond : Gondawanaland

Returns :

array of [lat/long] points defining continent

help(pmagplotlib.plot_map)

Help on function plot_map in module pmagpy.pmagplotlib:

plot_map(fignum, lats, lons, Opts)

makes a cartopy map with lats/lons

Requires installation of cartopy

Parameters:

_______________

fignum : matplotlib figure number

lats : array or list of latitudes

lons : array or list of longitudes

Opts : dictionary of plotting options:

Opts.keys=

proj : projection [supported cartopy projections:

pc = Plate Carree

aea = Albers Equal Area

aeqd = Azimuthal Equidistant

lcc = Lambert Conformal

lcyl = Lambert Cylindrical

merc = Mercator

mill = Miller Cylindrical

moll = Mollweide [default]

ortho = Orthographic

robin = Robinson

sinu = Sinusoidal

stere = Stereographic

tmerc = Transverse Mercator

utm = UTM [set zone and south keys in Opts]

laea = Lambert Azimuthal Equal Area

geos = Geostationary

npstere = North-Polar Stereographic

spstere = South-Polar Stereographic

latmin : minimum latitude for plot

latmax : maximum latitude for plot

lonmin : minimum longitude for plot

lonmax : maximum longitude

lat_0 : central latitude

lon_0 : central longitude

sym : matplotlib symbol

symsize : symbol size in pts

edge : markeredgecolor

cmap : matplotlib color map

res : resolution [c,l,i,h] for low/crude, intermediate, high

boundinglat : bounding latitude

sym : matplotlib symbol for plotting

symsize : matplotlib symbol size for plotting

names : list of names for lats/lons (if empty, none will be plotted)

pltgrd : if True, put on grid lines

padlat : padding of latitudes

padlon : padding of longitudes

gridspace : grid line spacing

global : global projection [default is True]

oceancolor : 'azure'

landcolor : 'bisque' [choose any of the valid color names for matplotlib

see https://matplotlib.org/examples/color/named_colors.html

details : dictionary with keys:

coasts : if True, plot coastlines

rivers : if True, plot rivers

states : if True, plot states

countries : if True, plot countries

ocean : if True, plot ocean

fancy : if True, plot etopo 20 grid

NB: etopo must be installed

if Opts keys not set :these are the defaults:

Opts={'latmin':-90,'latmax':90,'lonmin':0,'lonmax':360,'lat_0':0,'lon_0':0,'proj':'moll','sym':'ro,'symsize':5,'edge':'black','pltgrid':1,'res':'c','boundinglat':0.,'padlon':0,'padlat':0,'gridspace':30,'details':all False,'edge':None,'cmap':'jet','fancy':0,'zone':'','south':False,'oceancolor':'azure','landcolor':'bisque'}

```python
# retrieve continental outline
# This is the version that uses cartopy and requires installation of cartopy
af = continents.get_continent('af').transpose()
sam = continents.get_continent('sam').transpose()

# define options for pmagplotlib.plot_map
plt.figure(1, (5, 5))
Opts = {'latmin': -90, 'latmax': 90, 'lonmin': 0., 'lonmax': 360., 'lat_0': -20,
        'lon_0': 345, 'proj': 'ortho', 'sym': 'r-', 'symsize': 3,
        'pltgrid': 0, 'res': 'c', 'boundinglat': 0.}

if has_cartopy:
    pmagplotlib.plot_map(1, af[0], af[1], Opts)
    Opts['sym'] = 'b-'
    pmagplotlib.plot_map(1, sam[0], sam[1], Opts)
elif has_basemap:
    pmagplotlib.plot_map_basemap(1, af[0], af[1], Opts)
    Opts['sym'] = 'b-'
    pmagplotlib.plot_map_basemap(1, sam[0], sam[1], Opts)
```

Now for the rotation part. The rotation poles and angles are returned by frp.get_pole():

help(frp.get_pole)

Help on function get_pole in module pmagpy.frp:

get_pole(continent, age)

returns rotation poles and angles for specified continents and ages

assumes fixed Africa.

Parameters

__________

continent :

aus : Australia

eur : Eurasia

mad : Madagascar

[nwaf,congo] : NW Africa [choose one]

col : Colombia

grn : Greenland

nam : North America

par : Paraguay

eant : East Antarctica

ind : India

[neaf,kala] : NE Africa [choose one]

[sac,sam] : South America [choose one]

ib : Iberia

saf : South Africa

Returns

_______

[pole longitude, pole latitude, rotation angle] : for the continent at specified age

# get the rotation pole for South America relative to fixed Africa at 180 Ma

sam_pole=frp.get_pole('sam',180)

# NB: for African rotations, first rotate other continents to fixed Africa, then

# rotate with the South African pole (saf)

The rotation is done by pmag.pt_rot().

help(pmag.pt_rot)

Help on function pt_rot in module pmagpy.pmag:

pt_rot(EP, Lats, Lons)

Rotates points on a globe by an Euler pole rotation using method of

Cox and Hart 1986, box 7-3.

Parameters

----------

EP : Euler pole list [lat,lon,angle]

Lats : list of latitudes of points to be rotated

Lons : list of longitudes of points to be rotated

Returns

_________

RLats : rotated latitudes

RLons : rotated longitudes

So here we go:

plt.figure(1,(5,5))
sam_rot=pmag.pt_rot(sam_pole,sam[0],sam[1]) # rotate South America to its 180 Ma position
# and plot 'em
Opts['sym']='r-'
if has_cartopy:
    pmagplotlib.plot_map(1,af[0],af[1],Opts)
    Opts['sym']='b-'
    pmagplotlib.plot_map(1,sam_rot[0],sam_rot[1],Opts)
elif has_basemap:
    pmagplotlib.plot_map_basemap(1,af[0],af[1],Opts)
    Opts['sym']='b-'
    pmagplotlib.plot_map_basemap(1,sam_rot[0],sam_rot[1],Opts)
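The sketch below shows what a finite rotation about an Euler pole does to a set of points. It uses NumPy and Rodrigues' rotation formula. This is a substitute technique, not the Cox and Hart (1986) box 7-3 procedure that pmag.pt_rot() follows. The helper names unit_vector and euler_rotate are hypothetical. We also assume that a positive angle means a counterclockwise rotation about the pole (right-hand rule), which may not match PmagPy's sign convention.

```python
import numpy as np


def unit_vector(lat, lon):
    """Cartesian unit vector(s) for latitude/longitude given in degrees."""
    lat, lon = np.radians(lat), np.radians(lon)
    return np.array([np.cos(lat) * np.cos(lon),
                     np.cos(lat) * np.sin(lon),
                     np.sin(lat)])


def euler_rotate(pole, lats, lons):
    """Rotate points about an Euler pole [lat, lon, angle] (all in degrees).

    Uses Rodrigues' rotation formula; a positive angle is assumed to be a
    counterclockwise rotation about the pole axis (right-hand rule).
    """
    plat, plon, angle = pole
    k = unit_vector(plat, plon)                               # rotation axis, shape (3,)
    lats = np.atleast_1d(np.asarray(lats, dtype=float))
    lons = np.atleast_1d(np.asarray(lons, dtype=float))
    v = unit_vector(lats, lons)                               # points, shape (3, N)
    theta = np.radians(angle)
    v_rot = (v * np.cos(theta)
             + np.cross(k, v.T).T * np.sin(theta)
             + k[:, None] * (k @ v) * (1 - np.cos(theta)))
    rlats = np.degrees(np.arcsin(np.clip(v_rot[2], -1.0, 1.0)))
    rlons = np.degrees(np.arctan2(v_rot[1], v_rot[0])) % 360.0
    return rlats, rlons


# a point on the equator at 0 E, rotated 90 degrees about the geographic north pole
print(euler_rotate([90, 0, 90], [0], [0]))  # approximately lat 0, lon 90
```

You can sanity-check any rotation code this way: rotate the rotated points by the negative angle about the same pole, and you should get the original points back.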

core_depthplot#

[Essentials Chapter 15] [command line version]

The program core_depthplot can be used to plot various measurement data versus sample depth. The data must be in the MagIC data format. The program will plot whole core data, discrete samples at a bulk demagnetization step, data from vector demagnetization experiments, and so on.

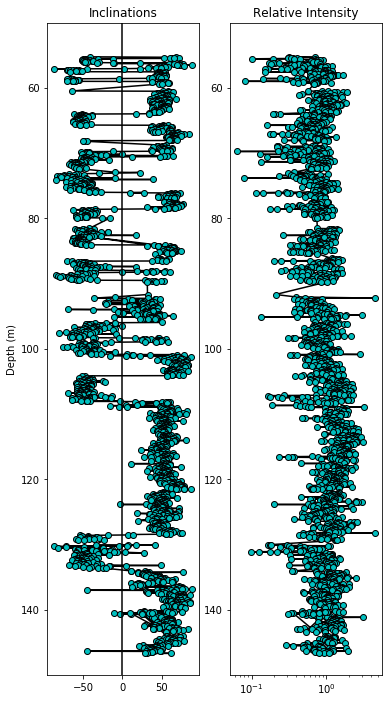

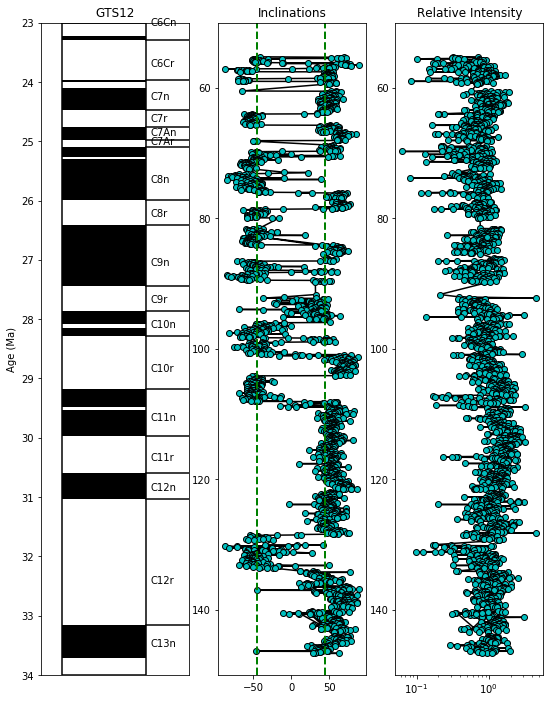

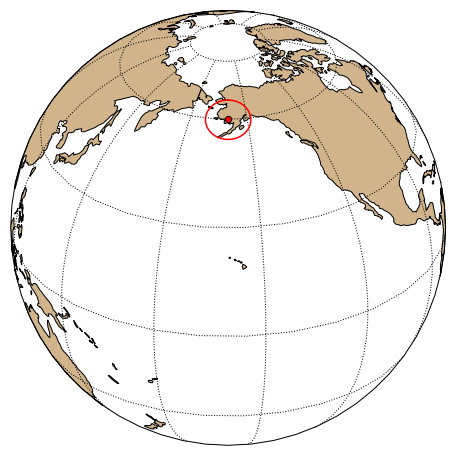

We can try this out on some data from DSDP Hole 522 (drilled at 26°S, 5°W), measured by Tauxe and Hartl (1997, doi: 10.1111/j.1365-246X.1997.tb04082.x). These were downloaded and unpacked in the biplot_magic example. More of the data are in the directory data_files/core_depthplot.

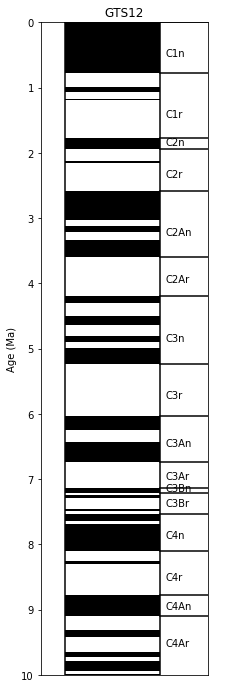

In this example, we will plot the alternating field (AF) data after the 15 mT step. The magnetizations will be plotted on a log scale and, as this is a record of the Oligocene, we will plot the Oligocene time scale using the calibration of Gradstein et al. (2012), commonly referred to as “GTS12”. We are only interested in the data between 50 and 150 meters, and we are not interested in the declinations here.

All this can be done using the wonders of Pandas data frames using the data in the data_files/core_depthplot directory.

Let’s do things this way:

- Read in the data from the sites and specimens files.

- Drop the records with NaN for dir_inc, keeping one of the three lines available for each specimen.

- Make a new column named site in the specimens table that is the same as the specimen column (this makes sense because these are core data, so specimen = sample = site).

- Merge the two DataFrames on the site column.

- Filter the data for depths between 50 and 150 m.

- Plot dir_inc versus core_depth.

- Add the geocentric axial dipole (GAD) field inclination.

- Plot the time scale.

specimens=pd.read_csv('data_files/core_depthplot/specimens.txt',sep='\t',header=1)

sites=pd.read_csv('data_files/core_depthplot/sites.txt',sep='\t',header=1)

specimens=specimens.dropna(subset=['dir_inc']) # kill unwanted lines with duplicate or irrelevant info

specimens['site']=specimens['specimen'] # make a column with site name

data=pd.merge(specimens,sites,on='site') # merge the two data frames on site

data=data[data.core_depth>50] # all levels > 50

data=data[data.core_depth<150] # and < 150

lat=26 # we need this for the GAD INC

Plot versus core_depth

fig=plt.figure(1,(6,12)) # make the figure

ax=fig.add_subplot(121) # make the first of 2 subplots

plt.ylabel('Depth (m)') # label the Y axis

plt.plot(data.dir_inc,data.core_depth,'k-') # draw on a black line through the data

# draw the data points as cyan dots with black edges

plt.plot(data.dir_inc,data.core_depth,'co',markeredgecolor='black')

plt.title('Inclinations') # put on a title

plt.axvline(0,color='black')# make a central line at inc=0

plt.ylim(150,50) # set the plot Y limits to the desired depths

fig.add_subplot(122) # make the second of two subplots

# plot intensity data on semi-log plot

plt.semilogx(data.int_rel/data.int_rel.mean(),data.core_depth,'k-')

plt.semilogx(data.int_rel/data.int_rel.mean(),\

data.core_depth,'co',markeredgecolor='black')

plt.ylim(150,50)

plt.title('Relative Intensity');

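And now with the GTS12 time scale alongside. Note that the inclination and intensity panels below are still plotted against core_depth; placing them on an age axis would require a depth-to-age model, which this example does not apply: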

fig=plt.figure(1,(9,12)) # make the figure

ax=fig.add_subplot(131) # make the first of three subplots

pmagplotlib.plot_ts(ax,23,34,timescale='gts12') # plot on the time scale

fig.add_subplot(132) # make the second of three subplots

plt.plot(data.dir_inc,data.core_depth,'k-')

plt.plot(data.dir_inc,data.core_depth,'co',markeredgecolor='black')


# calculate the geocentric axial dipole field for the site latitude

gad=np.degrees(np.arctan(2.*np.tan(np.radians(lat)))) # tan (I) = 2 tan (lat)

# put it on the plot as a green dashed line

plt.axvline(gad,color='green',linestyle='dashed',linewidth=2)

plt.axvline(-gad,color='green',linestyle='dashed',linewidth=2)

plt.title('Inclinations')

plt.ylim(150,50)

fig.add_subplot(133) # make the third of three plots

# plot the intensity data on semi-log plot

plt.semilogx(data.int_rel/data.int_rel.mean(),data.core_depth,'k-')

plt.semilogx(data.int_rel/data.int_rel.mean(),data.core_depth,'co',markeredgecolor='black')

plt.ylim(150,50)

plt.title('Relative Intensity');

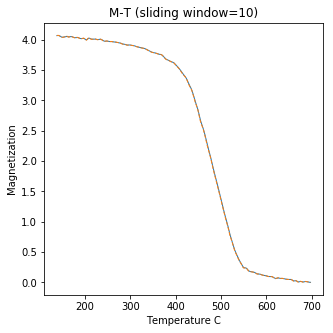

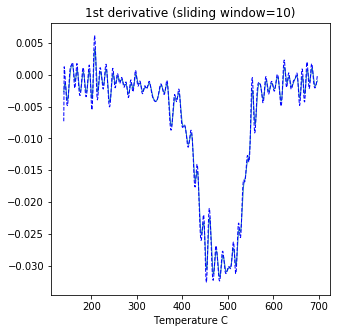

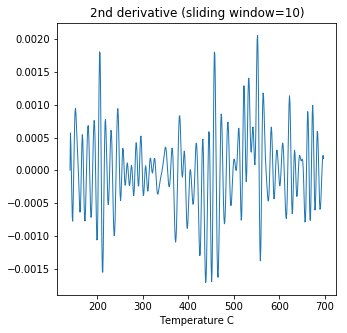
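The GAD lines above come from the dipole relation tan(I) = 2 tan(λ), where λ is the site latitude. The small helper below makes the sign explicit. gad_inclination is a hypothetical name, not a PmagPy function. Hole 522 lies at 26°S, so the normal-polarity GAD inclination is negative. Plotting both +gad and -gad, as the code above does, covers normal and reversed polarity.

```python
import numpy as np


def gad_inclination(lat):
    """Inclination (degrees) of a geocentric axial dipole field at latitude lat (degrees).

    From tan(I) = 2 tan(lat); southern-hemisphere latitudes give negative inclinations.
    """
    return np.degrees(np.arctan(2.0 * np.tan(np.radians(lat))))


for lat in (0, -26, 26, 45):
    print(lat, round(float(gad_inclination(lat)), 1))
```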

curie#

[Essentials Chapter 6] [command line version]

Curie temperature experiments, saved in MagIC formatted files, can be plotted using ipmag.curie().

help(ipmag.curie)

Help on function curie in module pmagpy.ipmag:

curie(path_to_file='.', file_name='', magic=False, window_length=3, save=False, save_folder='.', fmt='svg', t_begin='', t_end='')

Plots and interprets curie temperature data.

***

The 1st derivative is calculated from smoothed M-T curve (convolution

with triangular window with width= <-w> degrees)

***

The 2nd derivative is calculated from smoothed 1st derivative curve

(using the same sliding window width)

***

The estimated curie temp. is the maximum of the 2nd derivative.

Temperature steps should be in multiples of 1.0 degrees.

Parameters

__________

file_name : name of file to be opened

Optional Parameters (defaults are used if not specified)

----------

path_to_file : path to directory that contains file (default is current directory, '.')

window_length : dimension of smoothing window (input to smooth() function)

save : boolean argument to save plots (default is False)

save_folder : relative directory where plots will be saved (default is current directory, '.')

fmt : format of saved figures

t_begin: start of truncated window for search

t_end: end of truncated window for search

magic : True if MagIC formatted measurements.txt file

ipmag.curie(path_to_file='data_files/curie',file_name='curie_example.dat',\

window_length=10)

second derivative maximum is at T=552

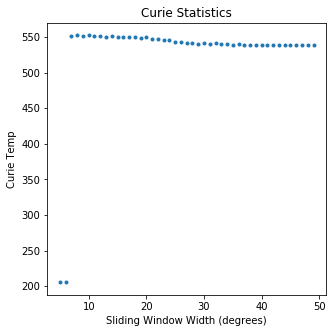

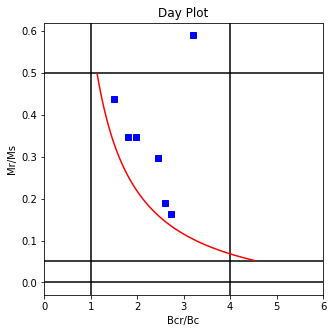

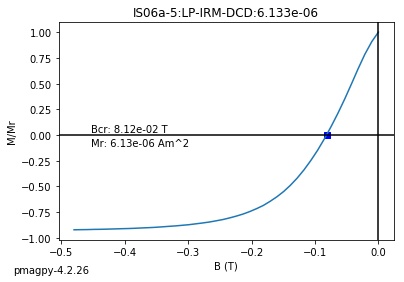

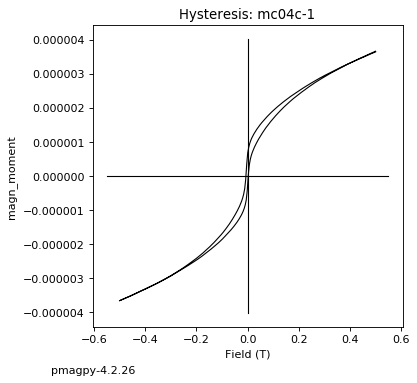

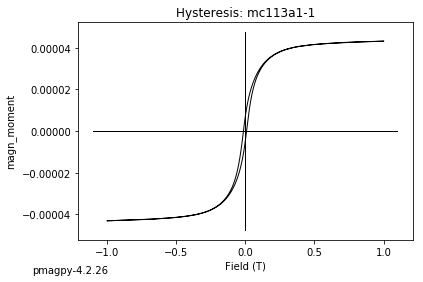

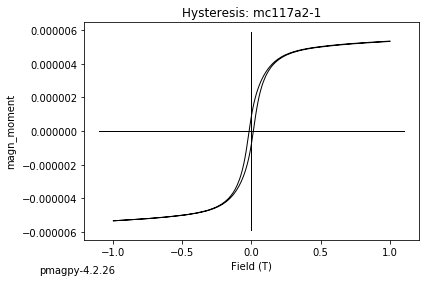

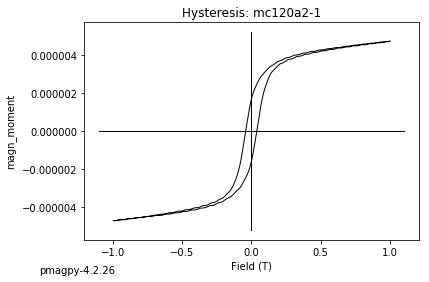

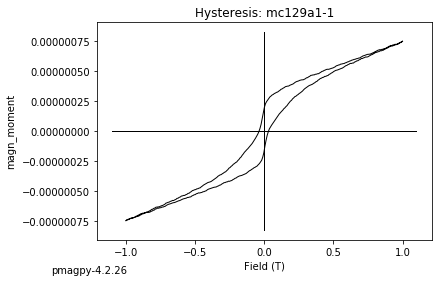

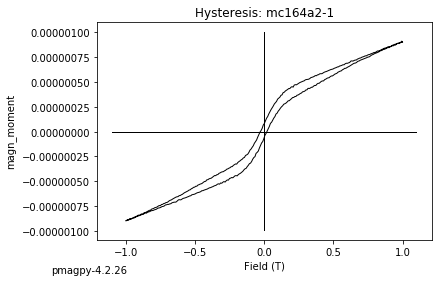
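The docstring above describes the procedure in four steps:

- smooth the M-T curve with a triangular window
- take the first derivative and smooth it
- take the second derivative
- pick the temperature where the second derivative peaks

Below is a minimal NumPy sketch of that recipe on a synthetic curve; it is not ipmag.curie()'s code. We assume evenly spaced 1-degree steps, so half_width is in degrees. The edge padding is our own choice, to keep the window from distorting the ends of the curve.

```python
import numpy as np


def triangular_smooth(y, half_width):
    """Smooth y with a normalized triangular window of 2*half_width + 1 points.

    The ends are padded with the edge values, so the output has the same
    length as y and does not sag toward zero at the boundaries.
    """
    kernel = np.concatenate([np.arange(1, half_width + 2),
                             np.arange(half_width, 0, -1)]).astype(float)
    kernel /= kernel.sum()
    padded = np.pad(np.asarray(y, dtype=float), half_width, mode="edge")
    return np.convolve(padded, kernel, mode="valid")


def estimate_curie(temps, mags, half_width=5):
    """Temperature at the maximum of the second derivative of the smoothed M-T curve."""
    smoothed = triangular_smooth(mags, half_width)
    d1 = triangular_smooth(np.gradient(smoothed, temps), half_width)  # smoothed 1st derivative
    d2 = np.gradient(d1, temps)                                       # 2nd derivative
    return temps[np.argmax(d2)]


# synthetic M-T curve with a smooth magnetization drop centered on 580 C
temps = np.arange(20.0, 701.0, 1.0)
mags = 1.0 / (1.0 + np.exp((temps - 580.0) / 10.0))
print(estimate_curie(temps, mags))
```

For this synthetic drop, the second-derivative maximum falls somewhat above the 580 °C midpoint, where the curve bends most sharply toward zero. That offset is a property of the criterion itself.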

dayplot_magic#

[Essentials Chapter 5] [command line version]

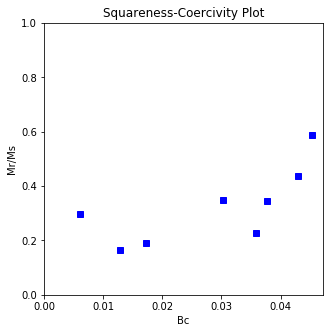

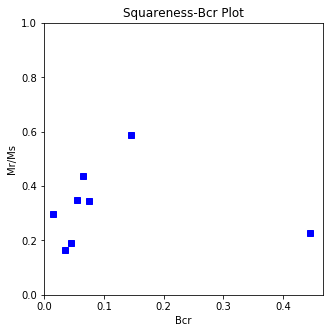

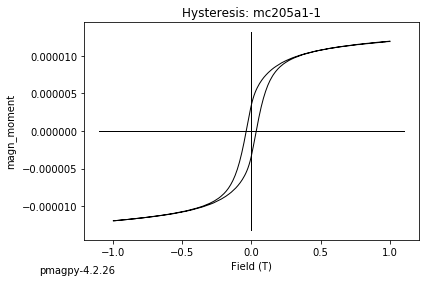

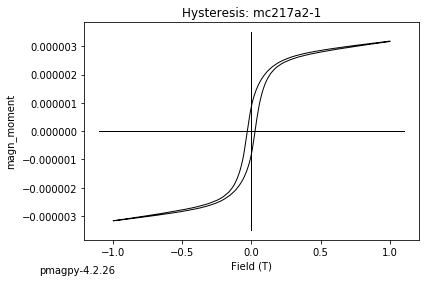

The program dayplot_magic makes Day (Day et al., 1977), or Squareness-Coercivity and Squareness-Coercivity of Remanence plots (e.g., Tauxe et al., 2002) from the MagIC formatted data. To do this, we will call ipmag.dayplot_magic().

help(ipmag.dayplot_magic)

Help on function dayplot_magic in module pmagpy.ipmag:

dayplot_magic(path_to_file='.', hyst_file='specimens.txt', rem_file='', save=True, save_folder='.', fmt='svg', data_model=3, interactive=False, contribution=None, image_records=False)

Makes 'day plots' (Day et al. 1977) and squareness/coercivity plots

(Neel, 1955; plots after Tauxe et al., 2002); plots 'linear mixing'

curve from Dunlop and Carter-Stiglitz (2006).

Optional Parameters (defaults are used if not specified)

----------

path_to_file : path to directory that contains files (default is current directory, '.')

the default input file is 'specimens.txt' (data_model=3);

if data_model = 2, these are the defaults:

hyst_file : hysteresis file (default is 'rmag_hysteresis.txt')

rem_file : remanence file (default is 'rmag_remanence.txt')

save : boolean argument to save plots (default is True)

save_folder : relative directory where plots will be saved (default is current directory, '.')

fmt : format of saved figures (default is 'svg')

image_records : generate and return a record for each image in a list of dicts

which can be ingested by pmag.magic_write

bool, default False

ipmag.dayplot_magic(path_to_file='data_files/dayplot_magic',hyst_file='specimens.txt',save=False)

(True, [])

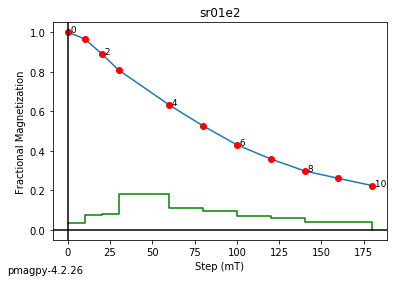

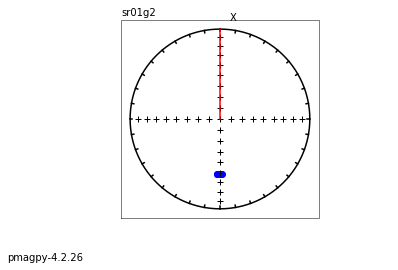

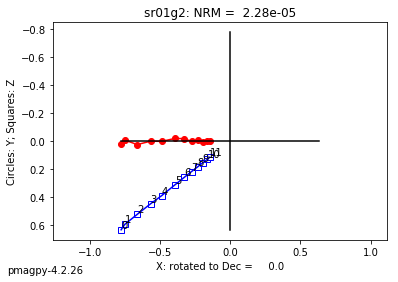

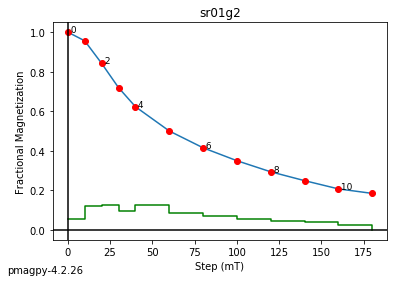
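A Day plot places each specimen by two hysteresis ratios:

- x axis: Bcr/Bc (coercivity of remanence over coercivity)
- y axis: Mr/Ms (saturation remanence over saturation magnetization)

The sketch below computes those ratios with pandas. The column names (hyst_mr_moment, hyst_ms_moment, hyst_bcr, hyst_bc) are our assumption about the MagIC 3 specimens table. The numbers are made up for illustration.

```python
import pandas as pd

# made-up hysteresis parameters for three specimens (moments in Am^2, fields in T)
specimens = pd.DataFrame({
    "specimen": ["s1", "s2", "s3"],
    "hyst_mr_moment": [2.0e-6, 1.0e-6, 0.5e-6],
    "hyst_ms_moment": [1.0e-5, 1.0e-5, 1.0e-5],
    "hyst_bcr": [0.030, 0.045, 0.060],
    "hyst_bc": [0.015, 0.015, 0.012],
})

# Day plot coordinates: x = Bcr/Bc, y = Mr/Ms
specimens["Bcr_Bc"] = specimens["hyst_bcr"] / specimens["hyst_bc"]
specimens["Mr_Ms"] = specimens["hyst_mr_moment"] / specimens["hyst_ms_moment"]
print(specimens[["specimen", "Bcr_Bc", "Mr_Ms"]])
```

Plotting the two new columns with plt.plot(specimens.Bcr_Bc, specimens.Mr_Ms, 'o') gives a bare-bones Day plot, without the reference curves that dayplot_magic adds.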

dmag_magic#

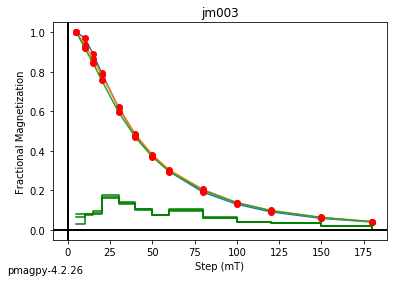

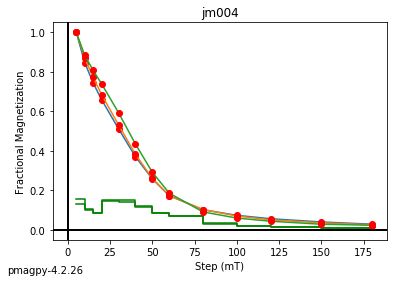

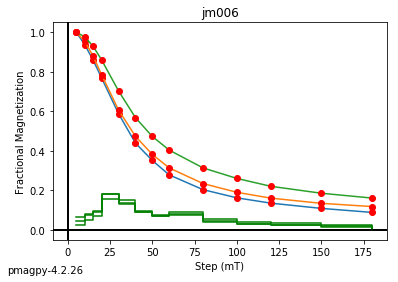

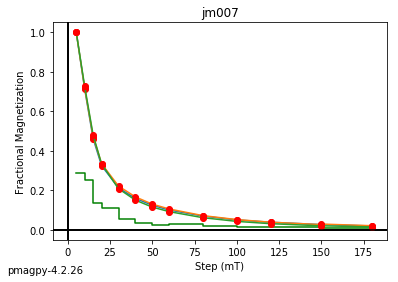

[Essentials Chapter 9] [MagIC Database] [command line version]

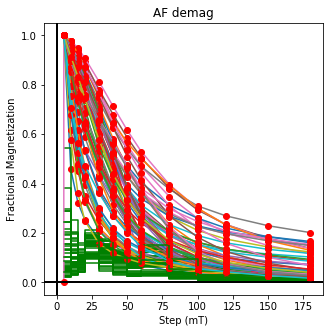

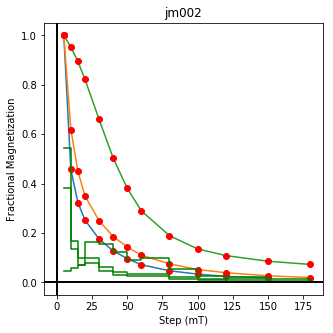

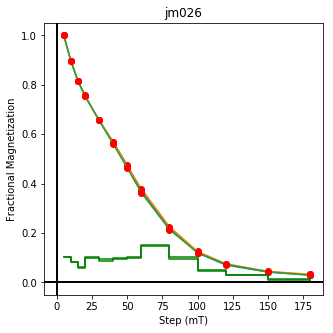

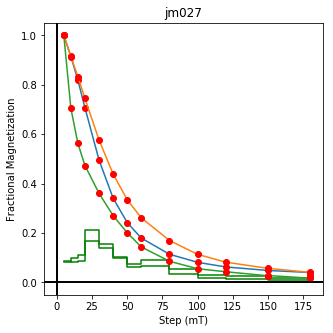

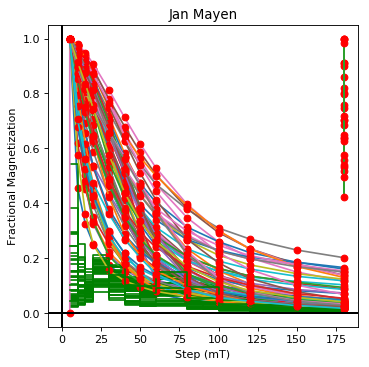

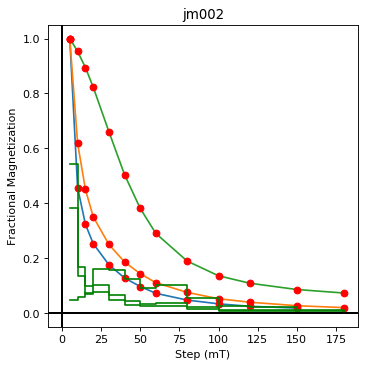

We use dmag_magic to plot out the decay of all alternating field demagnetization experiments in MagIC formatted files. Here we can take a look at some of the data from Cromwell et al. (2013, doi: 10.1002/ggge.20174).

This program calls pmagplotlib.plot_mag() to plot the demagnetization curve for a sample, site, or entire data file interactively. The function ipmag.plot_dmag() does the same job starting from a pandas DataFrame that you prepare yourself, so let’s try that:

help(ipmag.plot_dmag)

Help on function plot_dmag in module pmagpy.ipmag:

plot_dmag(data='', title='', fignum=1, norm=1, dmag_key='treat_ac_field', intensity='', quality=False)

plots demagnetization data versus step for all specimens in pandas dataframe datablock

Parameters

______________

data : Pandas dataframe with MagIC data model 3 columns:

fignum : figure number

specimen : specimen name

dmag_key : one of these: ['treat_temp','treat_ac_field','treat_mw_energy']

selected using method_codes : ['LT-T-Z','LT-AF-Z','LT-M-Z'] respectively

intensity : if blank will choose one of these: ['magn_moment', 'magn_volume', 'magn_mass']

quality : if True use the quality column of the DataFrame

title : title for plot

norm : if True, normalize data to first step

Output :

matplotlib plot

Read in data from a MagIC data model 3 file. Let’s go ahead and read it in with the full data hierarchy.

status,data=cb.add_sites_to_meas_table('data_files/dmag_magic')

data.head()

| measurement name | analysts | citations | description | dir_csd | dir_dec | dir_inc | experiment | magn_moment | meas_n_orient | meas_temp | ... | standard | timestamp | treat_ac_field | treat_dc_field | treat_dc_field_phi | treat_dc_field_theta | treat_temp | sequence | sample | site |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Cromwell | This study | None | 0.3 | 190.4 | 43.5 | jm002a1:LT-NO | 5.76e-05 | 4 | 273 | ... | u | 2009-10-05T22:51:00Z | 0 | 0 | 0 | 90 | 273 | 1 | jm002a | jm002 |
| 1 | Cromwell | This study | None | 0.2 | 193.2 | 44.5 | jm002a2:LT-NO | 6.51e-05 | 4 | 273 | ... | u | 2009-10-05T22:51:00Z | 0 | 0 | 0 | 90 | 273 | 2 | jm002a | jm002 |
| 1 | Cromwell | This study | None | 0.2 | 147.5 | 50.6 | jm002b1:LT-NO | 4.97e-05 | 4 | 273 | ... | u | 2009-10-05T22:48:00Z | 0 | 0 | 0 | 90 | 273 | 3 | jm002b | jm002 |
| 1 | Cromwell | This study | None | 0.2 | 152.5 | 55.8 | jm002b2:LT-NO | 5.23e-05 | 4 | 273 | ... | u | 2009-10-05T23:07:00Z | 0 | 0 | 0 | 90 | 273 | 4 | jm002b | jm002 |
| 1 | Cromwell | This study | None | 0.2 | 186.2 | 55.7 | jm002c1:LT-NO | 5.98e-05 | 4 | 273 | ... | u | 2009-10-05T22:54:00Z | 0 | 0 | 0 | 90 | 273 | 5 | jm002c | jm002 |

5 rows × 25 columns

There are several forms of intensity measurements with different normalizations.

We could hunt through the magn_* columns to see which one is non-blank, or we can use the tool contribution_builder.get_intensity_col(), which returns the first intensity column that contains data.

magn_col=cb.get_intensity_col(data)

print (magn_col)

magn_moment
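The idea behind cb.get_intensity_col() can be sketched in a few lines of pandas. first_intensity_col is a hypothetical helper. We take the candidate order from the plot_dmag docstring above (magn_moment, magn_volume, magn_mass); the real function may check other columns or use a different order.

```python
import numpy as np
import pandas as pd


def first_intensity_col(df, candidates=("magn_moment", "magn_volume", "magn_mass")):
    """Return the first candidate column that exists in df and holds any data.

    Hypothetical stand-in for cb.get_intensity_col(); the candidate order is an assumption.
    """
    for col in candidates:
        if col in df.columns and df[col].notna().any():
            return col
    return ""


meas = pd.DataFrame({"magn_moment": [np.nan, np.nan],
                     "magn_volume": [0.12, 0.10],
                     "magn_mass": [np.nan, 3.0e-3]})
print(first_intensity_col(meas))  # magn_volume
```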

Let’s look at what demagnetization data are available to us:

data.method_codes.unique()

array(['LT-NO', 'LT-AF-Z:LP-DIR-AF', 'LT-AF-Z:DE-VM:LP-DIR-AF',

'LT-NO:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-T-Z:LP-PI-TRM-ZI:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-T-I:LP-PI-TRM-ZI:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-PTRM-MD:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-T-I:LP-PI-TRM-IZ:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-T-Z:LP-PI-TRM-IZ:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-PTRM-I:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-T-I:DE-VM:LP-PI-TRM-IZ:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-T-I:DE-VM:LP-PI-TRM-ZI:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-T-Z:DE-VM:LP-PI-TRM-IZ:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

'LT-AF-Z:LP-AN-ARM', 'LT-AF-I:LP-AN-ARM', 'LT-T-Z:LP-DIR-T',

'LT-T-Z:DE-VM:LP-DIR-T',

'LT-T-Z:DE-VM:LP-PI-TRM-ZI:LP-PI-TRM:LP-PI-ALT-PTRM:LP-PI-BT-MD:LP-PI-BT-IZZI',

None], dtype=object)

Oops: at least one of our records has blank method_codes! So, let’s get rid of it.

data=data.dropna(subset=['method_codes'])

We can make the plots in this way:

- select the AF demagnetization data with method_codes containing ‘LP-DIR-AF’

- make a dataframe with these columns: ‘specimen’, ‘treat_ac_field’, magn_col, and ‘quality’

- call ipmag.plot_dmag() to view the plot:

af_df=data[data.method_codes.str.contains('LP-DIR-AF')] # select the AF demag data

af_df=af_df.dropna(subset=['treat_ac_field'])

df=af_df[['specimen','treat_ac_field',magn_col,'quality']]

df.head()

| measurement name | specimen | treat_ac_field | magn_moment | quality |
|---|---|---|---|---|
| 1 | jm002a1 | 0.005 | 4.55e-05 | g |
| 2 | jm002a1 | 0.01 | 2.08e-05 | g |
| 3 | jm002a1 | 0.015 | 1.47e-05 | g |
| 4 | jm002a1 | 0.02 | 1.15e-05 | g |
| 5 | jm002a1 | 0.03 | 8e-06 | g |

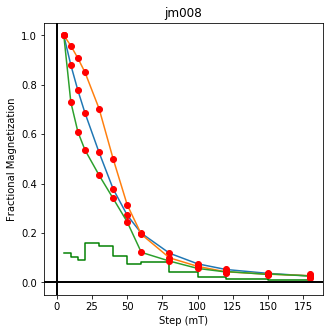

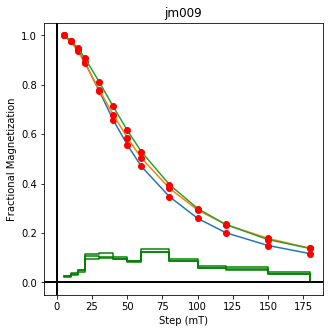

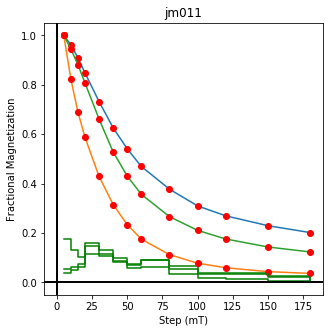

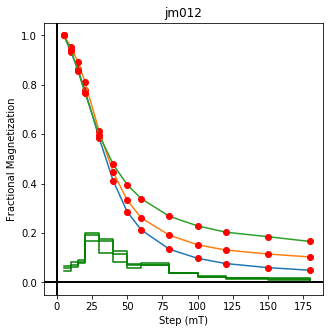

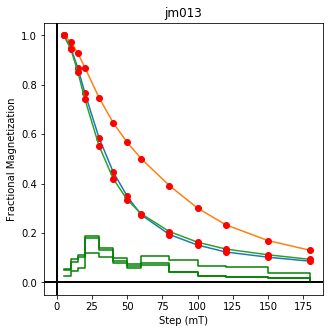

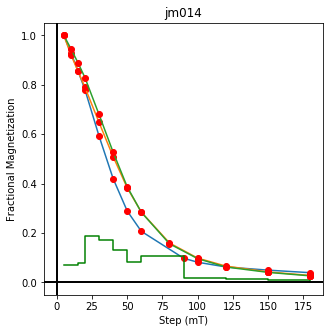

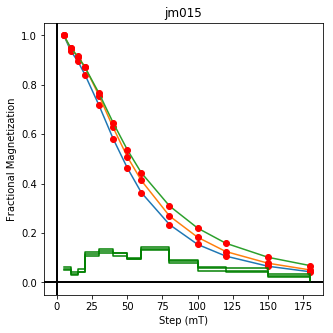

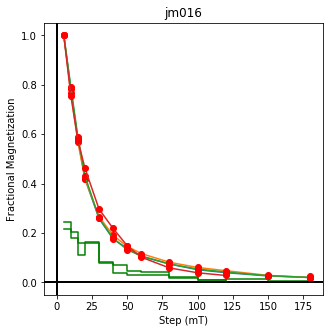

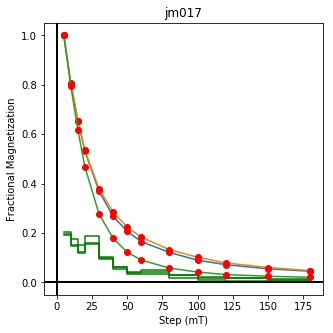

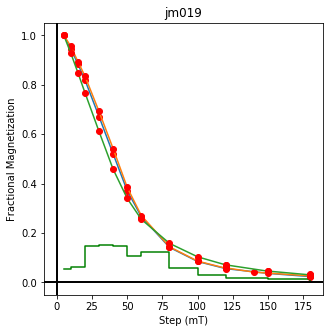

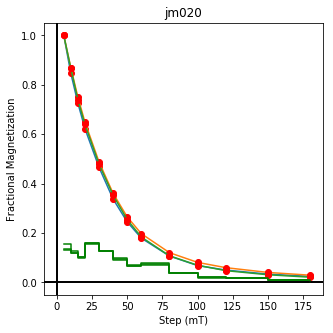

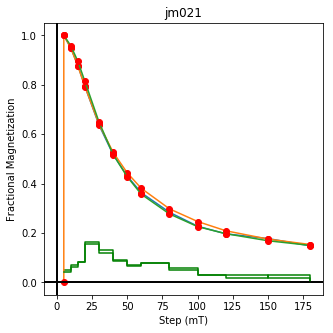

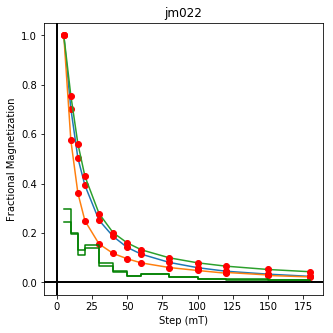

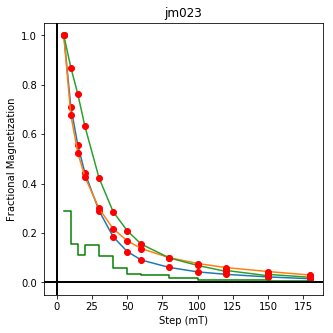

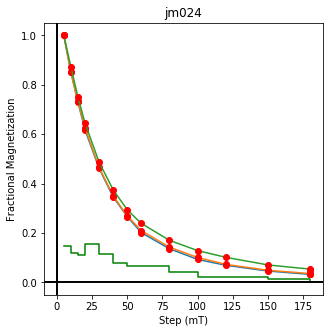

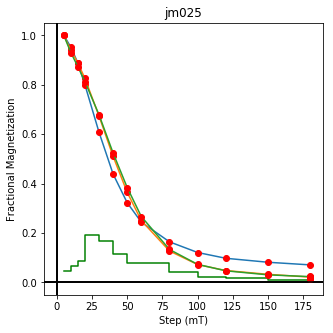

ipmag.plot_dmag(data=df,title="AF demag",fignum=1)
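With norm set (the default is 1), ipmag.plot_dmag() normalizes each specimen to its first step. Below is a minimal pandas sketch of that normalization, assuming that "first step" means the lowest treatment value for each specimen. The jm002a1 values are copied from df.head() above; the demo specimen is made up.

```python
import pandas as pd

# AF steps (T) and moments (Am^2): jm002a1 from df.head() above, "demo" is hypothetical
steps = pd.DataFrame({
    "specimen": ["jm002a1"] * 5 + ["demo"] * 3,
    "treat_ac_field": [0.005, 0.01, 0.015, 0.02, 0.03, 0.0, 0.01, 0.02],
    "magn_moment": [4.55e-05, 2.08e-05, 1.47e-05, 1.15e-05, 8e-06, 2.0e-5, 1.0e-5, 5.0e-6],
})

# sort by step within each specimen, then divide by that specimen's first value
steps = steps.sort_values(["specimen", "treat_ac_field"])
first = steps.groupby("specimen")["magn_moment"].transform("first")
steps["norm_moment"] = steps["magn_moment"] / first
print(steps)
```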

This plotted all the data in the file. We could also plot the data by site by getting a unique list of site names and then walking through them one by one:

sites=af_df.site.unique()
cnt=1
for site in sites:
    site_df=af_df[af_df.site==site] # fish out this site
    # keep only the columns that plot_dmag needs
    site_df=site_df[['specimen','treat_ac_field',magn_col,'quality']]
    ipmag.plot_dmag(data=site_df,title=site,fignum=cnt)
    cnt+=1

We could repeat this for thermal data, if we felt like it, using ‘LT-T-Z’ as the method_codes key and treat_temp as the step (passing dmag_key='treat_temp' to ipmag.plot_dmag()). We could also save the plots using plt.savefig('FIGNAME.FMT'), where FIGNAME could be the site, location, or demagnetization type, as you wish.

# alternatively, using ipmag.dmag_magic

ipmag.dmag_magic(dir_path='data_files/dmag_magic', LT="AF", save_plots=False)

5001 records read from measurements.txt

Jan Mayen plotting by: location

(True, [])

<Figure size 432x288 with 0 Axes>

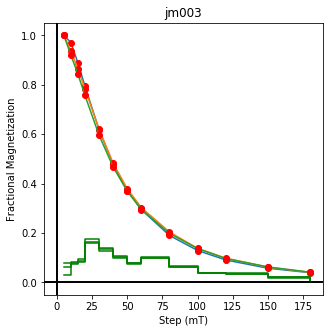

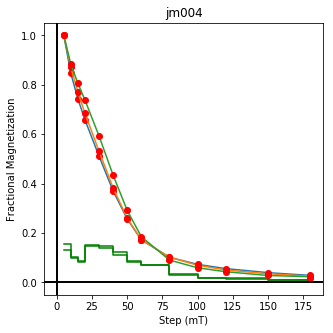

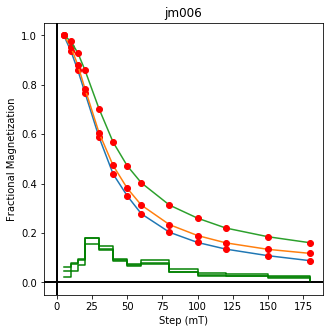

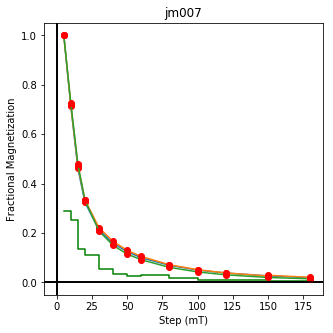

ipmag.dmag_magic(dir_path=".", input_dir_path="data_files/dmag_magic",

LT="AF", plot_by='sit', fmt="png", save_plots=False)

5001 records read from measurements.txt

jm002 plotting by: site

jm003 plotting by: site

jm004 plotting by: site

jm006 plotting by: site

jm007 plotting by: site

(True, [])

<Figure size 432x288 with 0 Axes>

dmag_magic with a downloaded file#

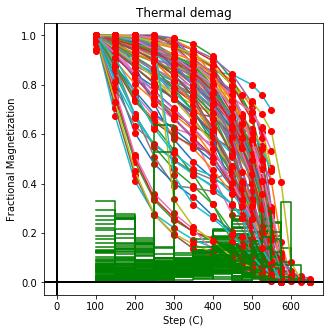

Now let’s look at a downloaded contribution using dmag_magic as before, but this time with thermal demagnetization.

ipmag.download_magic("magic_contribution_16533.txt", dir_path="data_files/download_magic",

input_dir_path="data_files/download_magic")

status,data=cb.add_sites_to_meas_table('data_files/download_magic')

df=data[data.method_codes.str.contains('LT-T-Z', na=False)] # select the thermal demag data (rows with blank method_codes are skipped)

df=df[['specimen','treat_temp','magn_moment','quality']]

df=df.dropna(subset=['treat_temp','magn_moment'])

ipmag.plot_dmag(data=df,title="Thermal demag",fignum=1, dmag_key='treat_temp')

working on: 'contribution'

1 records written to file /Users/nebula/Python/PmagPy/data_files/download_magic/contribution.txt

contribution data put in /Users/nebula/Python/PmagPy/data_files/download_magic/contribution.txt

working on: 'locations'

3 records written to file /Users/nebula/Python/PmagPy/data_files/download_magic/locations.txt

locations data put in /Users/nebula/Python/PmagPy/data_files/download_magic/locations.txt

working on: 'sites'

52 records written to file /Users/nebula/Python/PmagPy/data_files/download_magic/sites.txt

sites data put in /Users/nebula/Python/PmagPy/data_files/download_magic/sites.txt

working on: 'samples'

271 records written to file /Users/nebula/Python/PmagPy/data_files/download_magic/samples.txt

samples data put in /Users/nebula/Python/PmagPy/data_files/download_magic/samples.txt

working on: 'specimens'

225 records written to file /Users/nebula/Python/PmagPy/data_files/download_magic/specimens.txt

specimens data put in /Users/nebula/Python/PmagPy/data_files/download_magic/specimens.txt

working on: 'measurements'

3072 records written to file /Users/nebula/Python/PmagPy/data_files/download_magic/measurements.txt

measurements data put in /Users/nebula/Python/PmagPy/data_files/download_magic/measurements.txt

working on: 'criteria'

20 records written to file /Users/nebula/Python/PmagPy/data_files/download_magic/criteria.txt

criteria data put in /Users/nebula/Python/PmagPy/data_files/download_magic/criteria.txt

working on: 'ages'

20 records written to file /Users/nebula/Python/PmagPy/data_files/download_magic/ages.txt

ages data put in /Users/nebula/Python/PmagPy/data_files/download_magic/ages.txt

dms2dd#

Convert degrees, minutes, seconds to decimal degrees.

help(pmag.dms2dd)

Help on function dms2dd in module pmagpy.pmag:

dms2dd(d)

Converts a list or array degree, minute, second locations to an array of decimal degrees.

Parameters

__________

d : list or array of [deg, min, sec]

Returns

_______

d : input list or array and its corresponding

dd : decimal degree

Examples

________

>>> pmag.dms2dd([60,35,15])

60 35 15

array(60.587500000000006)

pmag.dms2dd([70, 10, 0])

array(70.16666666666667)
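The conversion is just dd = deg + min/60 + sec/3600. The vectorized NumPy sketch below reproduces the two values above. dms_to_dd is a hypothetical name, not pmag.dms2dd. It assumes non-negative fields and does not handle hemisphere signs.

```python
import numpy as np


def dms_to_dd(dms):
    """Convert [deg, min, sec] (or an N x 3 array of them) to decimal degrees.

    Assumes non-negative fields; hemisphere signs are not handled here.
    """
    dms = np.asarray(dms, dtype=float)
    return dms[..., 0] + dms[..., 1] / 60.0 + dms[..., 2] / 3600.0


print(dms_to_dd([60, 35, 15]))
print(dms_to_dd([[60, 35, 15], [70, 10, 0]]))
```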

eqarea#

[Essentials Chapter 2][Essentials Appendix B] [command line version]

The problem of plotting equal area projections in Jupyter notebooks was solved by Nick Swanson-Hysell who started the ipmag module just for this purpose! We use ipmag.plot_net() to plot the net, then ipmag.plot_di() to plot the directions.

help(ipmag.plot_di)

Help on function plot_di in module pmagpy.ipmag:

plot_di(dec=None, inc=None, di_block=None, color='k', marker='o', markersize=20, legend='no', label='', title='', edge='', alpha=1)

Plot declination, inclination data on an equal area plot.

Before this function is called a plot needs to be initialized with code that looks

something like:

>fignum = 1

>plt.figure(num=fignum,figsize=(10,10),dpi=160)

>ipmag.plot_net(fignum)

Required Parameters

-----------

dec : declination being plotted

inc : inclination being plotted

or

di_block: a nested list of [dec,inc,1.0]

(di_block can be provided instead of dec, inc in which case it will be used)

Optional Parameters (defaults are used if not specified)

-----------

color : the default color is black. Other colors can be chosen (e.g. 'r')

marker : the default marker is a circle ('o')

markersize : default size is 20

label : the default label is blank ('')

legend : the default is no legend ('no'). Putting 'yes' will plot a legend.

edge : marker edge color - if blank, is color of marker

alpha : opacity

di_block=np.loadtxt('data_files/eqarea/fishrot.out')

ipmag.plot_net(1)

ipmag.plot_di(di_block=di_block,color='red',edge='black')
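ipmag.plot_net() and ipmag.plot_di() handle the projection for you. To show what "equal area" means, here is a sketch of the Lambert azimuthal equal-area mapping on a net of unit radius, with x pointing east and y pointing north. It is an illustration, not PmagPy's plotting code. Upper- and lower-hemisphere directions map to the same point, so plotting routines typically distinguish them with open versus filled symbols.

```python
import numpy as np


def equal_area_xy(dec, inc):
    """Map directions (degrees) to x (east), y (north) on a unit-radius equal-area net.

    Lambert azimuthal equal-area: r = sqrt(1 - |sin(inc)|), so horizontal
    directions plot on the primitive circle (r = 1) and vertical ones at the
    center (r = 0).
    """
    dec, inc = np.radians(dec), np.radians(inc)
    r = np.sqrt(1.0 - np.abs(np.sin(inc)))
    return r * np.sin(dec), r * np.cos(dec)


x, y = equal_area_xy([0, 90, 0, 180], [0, 0, 90, -30])
print(np.round(x, 3), np.round(y, 3))
```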

eqarea_ell#

[Essentials Chapter 11] [Essentials Chapter 12] [Essentials Appendix B] [command line version]

There are several ways of plotting ellipses for directions. The first is to plot an alpha_95 for each direction (dec, inc).

To do that, we use ipmag.plot_di_mean() to plot each direction and its associated alpha_95.

vectors=np.loadtxt('data_files/eqarea_ell/eqarea_ell_example.txt')

vectors

array([[ 26.9, 15.5, 3.2],

[ 20.9, 14.7, 2.6],

[ 31.8, 16.4, 4.2],

[ 32.8, 6.3, 7.6],

[ 43.7, -4.7, 8.9],

[209.3, -26.1, 7.6],

[224.4, -21.1, 14.3],

[197. , -40.8, 10.3],

[209.4, -2.9, 10.8],

[202.7, -4.6, 4.7],

[220.7, -9. , 13.3],

[219.4, -10.2, 11.4],

[228.9, -17.3, 7.9],

[213.2, -14. , 7.7]])

plt.figure(1)

ipmag.plot_net(1)

for k in range(len(vectors)):
    ipmag.plot_di_mean(vectors[k][0],vectors[k][1],vectors[k][2])

Alternatively, one can make a plot of all the directions and the mean and confidence ellipse for the ensemble.

We make the equal area projections with the ipmag.plot_net() and ipmag.plot_di() functions. The options in eqarea_ell are:

- Bingham mean and ellipse(s)

- Fisher mean(s) and alpha_95(s)

- Kent mean(s) - same as Fisher - and Kent ellipse(s)

- Bootstrapped mean(s) - same as Fisher - and ellipse(s)

- Bootstrapped eigenvectors

For the Bingham mean, the normal and reverse (N/R) data are assumed to be antipodal, and the procedure would be:

- plot the data

- calculate the Bingham ellipse with pmag.dobingham()

- plot the ellipse using ipmag.plot_di_mean_ellipse()

For all other methods, the data are not assumed to be antipodal and must be separated into normal and reverse modes. To do that, you can either use pmag.separate_directions() to split the data and calculate ellipses for each mode, OR use pmag.flip() to flip the reverse mode to the normal mode. To calculate the ellipses:

- calculate the ellipses for each mode (or for the flipped data set):

    - Kent: use pmag.dokent(), setting NN to the number of data points

    - Bootstrap: use pmag.di_boot() to generate the bootstrapped means, then

        - either just plot the eigenvectors (ipmag.plot_di()), OR

        - calculate the bootstrapped ellipses with pmag.dokent(), setting NN to 1

    - Parametric bootstrap: you need a pandas DataFrame with the site mean directions, n and kappa. Then you can use pmag.dir_df_boot().

- plot the ellipses if desired.

# read the data into an array

vectors=np.loadtxt('data_files/eqarea_ell/tk03.out').transpose()

di_block=vectors[0:2].transpose() # column 0 holds the declinations, column 1 the inclinations

di_block

array([[182.7, -64.7],

[354.7, 62.8],

[198.1, -68.1],

[344.8, 61.8],

[194. , -56.5],

[350. , 56.1],

[214.2, -55.3],

[344.9, 56.5],

[172.6, -70.7],

[ 3. , 60.9],

[155.2, -60.2],

[ 8.4, 65.1],

[183.5, -56.5],

[342.5, 56.1],

[175.5, -53.4],

[338.9, 73.3],

[169.8, -56.9],

[347.1, 45.9],

[183.2, -52.5],

[ 12.5, 57.5]])
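The di_block above mixes normal (positive inclination) and reverse (negative inclination) directions. A crude way to put everything into one mode is to split on the sign of the inclination and replace each reverse direction by its antipode (dec + 180, -inc). This is a simplified stand-in. pmag.separate_directions() splits on the principal direction rather than on the inclination sign, so the two methods can disagree for low-inclination data. split_and_flip is a hypothetical helper.

```python
import numpy as np


def split_and_flip(di_block):
    """Split [dec, inc] rows by inclination sign and flip the negative ones.

    Returns (normal, flipped_reverse, combined); the reverse directions are
    replaced by their antipodes: dec + 180 (mod 360) and -inc.
    """
    di = np.asarray(di_block, dtype=float)[:, :2]
    normal = di[di[:, 1] >= 0]
    reverse = di[di[:, 1] < 0]
    flipped = np.column_stack([(reverse[:, 0] + 180.0) % 360.0, -reverse[:, 1]])
    return normal, flipped, np.vstack([normal, flipped])


demo = [[182.7, -64.7], [354.7, 62.8], [198.1, -68.1], [344.8, 61.8]]
normal, flipped, combined = split_and_flip(demo)
print(flipped)
```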

Bingham ellipses#

help(pmag.dobingham)

Help on function dobingham in module pmagpy.pmag:

dobingham(di_block)

Calculates the Bingham mean and associated statistical parameters from

directions that are input as a di_block

Parameters

----------

di_block : a nested list of [dec,inc] or [dec,inc,intensity]

Returns

-------

bpars : dictionary containing the Bingham mean and associated statistics

dictionary keys

dec : mean declination

inc : mean inclination

n : number of datapoints

Eta : major ellipse

Edec : declination of major ellipse axis

Einc : inclination of major ellipse axis

Zeta : minor ellipse

Zdec : declination of minor ellipse axis

Zinc : inclination of minor ellipse axis

help(ipmag.plot_di_mean_ellipse)

Help on function plot_di_mean_ellipse in module pmagpy.ipmag:

plot_di_mean_ellipse(dictionary, fignum=1, color='k', marker='o', markersize=20, label='', legend='no')

Plot a mean direction (declination, inclination) confidence ellipse.

Parameters

-----------

dictionary : a dictionary generated by the pmag.dobingham or pmag.dokent functions

ipmag.plot_net(1)

ipmag.plot_di(di_block=di_block)

bpars=pmag.dobingham(di_block)

ipmag.plot_di_mean_ellipse(bpars,color='red',marker='^',markersize=50)
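The Bingham approach treats the data as axes, so normal and reverse directions reinforce each other instead of cancelling. The core of that idea is the orientation tensor T = (1/N) Σ x xᵀ, whose principal eigenvector is the mean axis. The NumPy sketch below computes only that axis. It does not reproduce the Eta/Zeta ellipse parameters that pmag.dobingham() returns, and principal_axis is a hypothetical name.

```python
import numpy as np


def principal_axis(di_block):
    """Mean axis (dec, inc in degrees) from the largest eigenvector of the orientation tensor.

    The sign of an axis is arbitrary; it is reported here with inc >= 0.
    """
    di = np.radians(np.asarray(di_block, dtype=float)[:, :2])
    dec, inc = di[:, 0], di[:, 1]
    xyz = np.column_stack([np.cos(inc) * np.cos(dec),
                           np.cos(inc) * np.sin(dec),
                           np.sin(inc)])
    T = xyz.T @ xyz / len(xyz)                 # orientation tensor
    eigvals, eigvecs = np.linalg.eigh(T)       # eigenvalues in ascending order
    v = eigvecs[:, -1]                         # eigenvector of the largest eigenvalue
    if v[2] < 0:
        v = -v
    dec_m = np.degrees(np.arctan2(v[1], v[0])) % 360.0
    inc_m = np.degrees(np.arcsin(np.clip(v[2], -1.0, 1.0)))
    return dec_m, inc_m


# two antipodal pairs around a steep axis: a plain vector sum would nearly cancel
demo = [[0, 80], [180, -80], [90, 80], [270, -80]]
print(principal_axis(demo))
```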

Fisher mean, a95#

help(pmag.separate_directions)

Help on function separate_directions in module pmagpy.pmag:

separate_directions(di_block)

Separates set of directions into two modes based on principal direction

Parameters

_______________

di_block : block of nested dec,inc pairs

Return

mode_1_block,mode_2_block : two lists of nested dec,inc pairs

vectors=np.loadtxt('data_files/eqarea_ell/tk03.out').transpose()

di_block=vectors[0:2].transpose() # column 0 holds the declinations, column 1 the inclinations

mode_1,mode_2=pmag.separate_directions(di_block)

help(ipmag.fisher_mean)

Help on function fisher_mean in module pmagpy.ipmag:

fisher_mean(dec=None, inc=None, di_block=None)

Calculates the Fisher mean and associated parameters from either a list of

declination values and a separate list of inclination values or from a

di_block (a nested list of [dec,inc,1.0]). Returns a

dictionary with the Fisher mean and statistical parameters.

Parameters

----------

dec : list of declinations or longitudes

inc : list of inclinations or latitudes

di_block : a nested list of [dec,inc,1.0]

A di_block can be provided instead of dec, inc lists in which case it

will be used. Either dec, inc lists or a di_block need to be provided.

Returns

-------

fisher_mean : dictionary containing the Fisher mean parameters

Examples

--------

Use lists of declination and inclination to calculate a Fisher mean:

>>> ipmag.fisher_mean(dec=[140,127,142,136],inc=[21,23,19,22])

{'alpha95': 7.292891411309177,

'csd': 6.4097743211340896,

'dec': 136.30838974272072,

'inc': 21.347784026899987,

'k': 159.69251473636305,

'n': 4,

'r': 3.9812138971889026}

Use a di_block to calculate a Fisher mean (will give the same output as the

example with the lists):

>>> ipmag.fisher_mean(di_block=[[140,21],[127,23],[142,19],[136,22]])

mode_1_fpars=ipmag.fisher_mean(di_block=mode_1)

mode_2_fpars=ipmag.fisher_mean(di_block=mode_2)
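For reference, here is a from-scratch NumPy sketch of the standard Fisher (1953) statistics. It reproduces the docstring example above:

- R is the length of the resultant of the unit vectors
- k = (N - 1)/(N - R)
- cos(alpha95) = 1 - (N - R)/R [20^(1/(N-1)) - 1]
- csd = 81/sqrt(k)

fisher_stats is a hypothetical name and needs at least two directions; ipmag.fisher_mean() remains the function to use in practice.

```python
import numpy as np


def fisher_stats(dec, inc):
    """Fisher (1953) mean direction and statistics for lists of dec, inc (degrees)."""
    d, i = np.radians(dec), np.radians(inc)
    xyz = np.column_stack([np.cos(i) * np.cos(d), np.cos(i) * np.sin(d), np.sin(i)])
    n = len(xyz)
    resultant = xyz.sum(axis=0)
    r = np.linalg.norm(resultant)                     # length of the resultant vector
    mean = resultant / r
    k = (n - 1) / (n - r)                             # precision parameter estimate
    cos_a95 = 1 - (n - r) / r * (20 ** (1 / (n - 1)) - 1)
    return {
        "dec": np.degrees(np.arctan2(mean[1], mean[0])) % 360,
        "inc": np.degrees(np.arcsin(mean[2])),
        "n": n,
        "r": r,
        "k": k,
        "alpha95": np.degrees(np.arccos(cos_a95)),
        "csd": 81 / np.sqrt(k),                       # angular standard deviation
    }


print(fisher_stats([140, 127, 142, 136], [21, 23, 19, 22]))
```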

help(ipmag.plot_di_mean)

Help on function plot_di_mean in module pmagpy.ipmag:

plot_di_mean(dec, inc, a95, color='k', marker='o', markersize=20, label='', legend='no')

Plot a mean direction (declination, inclination) with alpha_95 ellipse on

an equal area plot.

Before this function is called, a plot needs to be initialized with code

that looks something like:

>fignum = 1

>plt.figure(num=fignum,figsize=(10,10),dpi=160)

>ipmag.plot_net(fignum)

Required Parameters

-----------

dec : declination of mean being plotted

inc : inclination of mean being plotted

a95 : a95 confidence ellipse of mean being plotted

Optional Parameters (defaults are used if not specified)

-----------

color : the default color is black. Other colors can be chosen (e.g. 'r').

marker : the default is a circle. Other symbols can be chosen (e.g. 's').

markersize : the default is 20. Other sizes can be chosen.

label : the default is no label. Labels can be assigned.

legend : the default is no legend ('no'). Putting 'yes' will plot a legend.

# plot the data

ipmag.plot_net(1)

ipmag.plot_di(di_block=di_block,color='red',edge='black')

# draw on the means and alpha95s

ipmag.plot_di_mean(dec=mode_1_fpars['dec'],inc=mode_1_fpars['inc'],a95=mode_1_fpars['alpha95'],\

marker='*',color='blue',markersize=50)

ipmag.plot_di_mean(dec=mode_2_fpars['dec'],inc=mode_2_fpars['inc'],a95=mode_2_fpars['alpha95'],\

marker='*',color='blue',markersize=50)

Kent mean and ellipse#

help(pmag.dokent)

Help on function dokent in module pmagpy.pmag:

dokent(data, NN)

gets Kent parameters for data

Parameters

___________________

data : nested pairs of [Dec,Inc]

NN : normalization

NN is the number of data for Kent ellipse

NN is 1 for Kent ellipses of bootstrapped mean directions

Return

kpars dictionary keys

dec : mean declination

inc : mean inclination

n : number of datapoints

Eta : major ellipse

Edec : declination of major ellipse axis

Einc : inclination of major ellipse axis

Zeta : minor ellipse

Zdec : declination of minor ellipse axis

Zinc : inclination of minor ellipse axis

mode_1_kpars=pmag.dokent(mode_1,len(mode_1))

mode_2_kpars=pmag.dokent(mode_2,len(mode_2))

# plot the data

ipmag.plot_net(1)

ipmag.plot_di(di_block=di_block,color='red',edge='black')

# draw on the means and Kent ellipses

ipmag.plot_di_mean_ellipse(mode_1_kpars,marker='*',color='cyan',markersize=20)

ipmag.plot_di_mean_ellipse(mode_2_kpars,marker='*',color='cyan',markersize=20)

Bootstrap eigenvectors#

help(pmag.di_boot)

Help on function di_boot in module pmagpy.pmag:

di_boot(DIs, nb=5000)

returns bootstrap means for Directional data

Parameters

_________________

DIs : nested list of Dec,Inc pairs

nb : number of bootstrap pseudosamples

Returns

-------

BDIs: nested list of bootstrapped mean Dec,Inc pairs

mode_1_BDIs=pmag.di_boot(mode_1)

mode_2_BDIs=pmag.di_boot(mode_2)

ipmag.plot_net(1)

ipmag.plot_di(di_block=mode_1_BDIs,color='cyan',markersize=1)

ipmag.plot_di(di_block=mode_2_BDIs,color='cyan',markersize=1)

ipmag.plot_di(di_block=di_block,color='red',edge='black')
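pmag.di_boot() returns bootstrap means. The underlying idea is a nonparametric bootstrap: draw N directions with replacement from the N data, average them, and repeat. The minimal sketch below assumes a Fisher (vector) mean for each pseudosample and uses NumPy's default_rng. The seed argument is our addition for reproducibility, and this is not PmagPy's code. The demo directions are the first few normal-polarity rows of the tk03 data shown earlier.

```python
import numpy as np


def mean_direction(di):
    """Fisher mean (dec, inc in degrees) of an N x 2 array of directions."""
    d, i = np.radians(di[:, 0]), np.radians(di[:, 1])
    x = np.cos(i) * np.cos(d)
    y = np.cos(i) * np.sin(d)
    z = np.sin(i)
    r = np.sqrt(x.sum() ** 2 + y.sum() ** 2 + z.sum() ** 2)
    return (np.degrees(np.arctan2(y.sum(), x.sum())) % 360,
            np.degrees(np.arcsin(z.sum() / r)))


def bootstrap_means(di_block, nb=1000, seed=0):
    """Resample the directions with replacement nb times and return the
    mean direction of each pseudosample as an nb x 2 array."""
    di = np.asarray(di_block, dtype=float)[:, :2]
    rng = np.random.default_rng(seed)
    out = np.empty((nb, 2))
    for b in range(nb):
        sample = di[rng.integers(0, len(di), size=len(di))]
        out[b] = mean_direction(sample)
    return out


demo = [[354.7, 62.8], [344.8, 61.8], [350.0, 56.1], [344.9, 56.5], [3.0, 60.9]]
boot = bootstrap_means(demo, nb=500)
print(boot.shape)
```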

Bootstrapped ellipses#

mode_1_bpars=pmag.dokent(mode_1_BDIs,1)

mode_2_bpars=pmag.dokent(mode_2_BDIs,1)

# plot the data

ipmag.plot_net(1)

ipmag.plot_di(di_block=di_block,color='red',edge='black')

# draw on the means and bootstrapped ellipses

ipmag.plot_di_mean_ellipse(mode_1_bpars,marker='*',color='cyan',markersize=20)

ipmag.plot_di_mean_ellipse(mode_2_bpars,marker='*',color='cyan',markersize=20)

eqarea_magic#

[Essentials Chapter 2] [MagIC Database] [command line version]

eqarea_magic takes MagIC data model 3 files and makes equal area projections of declination, inclination data for a variety of selections, i.e., all the data, by site, by sample, or by specimen.

It has the option to plot in different coordinate systems (if available) and various ellipses. It will also make a color contour plot if desired.

We will do this with ipmag.plot_net() and ipmag.plot_di() using Pandas filtering capability.

Let’s start with a simple plot of site mean directions, assuming that they were interpreted from measurements using pmag_gui.py or some such program and have all the required meta-data.

We want data in geographic coordinates (dir_tilt_correction=0). The keys for directions are dir_dec and dir_inc. One could add the ellipses using ipmag.plot_di_mean_ellipse().

whole study#

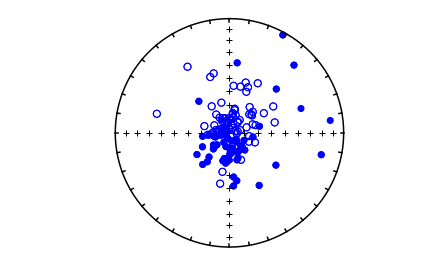

sites=pd.read_csv('data_files/eqarea_magic/sites.txt',sep='\t',header=1)

site_dirs=sites[sites['dir_tilt_correction']==0]

ipmag.plot_net(1)

di_block=site_dirs[['dir_dec','dir_inc']].values # use only the geographic-coordinate rows selected above

#ipmag.plot_di(site_dirs['dir_dec'].values,site_dirs['dir_inc'].values,color='blue',markersize=50)

ipmag.plot_di(di_block=di_block,color='blue',markersize=50)

# or, using ipmag.eqarea_magic:

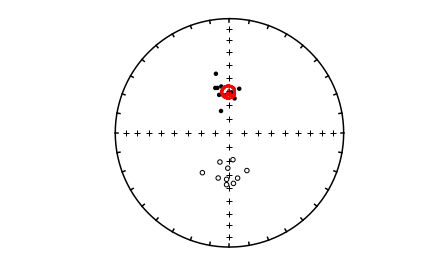

ipmag.eqarea_magic('data_files/eqarea_magic/sites.txt', save_plots=False)

388 sites records read in

All

(True, [])

# with a contribution

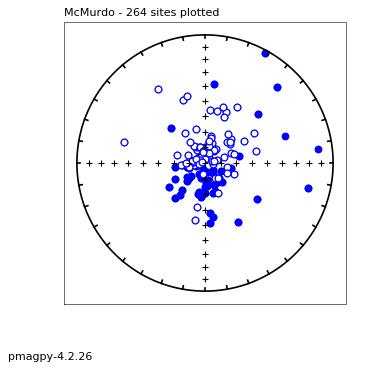

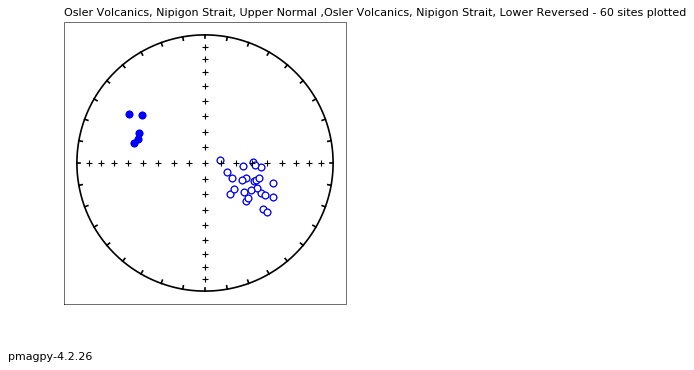

con = cb.Contribution("data_files/3_0/Osler")

ipmag.eqarea_magic(crd='t', fmt='png', contribution=con, source_table='sites', save_plots=False)

90 sites records read in

All

(True, [])

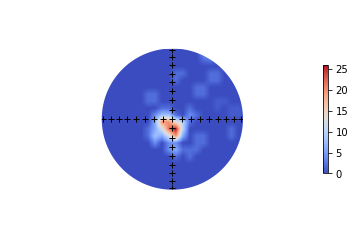

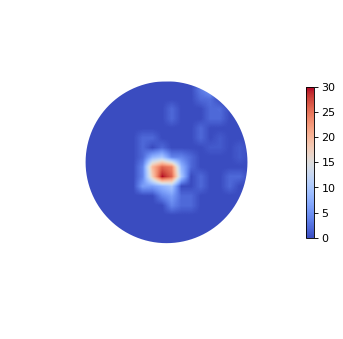

whole study with color contour option#

For this we can use the function pmagplotlib.plot_eq_cont(), which makes a color contour plot of dec, inc data.
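One common way to build such a contour is a kernel density estimate evaluated on a grid of equal-area points. The sketch below assumes a Fisher-shaped kernel with an arbitrary kappa of 50 and lower-hemisphere (positive inclination) data. `density_grid` is hypothetical, and plot_eq_cont() may use a different method and smoothing.

```python
import numpy as np


def dir2cart(dec, inc):
    """Unit vectors (x north, y east, z down) from dec/inc in degrees."""
    d, i = np.radians(dec), np.radians(inc)
    return np.column_stack([np.cos(d) * np.cos(i), np.sin(d) * np.cos(i), np.sin(i)])


def density_grid(di_block, kappa=50.0, n=101):
    """Hypothetical helper: Fisher-kernel density on a lower-hemisphere equal-area grid."""
    di_block = np.asarray(di_block, dtype=float)
    data = dir2cart(di_block[:, 0], di_block[:, 1])
    xs = np.linspace(-1, 1, n)
    X, Y = np.meshgrid(xs, xs)          # X points east, Y points north
    r = np.hypot(X, Y)
    # invert the equal-area mapping r = sqrt(1 - sin(inc))
    inc = np.degrees(np.arcsin(np.clip(1 - r ** 2, -1, 1)))
    dec = np.degrees(np.arctan2(X, Y)) % 360
    grid = dir2cart(dec.ravel(), inc.ravel())
    # each datum contributes exp(kappa * (cos(angle) - 1)), which equals 1 at zero distance
    dens = np.exp(kappa * (grid @ data.T - 1)).sum(axis=1).reshape(X.shape)
    dens[r > 1] = np.nan                # outside the primitive circle
    return X, Y, dens


X, Y, dens = density_grid(np.array([[0., 90.], [120., 90.], [240., 90.]]))
```

X, Y and dens can be passed to matplotlib's contourf(). Upper-hemisphere data would need to be flipped to their antipodes first.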

help(pmagplotlib.plot_eq_cont)

Help on function plot_eq_cont in module pmagpy.pmagplotlib:

plot_eq_cont(fignum, DIblock, color_map='coolwarm')

plots dec inc block as a color contour

Parameters

__________________

Input:

fignum : figure number

DIblock : nested pairs of [Declination, Inclination]

color_map : matplotlib color map [default is coolwarm]

Output:

figure

ipmag.plot_net(1)

pmagplotlib.plot_eq_cont(1,di_block)

# with ipmag.eqarea_magic

ipmag.eqarea_magic('data_files/eqarea_magic/sites.txt', save_plots=False, contour=True)

388 sites records read in

All

(True, [])

specimens by site#

This study averaged specimens (not samples) by site, so we would like to make plots of all the specimen data for each site. We can do this in a similar way to what we did in the dmag_magic example.

A few particulars:

We will be plotting specimen interpretations in geographic coordinates (dir_tilt_correction=0).

We need to look at the method codes as there might be fisher means, principal components, great circles, etc. A complete list of method codes for Direction Estimation can be found here: https://www2.earthref.org/MagIC/method-codes

There might be ‘bad’ directions (‘result_quality’=’b’ as opposed to ‘g’).

There are a lot of sites in this study, so let’s just look at the first 10…
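The particulars above can be handled in a single function. The sketch below assumes plain pandas and the MagIC column names used in this notebook (sample, site, dir_tilt_correction, result_quality, method_codes). `split_specimen_directions` is hypothetical. Unlike the cells below, it also drops directions flagged 'b'.

```python
import pandas as pd


def split_specimen_directions(spec_df, samp_df, tilt=0):
    """Hypothetical helper: return (lines, planes) for one coordinate system."""
    # attach the site name to every specimen through its sample
    df = spec_df.merge(samp_df[['sample', 'site']], on='sample', how='inner')
    # MagIC convention: 0 = geographic, 100 = tilt corrected
    df = df[df['dir_tilt_correction'] == tilt]
    if 'result_quality' in df.columns:
        # keep 'g' and unflagged rows; drop directions flagged 'b'
        df = df[df['result_quality'] != 'b']
    # na=False treats a missing method code as "not a plane"
    is_plane = df['method_codes'].str.contains('DE-BFP', na=False).astype(bool)
    return df[~is_plane], df[is_plane]
```

Calling `split_specimen_directions(spec_df, samp_df)` on the tables read below would return the line and plane data frames in one step.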

# read in specimen table

spec_df=pd.read_csv('data_files/eqarea_magic/specimens.txt',sep='\t',header=1)

# read in sample table

samp_df=pd.read_csv('data_files/eqarea_magic/samples.txt',sep='\t',header=1)

# get only what we need from samples (sample to site mapping)

samp_df=samp_df[['sample','site']]

# merge site to specimen name in the specimen data frame

df_ext=pd.merge(spec_df,samp_df,how='inner',on='sample')

# truncate to the first 10 sites

sites=df_ext.site.unique()[0:10]

We need to filter the specimen data for dir_tilt_correction=0 and separate the best-fit planes (DE-BFP) from the other directions.

# get the geographic coordinates

spec_df=df_ext[df_ext.dir_tilt_correction==0]

# filter to exclude planes

spec_lines=spec_df[spec_df.method_codes.str.contains('DE-BFP')==False]

# filter for planes

spec_df_gc=spec_df[spec_df.method_codes.str.contains('DE-BFP')==True]

# here's a new one:

help(ipmag.plot_gc)

Help on function plot_gc in module pmagpy.ipmag:

plot_gc(poles, color='g', fignum=1)

plots a great circle on an equal area projection

Parameters

____________________

Input

fignum : number of matplotlib object

poles : nested list of [Dec,Inc] pairs of poles

color : color of lower hemisphere dots for great circle - must be in form: 'g','r','y','k',etc.

upper hemisphere is always cyan
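ipmag.plot_gc() takes the poles to the best-fit planes. The plane itself is the great circle of directions lying 90° from its pole. The sketch below generates such points from two unit vectors perpendicular to the pole. `great_circle_from_pole` is a hypothetical helper, not how PmagPy draws the circle.

```python
import numpy as np


def great_circle_from_pole(pole_dec, pole_inc, npts=181):
    """Hypothetical helper: (dec, inc) points on the great circle 90 degrees from a pole."""
    d, i = np.radians(pole_dec), np.radians(pole_inc)
    p = np.array([np.cos(d) * np.cos(i), np.sin(d) * np.cos(i), np.sin(i)])
    # two unit vectors perpendicular to the pole and to each other span the plane
    helper = np.array([0.0, 0.0, 1.0]) if abs(p[2]) < 0.9 else np.array([1.0, 0.0, 0.0])
    u = np.cross(p, helper)
    u /= np.linalg.norm(u)
    v = np.cross(p, u)
    t = np.linspace(0.0, 2.0 * np.pi, npts)
    pts = np.outer(np.cos(t), u) + np.outer(np.sin(t), v)
    dec = np.degrees(np.arctan2(pts[:, 1], pts[:, 0])) % 360
    inc = np.degrees(np.arcsin(np.clip(pts[:, 2], -1.0, 1.0)))
    return np.column_stack([dec, inc])


gc = great_circle_from_pole(30., 20.)
```

Points with negative inclination fall on the upper hemisphere. According to its docstring, plot_gc() always draws that part in cyan.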

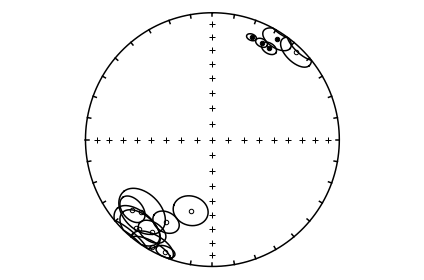

cnt=1

for site in sites:
    plt.figure(cnt)
    ipmag.plot_net(cnt)
    plt.title(site)
    site_lines=spec_lines[spec_lines.site==site] # fish out this site
    ipmag.plot_di(site_lines.dir_dec.values,site_lines.dir_inc.values)
    site_planes=spec_df_gc[spec_df_gc.site==site]
    poles=site_planes[['dir_dec','dir_inc']].values
    if poles.shape[0]>0:
        ipmag.plot_gc(poles,fignum=cnt,color='r')
    cnt+=1

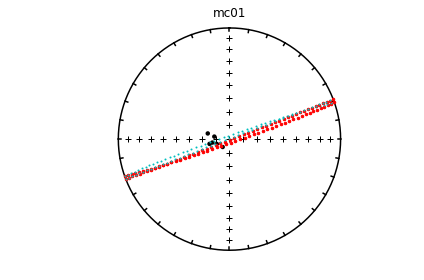

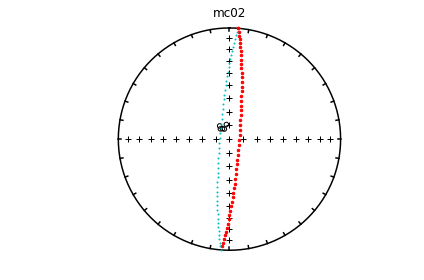

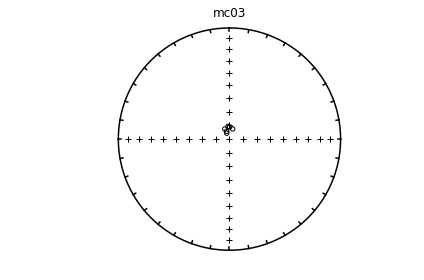

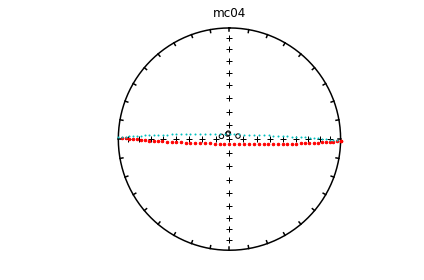

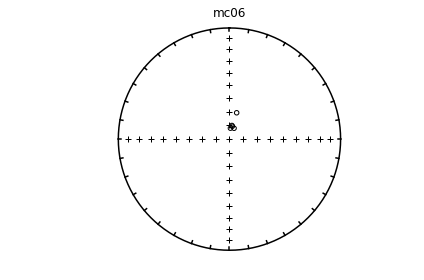

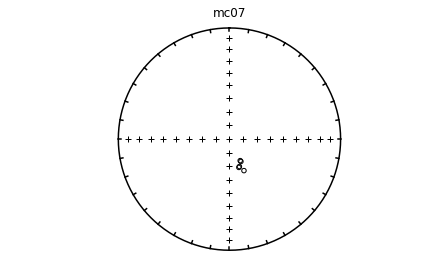

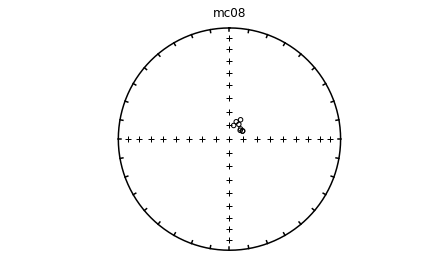

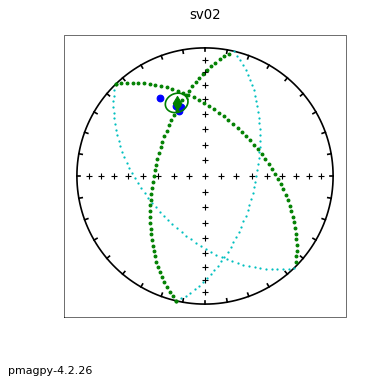

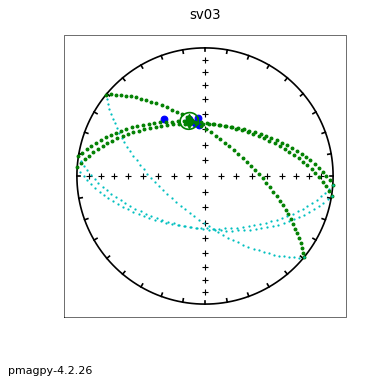

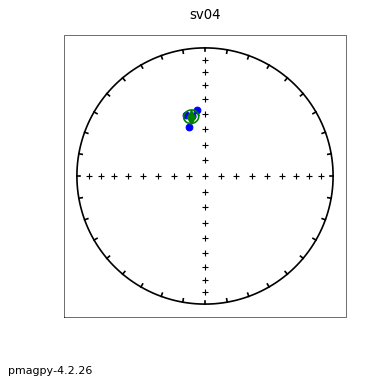

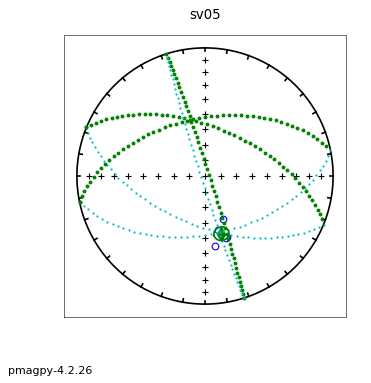

# using ipmag.eqarea_magic:

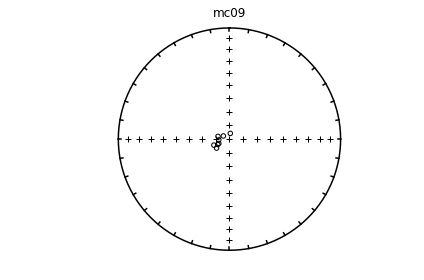

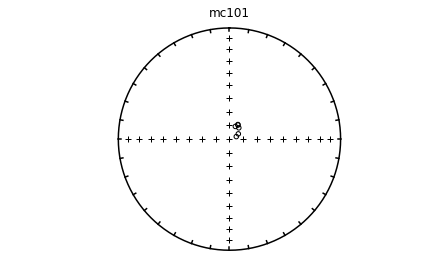

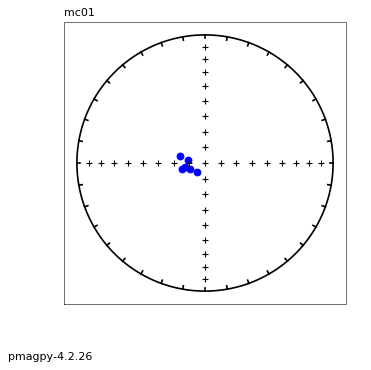

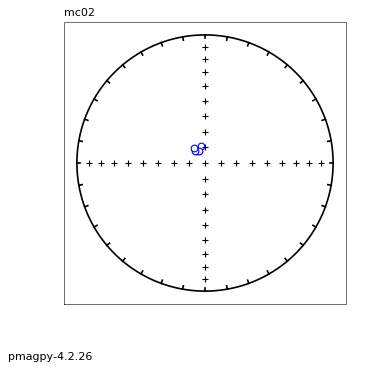

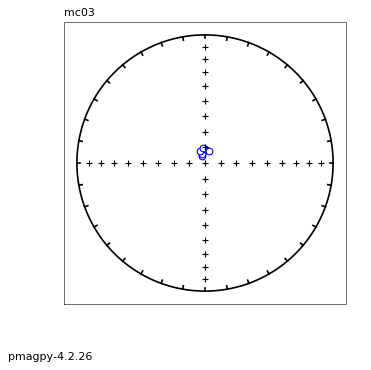

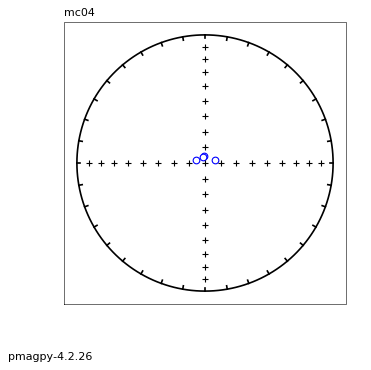

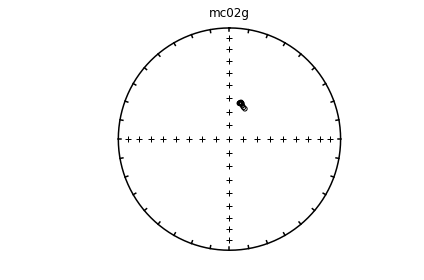

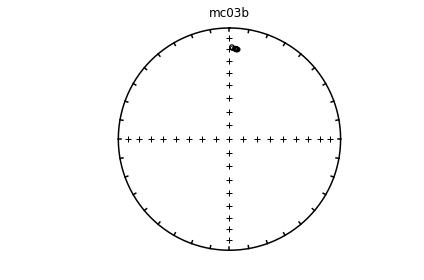

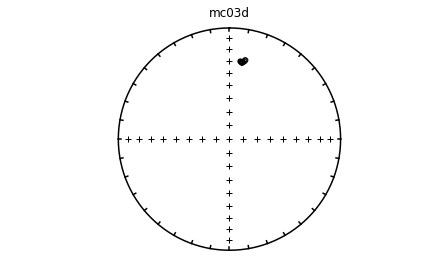

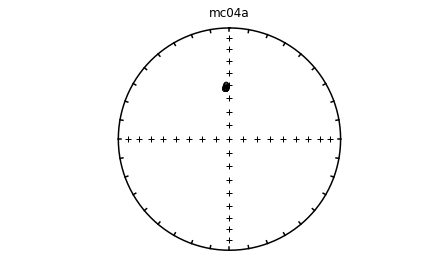

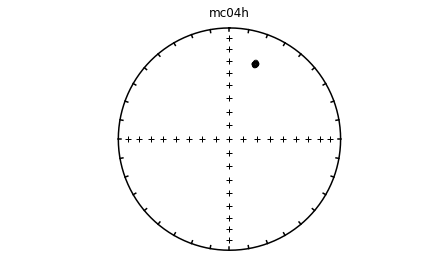

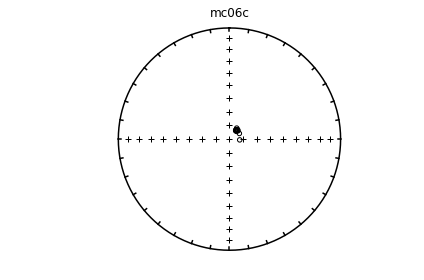

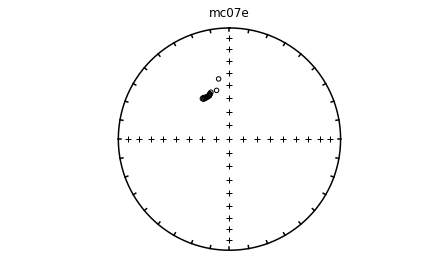

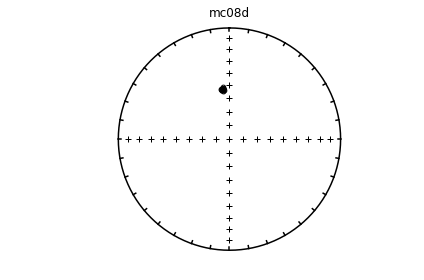

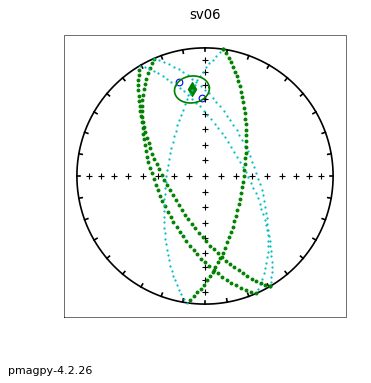

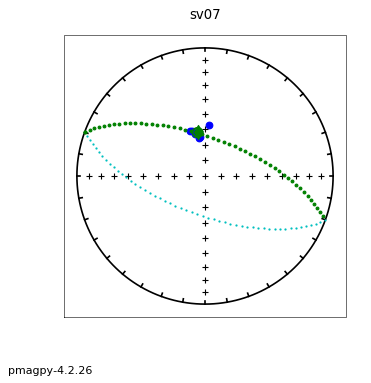

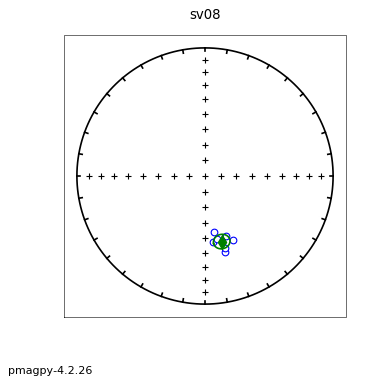

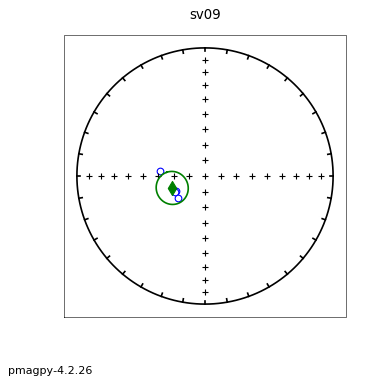

ipmag.eqarea_magic('specimens.txt', 'data_files/eqarea_magic', plot_by='sit', save_plots=False)

1374 specimens records read in

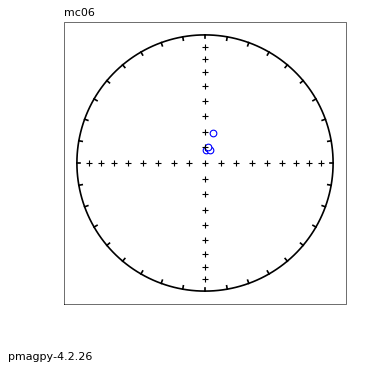

mc01

mc02

mc03

mc04

mc06

(True, [])

measurements by specimen#

We can do this as follows:

Read the MagIC data model 3 measurements table into a Pandas data frame.

Get a list of unique specimen names.

Truncate this list to the first 10 for this purpose.

Plot the dir_dec and dir_inc fields by specimen.
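The last step amounts to filtering the data frame once per specimen. As an alternative, the sketch below iterates over pandas groupby() groups in order of first appearance and stops after n of them. `first_n_groups` is a hypothetical helper.

```python
import pandas as pd


def first_n_groups(df, key='specimen', n=10):
    """Hypothetical helper: yield (name, rows) for the first n groups, in order of appearance."""
    for count, (name, group) in enumerate(df.groupby(key, sort=False)):
        if count >= n:
            break
        yield name, group
```

In the notebook, the loop body would stay the same: call ipmag.plot_net() and ipmag.plot_di() with grp.dir_dec.values and grp.dir_inc.values for each `spec, grp` pair.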

# read in measurements table

meas_df=pd.read_csv('data_files/eqarea_magic/measurements.txt',sep='\t',header=1)

specimens=meas_df.specimen.unique()[0:10]

cnt=1

for spec in specimens:
    meas_spc=meas_df[meas_df.specimen==spec] # fish out this specimen
    plt.figure(cnt)
    ipmag.plot_net(cnt)
    plt.title(spec)
    ipmag.plot_di(meas_spc.dir_dec.values,meas_spc.dir_inc.values)
    cnt+=1

Individual specimens#

# using ipmag.eqarea_magic:

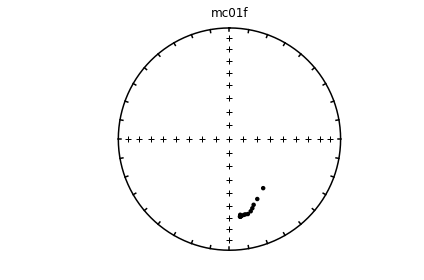

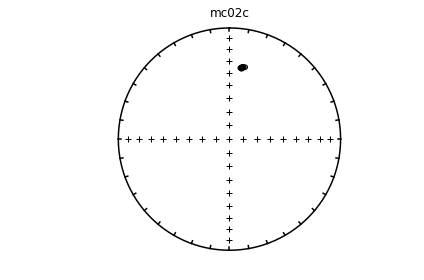

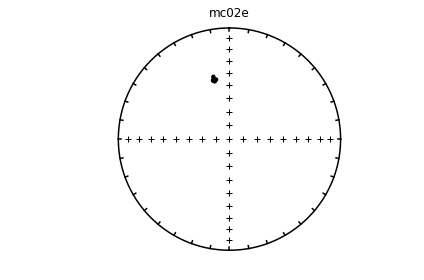

ipmag.eqarea_magic('specimens.txt', 'data_files/eqarea_magic', plot_by='spc', save_plots=False)

1374 specimens records read in

mc01a

no records for plotting

mc01b

mc01c

mc01d

mc01e

(True, [])

find_ei#

[Essentials Chapter 14] [MagIC Database] [command line version]

This program is meant to find the unflattening factor (see unsquish documentation) that brings a sedimentary data set into agreement with the statistical field model TK03 of Tauxe and Kent (2004, doi: 10.1029/145GM08). It has been implemented for notebooks as ipmag.find_ei().
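The flattening model that find_ei "unsquishes" is the tangent relation tan(I_o) = f tan(I_f). Here I_o is the observed (flattened) inclination, I_f the field inclination, and f the flattening factor (0 < f ≤ 1). The sketch below shows the relation and its inverse; `squish` and `unsquish` are hypothetical function names.

```python
import numpy as np


def squish(inc, f):
    """Hypothetical helper: flatten inclinations, tan(I_o) = f * tan(I_f)."""
    return np.degrees(np.arctan(f * np.tan(np.radians(inc))))


def unsquish(inc, f):
    """Hypothetical helper: undo flattening, tan(I_f) = tan(I_o) / f."""
    return np.degrees(np.arctan(np.tan(np.radians(inc)) / f))


incs = np.array([20.0, 45.0, 61.0, -30.0])
flat = squish(incs, 0.5)
```

find_ei applies the inverse step for a series of assumed f values. It then keeps the f whose unflattened data have an elongation/inclination pair consistent with TK03.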

A data file (data_files/find_EI/find_EI_example.dat) was prepared using the program tk03 to simulate directions at a latitude of 42\(^{\circ}\), with an expected inclination of 61\(^{\circ}\) (which could be obtained using dipole_pinc, of course).
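The expected inclination comes from the geocentric axial dipole relation tan I = 2 tan λ, where λ is the (paleo)latitude. The sketch below covers this relation and its inverse; `dipole_inc` and `dipole_lat` are hypothetical names, not the dipole_pinc program itself.

```python
import numpy as np


def dipole_inc(lat):
    """Hypothetical helper: dipole inclination (degrees) at latitude lat, tan I = 2 tan(lat)."""
    return np.degrees(np.arctan(2 * np.tan(np.radians(lat))))


def dipole_lat(inc):
    """Hypothetical helper: paleolatitude (degrees) implied by inclination inc."""
    return np.degrees(np.arctan(np.tan(np.radians(inc)) / 2))
```

For example, dipole_inc(42) gives about 61°, the expected inclination quoted above.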

help(ipmag.find_ei)

Help on function find_ei in module pmagpy.ipmag:

find_ei(data, nb=1000, save=False, save_folder='.', fmt='svg', site_correction=False, return_new_dirs=False)

Applies series of assumed flattening factor and "unsquishes" inclinations assuming tangent function.

Finds flattening factor that gives elongation/inclination pair consistent with TK03;

or, if correcting by site instead of for study-level secular variation,

finds flattening factor that minimizes elongation and most resembles a

Fisherian distribution.

Finds bootstrap confidence bounds

Required Parameter

-----------

data: a nested list of dec/inc pairs

Optional Parameters (defaults are used unless specified)

-----------

nb: number of bootstrapped pseudo-samples (default is 1000)

save: Boolean argument to save plots (default is False)

save_folder: path to folder in which plots should be saved (default is current directory)

fmt: specify format of saved plots (default is 'svg')

site_correction: Boolean argument to specify whether to "unsquish" data to

1) the elongation/inclination pair consistent with TK03 secular variation model

(site_correction = False)

or

2) a Fisherian distribution (site_correction = True). Default is FALSE.

Note that many directions (~ 100) are needed for this correction to be reliable.

return_new_dirs: optional return of newly "unflattened" directions (default is False)

Returns

-----------

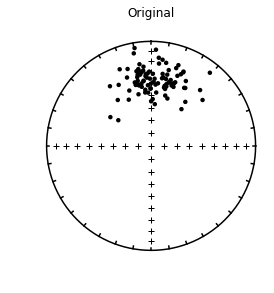

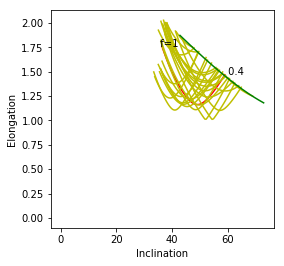

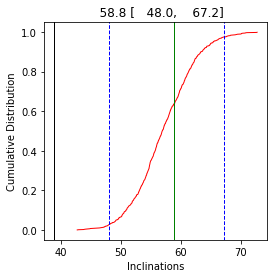

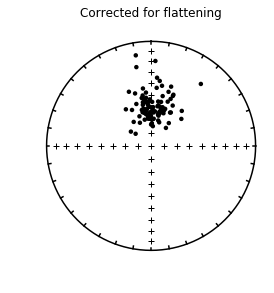

four plots: 1) equal area plot of original directions

2) Elongation/inclination pairs as a function of f, data plus 25 bootstrap samples

3) Cumulative distribution of bootstrapped optimal inclinations plus uncertainties.

Estimate from original data set plotted as solid line

4) Orientation of principle direction through unflattening

NOTE: If distribution does not have a solution, plot labeled: Pathological. Some bootstrap samples may have

valid solutions and those are plotted in the CDFs and E/I plot.

data=np.loadtxt('data_files/find_EI/find_EI_example.dat')

ipmag.find_ei(data)

Bootstrapping.... be patient

The original inclination was: 38.92904490925402

The corrected inclination is: 58.83246032206779

with bootstrapped confidence bounds of: 47.97995046611144 to 67.19209713147673

and elongation parameter of: 1.4678654859428288

The flattening factor is: 0.4249999999999995

In this example, the expected inclination at a paleolatitude of 42\(^{\circ}\) (61\(^{\circ}\)) is recovered within the 95% confidence bounds.

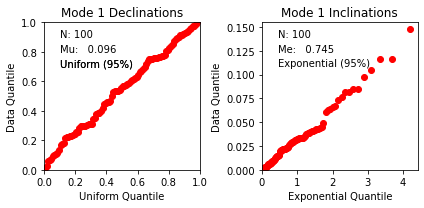

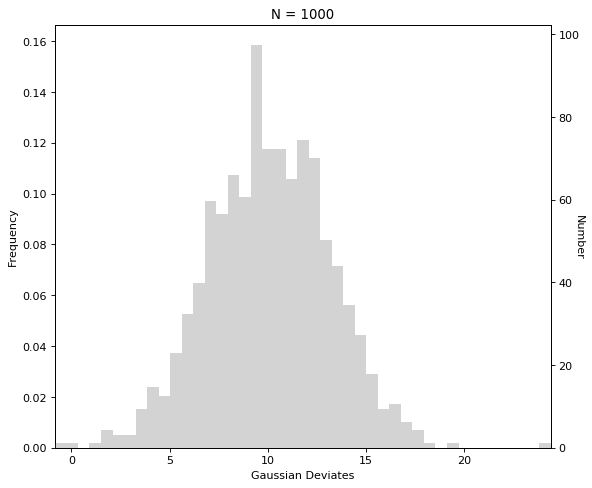

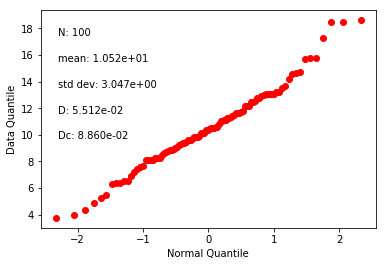

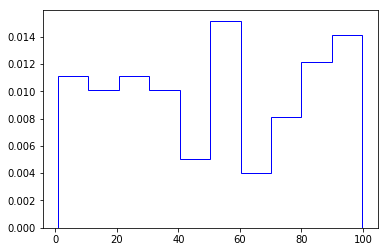

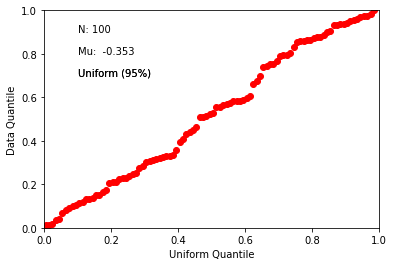

fishqq#

[Essentials Chapter 11] [command line version]

This program tests whether a given directional data set is Fisher distributed using a Quantile-Quantile plot (see also qqunf or qqplot for more on Quantile-Quantile plots).

Blessedly, fishqq has been incorporated into ipmag.fishqq() for use within notebooks.
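The two Q-Q plots compare the data with what a Fisher distribution predicts about its mean direction. Azimuths around the mean should be uniform. The quantity 1 - cos(θ), with θ the angle from the mean, follows an exponential distribution with rate κ (truncated at 2). The sketch below builds both sets of Q-Q coordinates for a single-mode data set. `fisher_qq_pairs` is hypothetical: it does not reproduce the Mu and Me statistics or the exact procedure of ipmag.fishqq().

```python
import numpy as np


def dir2cart(dec, inc):
    """Unit vectors (x north, y east, z down) from dec/inc in degrees."""
    d, i = np.radians(dec), np.radians(inc)
    return np.column_stack([np.cos(d) * np.cos(i), np.sin(d) * np.cos(i), np.sin(i)])


def fisher_qq_pairs(di_block):
    """Hypothetical helper: (expected, observed) pairs for the uniform and exponential Q-Q plots."""
    di_block = np.asarray(di_block, dtype=float)
    x = dir2cart(di_block[:, 0], di_block[:, 1])
    m = x.sum(axis=0)
    m /= np.linalg.norm(m)                      # mean direction (single mode assumed)
    # orthonormal pair (e1, e2) perpendicular to the mean
    helper = np.array([0.0, 0.0, 1.0]) if abs(m[2]) < 0.9 else np.array([1.0, 0.0, 0.0])
    e1 = np.cross(m, helper)
    e1 /= np.linalg.norm(e1)
    e2 = np.cross(m, e1)
    n = len(x)
    probs = (np.arange(1, n + 1) - 0.5) / n     # plotting positions
    # azimuth about the mean, scaled to [0, 1]: uniform if the data are Fisherian
    az = np.arctan2(x @ e2, x @ e1) % (2 * np.pi) / (2 * np.pi)
    uniform = np.column_stack([probs, np.sort(az)])
    # 1 - cos(angle from the mean): truncated exponential with rate kappa
    u = 1.0 - np.clip(x @ m, -1.0, 1.0)
    exponential = np.column_stack([-np.log(1.0 - probs), np.sort(u)])
    return uniform, exponential


ring = np.column_stack([np.arange(0, 360, 10.0), np.full(36, 80.0)])
uni, expo = fisher_qq_pairs(ring)
```

Plotting column 1 against column 0 of each array gives the two Q-Q plots. For a Fisherian data set, both should be close to straight lines.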

help(ipmag.fishqq)

Help on function fishqq in module pmagpy.ipmag:

fishqq(lon=None, lat=None, di_block=None)

Test whether a distribution is Fisherian and make a corresponding Q-Q plot.

The Q-Q plot shows the data plotted against the value expected from a

Fisher distribution. The first plot is the uniform plot which is the

Fisher model distribution in terms of longitude (declination). The second

plot is the exponential plot which is the Fisher model distribution in terms

of latitude (inclination). In addition to the plots, the test statistics Mu

(uniform) and Me (exponential) are calculated and compared against the

critical test values. If Mu or Me are too large in comparision to the test

statistics, the hypothesis that the distribution is Fisherian is rejected

(see Fisher et al., 1987).

Parameters:

-----------

lon : longitude or declination of the data

lat : latitude or inclination of the data

or

di_block: a nested list of [dec,inc]

A di_block can be provided in which case it will be used instead of

dec, inc lists.

Output:

-----------

dictionary containing

lon : mean longitude (or declination)

lat : mean latitude (or inclination)

N : number of vectors

Mu : Mu test statistic value for the data

Mu_critical : critical value for Mu

Me : Me test statistic value for the data

Me_critical : critical value for Me

if the data has two modes with N >=10 (N and R)

two of these dictionaries will be returned

Examples

--------

In this example, directions are sampled from a Fisher distribution using

``ipmag.fishrot`` and then the ``ipmag.fishqq`` function is used to test

whether that distribution is Fisherian:

>>> directions = ipmag.fishrot(k=40, n=50, dec=200, inc=50)

>>> ipmag.fishqq(di_block = directions)

{'Dec': 199.73564290371894,

'Inc': 49.017612342358298,

'Me': 0.78330310031220352,

'Me_critical': 1.094,

'Mode': 'Mode 1',

'Mu': 0.69915926146177099,

'Mu_critical': 1.207,

'N': 50,

'Test_result': 'consistent with Fisherian model'}

The above example passed a di_block to the function as an input. Lists of

paired declination and inclination can also be used as inputs. Here the

directions di_block is unpacked to separate declination and inclination

lists using the ``ipmag.unpack_di_block`` functionwhich are then used as

input to fishqq:

>>> dec_list, inc_list = ipmag.unpack_di_block(directions)

>>> ipmag.fishqq(lon=dec_list, lat=inc_list)

di_block=np.loadtxt('data_files/fishqq/fishqq_example.txt')

ipmag.fishqq(di_block=di_block)

{'Mode': 'Mode 1',

'Dec': 305.2748079001374,

'Inc': 89.0552105546049,

'N': 100,

'Mu': 0.09595300000000008,

'Mu_critical': 1.207,

'Me': 0.744655603828423,

'Me_critical': 1.094,

'Test_result': 'consistent with Fisherian model'}

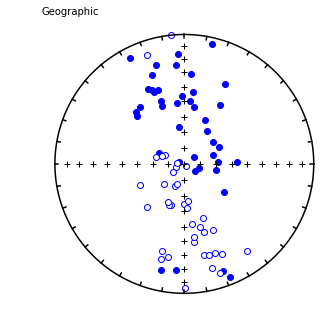

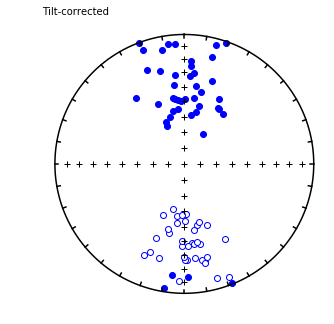

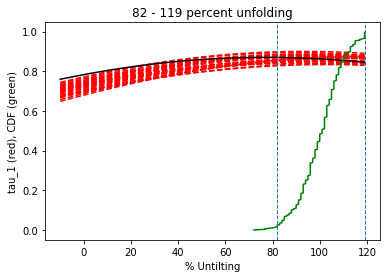

foldtest#

[Essentials Chapter 12] [command line version]

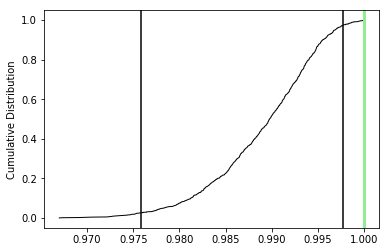

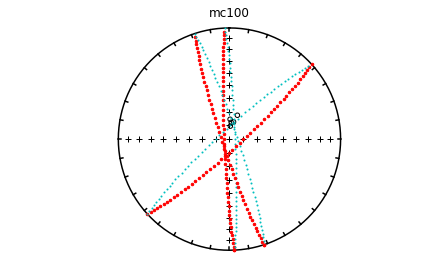

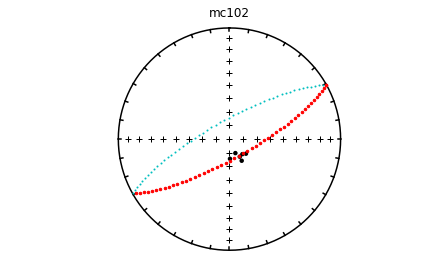

foldtest uses the fold test of Tauxe and Watson (1994, 10.1016/0012-821x(94)90006-x) to find the degree of unfolding that produces the tightest distribution of directions (using the eigenvalue \(\tau_1\) as the criterion).

This can be done via ipmag.bootstrap_fold_test(). Note that this can take several minutes.
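The bootstrap repeats one deterministic calculation for every pseudo-sample. It untilts the directions by a range of percentages and tracks \(\tau_1\), the largest eigenvalue of the orientation tensor. The sketch below covers only that inner calculation, for the original data, with no resampling and no bedding_error. It assumes the [dec, inc, dip_direction, dip] column layout that bootstrap_fold_test expects and a right-hand-rule strike of dip_direction - 90. `untilt`, `tau1` and `tau1_curve` are hypothetical helpers.

```python
import numpy as np


def dir2cart(dec, inc):
    """Unit vectors (x north, y east, z down) from dec/inc in degrees."""
    d, i = np.radians(dec), np.radians(inc)
    return np.column_stack([np.cos(d) * np.cos(i), np.sin(d) * np.cos(i), np.sin(i)])


def untilt(vecs, dip_dir, dip, frac):
    """Hypothetical helper: rotate each vector about its bed's strike by frac of the dip."""
    dd = np.radians(dip_dir)
    # horizontal strike axis at azimuth dip_dir - 90 (right-hand rule)
    axis = np.column_stack([np.sin(dd), -np.cos(dd), np.zeros_like(dd)])
    phi = -np.radians(dip) * frac            # frac = 1 brings the bed back to horizontal
    c, s = np.cos(phi)[:, None], np.sin(phi)[:, None]
    along = np.sum(axis * vecs, axis=1)[:, None]
    # Rodrigues' rotation formula, one axis and angle per row
    return vecs * c + np.cross(axis, vecs) * s + axis * along * (1 - c)


def tau1(vecs):
    """Hypothetical helper: largest eigenvalue of T = (1/N) sum(x x^T)."""
    return np.linalg.eigvalsh(vecs.T @ vecs / len(vecs))[-1]


def tau1_curve(data, percents=None):
    """Hypothetical helper: tau1 for each percent untilting of [dec, inc, dip_direction, dip]."""
    data = np.asarray(data, dtype=float)
    if percents is None:
        percents = np.arange(-10, 121)       # same default range as bootstrap_fold_test
    vecs = dir2cart(data[:, 0], data[:, 1])
    taus = np.array([tau1(untilt(vecs, data[:, 2], data[:, 3], p / 100.0))
                     for p in percents])
    return percents, taus
```

percents[np.argmax(taus)] gives the best untilting for this one data set. The bootstrap repeats this on resampled data to obtain the confidence bounds shown in the third plot.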

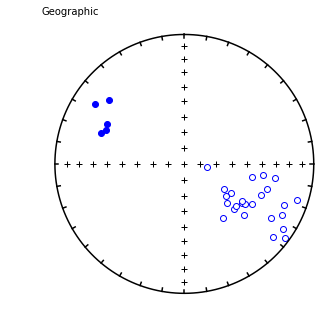

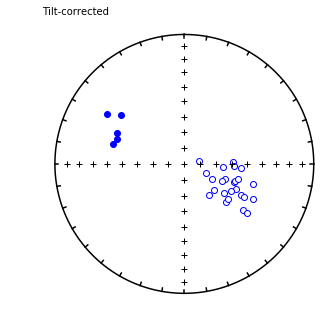

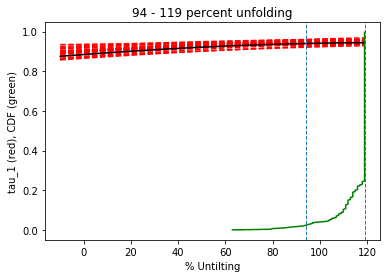

help(ipmag.bootstrap_fold_test)

Help on function bootstrap_fold_test in module pmagpy.ipmag:

bootstrap_fold_test(Data, num_sims=1000, min_untilt=-10, max_untilt=120, bedding_error=0, save=False, save_folder='.', fmt='svg', ninety_nine=False)

Conduct a bootstrap fold test (Tauxe and Watson, 1994)

Three plots are generated: 1) equal area plot of uncorrected data;

2) tilt-corrected equal area plot; 3) bootstrap results showing the trend

of the largest eigenvalues for a selection of the pseudo-samples (red

dashed lines), the cumulative distribution of the eigenvalue maximum (green

line) and the confidence bounds that enclose 95% of the pseudo-sample

maxima. If the confidence bounds enclose 100% unfolding, the data "pass"

the fold test.

Parameters

----------

Data : a numpy array of directional data [dec, inc, dip_direction, dip]

num_sims : number of bootstrap samples (default is 1000)

min_untilt : minimum percent untilting applied to the data (default is -10%)

max_untilt : maximum percent untilting applied to the data (default is 120%)

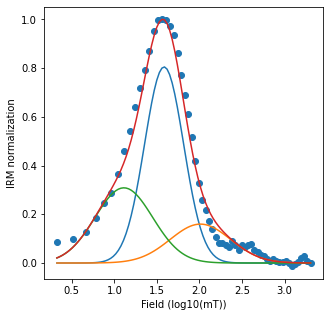

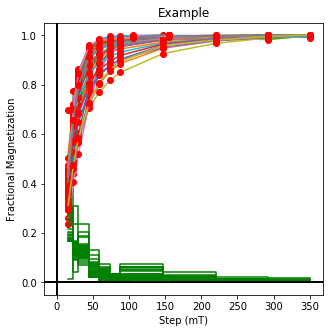

bedding_error : (circular standard deviation) for uncertainty on bedding poles

save : optional save of plots (default is False)

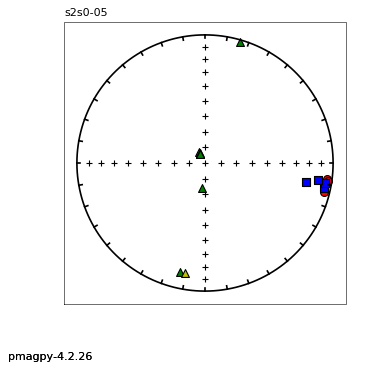

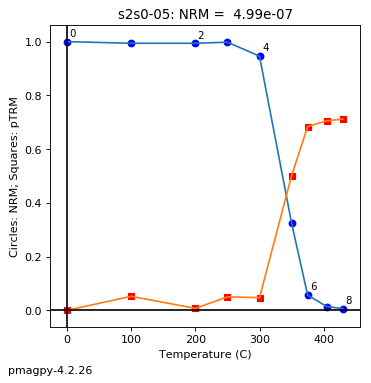

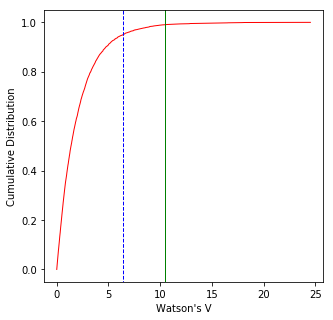

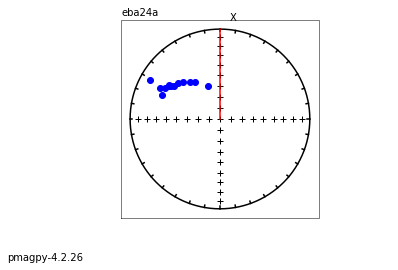

save_folder : path to directory where plots should be saved