Working with the MagIC database using PmagPy#

The Magnetics Information Consortium (MagIC) maintains a database of published rock and paleomagnetic data: https://www.earthref.org/MagIC

Many PmagPy scripts are designed to work with data in the MagIC format. This notebook uses Data Model 3.0: https://www.earthref.org/MagIC/data-models/3.0

Overview of MagIC#

The Magnetics Information Consortium (MagIC), hosted at http://earthref.org/MagIC, is a database that serves as a Findable, Accessible, Interoperable, Reusable (FAIR) archive for paleomagnetic and rock magnetic data. Its data model is fully described here: https://www2.earthref.org/MagIC/data-models/3.0. Each contribution is associated with a publication via the DOI. There are nine data tables:

contribution: metadata of the associated publication.

locations: metadata for locations, which are groups of sites (e.g., stratigraphic section, region, etc.)

sites: metadata and derived data at the site level (units with a common expectation)

samples: metadata and derived data at the sample level.

specimens: metadata and derived data at the specimen level.

measurements: measurement data and metadata for the experiments run on specimens.

criteria: criteria by which data are deemed acceptable

ages: ages and metadata for sites/samples/specimens

images: associated images and plots.

MagIC .txt files all have the delimiter (tab) and the type of table in the first line and the column headers in the second line:

See the first few lines of a samples.txt table by clicking on and running the following cell:

with open('data_files/3_0/McMurdo/samples.txt') as f:
    for line in f.readlines()[:3]:
        print(line, end="")

tab samples

azimuth azimuth_dec_correction citations description dip geologic_classes geologic_types lat lithologies lon method_codes orientation_quality sample site

260 0 This study Archived samples from 1965, 66 expeditions. -57 Extrusive:Igneous Lava Flow -77.85 Trachyte 166.64 SO-SIGHT:FS-FD g mc01a mc01
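
The layout described above (delimiter and table type on the first line, column names on the second, data after that) can be parsed with nothing but the standard library. The following is a minimal sketch, not the PmagPy reader: the function name `read_magic_table` and the in-memory example table are hypothetical, and the exact whitespace on the first line is an assumption.

```python
import io

# Hypothetical in-memory stand-in for a small MagIC samples table.
example = (
    "tab \tsamples\n"
    "sample\tsite\tazimuth\tdip\n"
    "mc01a\tmc01\t260\t-57\n"
)

def read_magic_table(handle):
    """Return (table_type, list_of_row_dicts) from a MagIC-style text stream."""
    # Line 1: delimiter name and table type, separated by a tab.
    first = handle.readline().rstrip("\n").split("\t")
    delimiter_name, table_type = first[0].strip(), first[1].strip()
    if delimiter_name != "tab":
        raise ValueError("this sketch only handles tab-delimited tables")
    # Line 2: column headers.
    columns = handle.readline().rstrip("\n").split("\t")
    rows = []
    for line in handle:
        line = line.rstrip("\n")
        if not line:
            continue  # skip blank lines
        rows.append(dict(zip(columns, line.split("\t"))))
    return table_type, rows

table_type, rows = read_magic_table(io.StringIO(example))
print(table_type)
print(rows[0])
```

Every value comes back as a string, which is also how pmag.magic_read() returns records later in this notebook.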

Guide to PmagPy#

This notebook is one of a series of notebooks that demonstrate the functionality of PmagPy. The other notebooks are:

PmagPy_introduction.ipynb This notebook introduces PmagPy and lists the functions that are demonstrated in the other notebooks.

PmagPy_calculations.ipynb This notebook demonstrates many of the PmagPy calculation functions such as those that rotate directions, return statistical parameters, and simulate data from specified distributions.

PmagPy_plots_analysis.ipynb This notebook demonstrates PmagPy functions that can be used to visualize data as well as those that conduct statistical tests that have associated visualizations.

Customizing this notebook#

If you are running this notebook on JupyterHub and want to make changes, you should make a copy (see File menu). Otherwise, each time you update PmagPy, your changes will be overwritten.

Get started#

To use the functions in this notebook, we have to import the PmagPy modules pmagplotlib, pmag and ipmag and some other handy functions for use in the notebook. This is done in the following code block which must be executed before running any other code block. To execute, click on the code block and then click on the “Run” button in the menu.

In order to access the example data, this notebook is meant to be run in the PmagPy-data directory (PmagPy directory for developers).

Try it! Run the code block below (click on the cell and then click ‘Run’):

import pmagpy.pmag as pmag

import pmagpy.pmagplotlib as pmagplotlib

import pmagpy.ipmag as ipmag

import pmagpy.contribution_builder as cb

from pmagpy import convert_2_magic as convert

import matplotlib.pyplot as plt # our plotting buddy

import numpy as np # the fabulous NumPy package

import pandas as pd # and of course Pandas

# test if cartopy is installed

has_cartopy, Cartopy = pmag.import_cartopy()

# test if xlwt is installed (allows you to export to excel)

try:
    import xlwt
    has_xlwt = True
except ImportError:
    has_xlwt = False

# This allows you to make matplotlib plots inside the notebook.

%matplotlib inline

from IPython.display import Image

import os # import some operating system utilities

import zipfile as zf # import the zipfile module for unpacking zip files

import shutil as shutil # another useful module with system utilities

import requests, pandas, getpass

api = 'https://api.earthref.org/v1/MagIC/{}'

try:
    import wget
except ImportError:
    # install wget on the fly if it is missing
    !pip install wget

print('All modules imported!')

All modules imported!

Table of contents#

Functions in PmagPy_MagIC.ipynb

reading MagIC files : reading in MagIC formatted files

writing MagIC files : outputting MagIC formatted files

combine_magic : combines two MagIC formatted files of same type

convert_ages : convert ages in downloaded MagIC file to Ma

grab_magic_key : prints out a single column from a MagIC format file

magic_select : selects data from MagIC format file given conditions (e.g., method_codes contain string)

sites_extract : makes excel or latex files from sites.txt for publications

criteria_extract : makes excel or latex files from criteria.txt for publications

specimens_extract : makes excel or latex files from specimens.txt for publications

contributions : work with data model 3.0 MagIC contributions

download_magic : how to download and unpack a contribution text file from the MagIC website

upload_magic : prepares a directory with a MagIC contribution for uploading to MagIC

cb.add_sites_to_meas_table : completes a measurements data frame with the information required for plotting by site.

cb.get_intensity_col : finds the first non-zero type of intensity data in a measurements dataframe.

Interacting with the MagIC database: downloads and uploads MagIC contributions.

conversion scripts : convert many laboratory measurement formats to the MagIC data model 3 format

_2g_asc_magic : converts 2G ascii files to MagIC

_2g_bin_magic : converts 2G binary files to MagIC

agm_magic : converts Princeton Measurements alternating gradient force magnetization (AGM) files to MagIC.

bgc_magic : convert Berkeley Geochronology Center files to MagIC.

cit_magic : convert Cal Tech format files to MagIC.

generic_magic : converts generic files to MagIC.

huji_magic : converts Hebrew University, Jerusalem, Israel files to MagIC.

huji_sample_magic : converts HUJI files to a MagIC format.

iodp_dscr_lore : converts IODP discrete measurement files to MagIC

iodp_jr6_lore : converts IODP JR6 measurement files to MagIC

iodp_samples_csv : converts IODP samples file to MagIC

iodp_srm_lore : converts IODP archive half measurement files to MagIC

jr6_jr6_magic : converts the AGICO JR6 spinner .jr6 files to MagIC

jr6_txt_magic : converts the AGICO JR6 .txt files to MagIC

k15_magic : converts 15 measurement anisotropy of magnetic susceptibility files to MagIC.

kly4s_magic : converts SIO KLY4S formatted files to MagIC.

ldeo_magic : converts Lamont-Doherty files to MagIC.

livdb_magic : converts Liverpool files to MagIC.

mst_magic : converts Curie Temperature experimental data to MagIC

sio_magic : converts Scripps Institution of Oceanography data files to MagIC

sufar4_magic : converts AGICO SUFAR program (ver.1.2.) ascii files to MagIC

tdt_magic : converts Thellier Tool files to MagIC

utrecht_magic : converts Fort Hoofddijk, Utrecht University Robot files to MagIC

orientation_magic : converts an “orient.txt” formatted file with field notebook information into MagIC formatted files

azdip_magic : converts an “azdip” formatted file to a samples.txt file format

other handy scripts

chartmaker : script for making chart to guide IZZI lab experiment

magic_read#

MagIC formatted data files can be imported to a notebook in one of two ways:

importing to a Pandas DataFrame using the Pandas pd.read_csv() function

importing to a list of dictionaries using the pmag.magic_read() function.

In this notebook, we generally read MagIC tables into a Pandas DataFrame with a command like:

meas_df = pd.read_csv('MEASUREMENTS_FILE_PATH',sep='\t',header=1)

These data can then be manipulated with Pandas functions (https://pandas.pydata.org/)

meas_df=pd.read_csv('data_files/3_0/McMurdo/measurements.txt',sep='\t',header=1)

meas_df.head()

| | experiment | specimen | measurement | dir_csd | dir_dec | dir_inc | hyst_charging_mode | hyst_loop | hyst_sweep_rate | treat_ac_field | ... | timestamp | magn_r2_det | magn_x_sigma | magn_xyz_sigma | magn_y_sigma | magn_z_sigma | susc_chi_mass | susc_chi_qdr_mass | susc_chi_qdr_volume | susc_chi_volume |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | mc01f-LP-DIR-AF | mc01f | mc01f-LP-DIR-AF1 | 0.4 | 171.9 | 31.8 | NaN | NaN | NaN | 0.0000 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 1 | mc01f-LP-DIR-AF | mc01f | mc01f-LP-DIR-AF2 | 0.4 | 172.0 | 30.1 | NaN | NaN | NaN | 0.0050 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 2 | mc01f-LP-DIR-AF | mc01f | mc01f-LP-DIR-AF3 | 0.5 | 172.3 | 30.4 | NaN | NaN | NaN | 0.0075 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 3 | mc01f-LP-DIR-AF | mc01f | mc01f-LP-DIR-AF4 | 0.4 | 172.1 | 30.4 | NaN | NaN | NaN | 0.0100 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 4 | mc01f-LP-DIR-AF | mc01f | mc01f-LP-DIR-AF5 | 0.5 | 171.9 | 30.8 | NaN | NaN | NaN | 0.0125 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

5 rows × 60 columns

Alternatively, the user may wish to use a list of dictionaries compatible with many pmag functions. For that, use the pmag.magic_read() function:

help (pmag.magic_read)

Help on function magic_read in module pmagpy.pmag:

magic_read(infile, data=None, return_keys=False, verbose=False)

Reads a Magic template file, returns data in a list of dictionaries.

Parameters

___________

Required:

infile : the MagIC formatted tab delimited data file

first line contains 'tab' in the first column and the data file type in the second (e.g., measurements, specimen, sample, etc.)

Optional:

data : data read in with, e.g., file.readlines()

Returns

_______

list of dictionaries, file type

meas_dict,file_type=pmag.magic_read('data_files/3_0/McMurdo/measurements.txt')

print (file_type)

print (meas_dict[0])

measurements

{'experiment': 'mc01f-LP-DIR-AF', 'specimen': 'mc01f', 'measurement': 'mc01f-LP-DIR-AF1', 'dir_csd': '0.4', 'dir_dec': '171.9', 'dir_inc': '31.8', 'hyst_charging_mode': '', 'hyst_loop': '', 'hyst_sweep_rate': '', 'treat_ac_field': '0.0', 'treat_ac_field_dc_off': '', 'treat_ac_field_dc_on': '', 'treat_ac_field_decay_rate': '', 'treat_dc_field': '0.0', 'treat_dc_field_ac_off': '', 'treat_dc_field_ac_on': '', 'treat_dc_field_decay_rate': '', 'treat_dc_field_phi': '0.0', 'treat_dc_field_theta': '0.0', 'treat_mw_energy': '', 'treat_mw_integral': '', 'treat_mw_power': '', 'treat_mw_time': '', 'treat_step_num': '1', 'treat_temp': '273', 'treat_temp_dc_off': '', 'treat_temp_dc_on': '', 'treat_temp_decay_rate': '', 'magn_mass': '', 'magn_moment': '2.7699999999999996e-05', 'magn_volume': '', 'citations': 'This study', 'instrument_codes': '', 'method_codes': 'LT-NO:LP-DIR-AF', 'quality': 'g', 'standard': 'u', 'meas_field_ac': '', 'meas_field_dc': '', 'meas_freq': '', 'meas_n_orient': '', 'meas_orient_phi': '', 'meas_orient_theta': '', 'meas_pos_x': '', 'meas_pos_y': '', 'meas_pos_z': '', 'meas_temp': '273', 'meas_temp_change': '', 'analysts': 'Jason Steindorf', 'description': '', 'software_packages': 'pmagpy-1.65b', 'timestamp': '', 'magn_r2_det': '', 'magn_x_sigma': '', 'magn_xyz_sigma': '', 'magn_y_sigma': '', 'magn_z_sigma': '', 'susc_chi_mass': '', 'susc_chi_qdr_mass': '', 'susc_chi_qdr_volume': '', 'susc_chi_volume': ''}
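
As the printed record shows, every value in the list of dictionaries is a string, and empty columns are empty strings. Before doing arithmetic, the values must be converted and the blanks skipped. Here is a small sketch of that step; `get_floats` is a hypothetical helper, not a PmagPy function, and the two records are abbreviated stand-ins for the output above.

```python
def get_floats(records, key):
    """Collect float values of `key`, skipping blank or missing entries."""
    values = []
    for rec in records:
        raw = rec.get(key, "")
        if raw == "" or raw is None:
            continue  # blank column: nothing to convert
        values.append(float(raw))
    return values

# Hypothetical records shaped like the dictionary printed above.
records = [
    {"specimen": "mc01f", "dir_dec": "171.9", "dir_inc": "31.8", "magn_mass": ""},
    {"specimen": "mc01f", "dir_dec": "172.0", "dir_inc": "30.1", "magn_mass": ""},
]
decs = get_floats(records, "dir_dec")      # declinations as floats
masses = get_floats(records, "magn_mass")  # empty list: column is blank
print(decs, masses)
```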

pmag.magic_write#

To write a DataFrame out as a MagIC table, set dataframe=True:

help(pmag.magic_write)

Help on function magic_write in module pmagpy.pmag:

magic_write(ofile, Recs, file_type, dataframe=False)

Parameters

_________

ofile : path to output file

Recs : list of dictionaries in MagIC format

file_type : MagIC table type (e.g., specimens)

dataframe : boolean

if True, Recs is a pandas dataframe which must be converted

to a list of dictionaries

Return :

[True,False] : True if successful

ofile : same as input

Effects :

writes a MagIC formatted file from Recs

pmag.magic_write('my_measurements.txt', meas_df, 'measurements',dataframe=True)

25470 records written to file my_measurements.txt

(True, 'my_measurements.txt')

For a list of dictionaries, use this syntax:

pmag.magic_write('my_measurements.txt', meas_dict, 'measurements')

25470 records written to file my_measurements.txt

(True, 'my_measurements.txt')
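
To make the file layout concrete, here is a rough sketch of writing a list of dictionaries in the same two-header-line format. It is not how pmag.magic_write() is implemented: `write_magic_table` is a hypothetical name, the first-line spelling `tab \t<type>` is an assumption, and the sketch assumes every record has the same keys as the first one.

```python
import io

def write_magic_table(handle, records, table_type):
    """Write records (list of dicts) as a tab-delimited, MagIC-style table."""
    columns = list(records[0].keys())  # assumes uniform keys across records
    handle.write("tab \t" + table_type + "\n")   # line 1: delimiter and type
    handle.write("\t".join(columns) + "\n")      # line 2: column headers
    for rec in records:
        handle.write("\t".join(str(rec.get(col, "")) for col in columns) + "\n")
    return len(records)

# Hypothetical records; a StringIO buffer stands in for an output file.
recs = [
    {"specimen": "mc01f", "dir_dec": "171.9"},
    {"specimen": "mc01f", "dir_dec": "172.0"},
]
buffer = io.StringIO()
n_written = write_magic_table(buffer, recs, "measurements")
print(buffer.getvalue())
```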

combine_magic#

[MagIC Database] [command line version]

MagIC tables have many columns, only some of which are used in a particular instance. So combining files of the same type must be done carefully to ensure that the right data come under the right headings. The program combine_magic can be used to combine any number of MagIC files of a given type.

It reads in MagIC formatted files of a common type (e.g., sites.txt) and combines them into a single file, taking care that all the columns are preserved. For example, if there are both AF and thermal data from a study and we created a measurements.txt formatted file for each, we could use combine_magic.py on the command line to combine them together into a single measurements.txt file. In a notebook, we use ipmag.combine_magic().

help(ipmag.combine_magic)

Help on function combine_magic in module pmagpy.ipmag:

combine_magic(filenames, outfile='measurements.txt', data_model=3, magic_table='measurements', dir_path='.', input_dir_path='')

Takes a list of magic-formatted files, concatenates them, and creates a

single file. Returns output filename if the operation was successful.

Parameters

-----------

filenames : list of MagIC formatted files

outfile : name of output file [e.g., measurements.txt]

data_model : data model number (2.5 or 3), default 3

magic_table : name of magic table, default 'measurements'

dir_path : str

output directory, default "."

input_dir_path : str

input file directory (if different from dir_path), default ""

Returns

----------

outfile name if success, False if failure

Here we make a list of names of two MagIC formatted measurements.txt files and use ipmag.combine_magic() to put them together.

filenames=['data_files/combine_magic/af_measurements.txt','data_files/combine_magic/therm_measurements.txt']

outfile='data_files/combine_magic/measurements.txt'

ipmag.combine_magic(filenames,outfile)

-I- Using online data model

-I- Getting method codes from earthref.org

-I- Importing controlled vocabularies from https://earthref.org

-I- overwriting /Users/nebula/Python/PmagPy/data_files/combine_magic/measurements.txt

-I- 14 records written to measurements file

'/Users/nebula/Python/PmagPy/data_files/combine_magic/measurements.txt'
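
The key idea when combining tables is that the output needs the union of all columns, with blanks wherever a record has no value for a column. The sketch below illustrates that idea on lists of dictionaries. It is not the ipmag.combine_magic() implementation; `combine_records` and the two one-record tables are hypothetical.

```python
def combine_records(*tables):
    """Merge lists of dicts, giving every record the union of all columns."""
    columns = []
    for table in tables:
        for rec in table:
            for key in rec:
                if key not in columns:
                    columns.append(key)  # keep first-seen column order
    combined = []
    for table in tables:
        for rec in table:
            # fill columns the record lacks with a blank string
            combined.append({col: rec.get(col, "") for col in columns})
    return columns, combined

# Hypothetical AF and thermal measurement records with different columns.
af = [{"specimen": "s1", "treat_ac_field": "0.01", "dir_dec": "10.0"}]
thermal = [{"specimen": "s1", "treat_temp": "473", "dir_dec": "12.0"}]
columns, combined = combine_records(af, thermal)
print(columns)
print(combined)
```

Each declination stays under dir_dec, while treat_ac_field and treat_temp are blank where they do not apply.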

convert_ages#

Files downloaded from the MagIC search interface have ages that are in the original units, but what is often desired is for them to be in a single unit. For example, if we searched the MagIC database for all absolute paleointensity data (records with method codes of ‘LP-PI-TRM’) from the last five million years, the data sets have a variety of age units. We can use pmag.convert_ages() to convert them all to millions of years.

First we follow the instructions for unpacking downloaded files in download_magic.

ipmag.download_magic('magic_downloaded_rows.txt',dir_path='data_files/convert_ages/',
                     input_dir_path='data_files/convert_ages/')

working on: 'contribution'

1 records written to file /Users/nebula/Python/PmagPy/data_files/convert_ages/contribution.txt

contribution data put in /Users/nebula/Python/PmagPy/data_files/convert_ages/contribution.txt

working on: 'sites'

14317 records written to file /Users/nebula/Python/PmagPy/data_files/convert_ages/sites.txt

sites data put in /Users/nebula/Python/PmagPy/data_files/convert_ages/sites.txt

True

After some minimal filtering using Pandas, we can convert a DataFrame to a list of dictionaries required by most PmagPy functions and use pmag.convert_ages() to convert all the ages. The converted list of dictionaries can then be turned back into a Pandas DataFrame and either plotted or filtered further as desired.

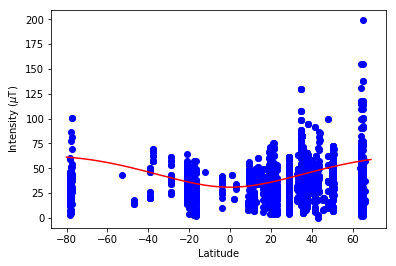

In this example, we filter for data older than 0.05 Ma and younger than 5 Ma, then plot them against latitude. We can also use pmag.vdm_b() to plot the intensities expected from the present dipole moment (~80 ZAm\(^2\)).

help(pmag.convert_ages)

Help on function convert_ages in module pmagpy.pmag:

convert_ages(Recs, data_model=3)

converts ages to Ma

Parameters

_________

Recs : list of dictionaries in data model by data_model

data_model : MagIC data model (default is 3)

# read in the sites.txt file as a dataframe

site_df=pd.read_csv('data_files/convert_ages/sites.txt',sep='\t',header=1)

# get rid of any records without intensity data or latitude

site_df=site_df.dropna(subset=['int_abs','lat'])

# Pick out the sites with 'age' filled in

site_df_age=site_df.dropna(subset=['age'])

# pick out those with age_low and age_high filled in

site_df_lowhigh=site_df.dropna(subset=['age_low','age_high'])

# concatenate the two

site_all_ages=pd.concat([site_df_age,site_df_lowhigh])

# get rid of duplicates (records with age, age_high AND age_low)

site_all_ages.drop_duplicates(inplace=True)

# Pandas reads in blanks as NaN, which pmag.convert_ages hates

# this replaces all the NaNs with blanks

site_all_ages.fillna('',inplace=True)

# converts to a list of dictionaries

sites=site_all_ages.to_dict('records')

# converts the ages to Ma

converted_df=pmag.convert_ages(sites)

# turn it back into a DataFrame

site_ages=pd.DataFrame(converted_df)

# keep only the sites with ages between 0.05 and 5 Ma

site_ages=site_ages[site_ages.age.astype(float) <= 5]

site_ages=site_ages[site_ages.age.astype(float) >=0.05]
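
To show what converting ages to a single unit involves, here is a simplified sketch that scales an age by a factor chosen from its unit. It does not reproduce the logic of pmag.convert_ages(): the `age_unit` column name and the unit labels are assumptions modeled on MagIC's vocabulary, calendar-year units are left out, and `age_in_ma` is a hypothetical helper.

```python
# Multipliers that turn each unit into millions of years (Ma).
# The unit labels are assumptions; "Years BP" ignores the 1950 datum.
TO_MA = {"Ga": 1e3, "Ma": 1.0, "Ka": 1e-3, "Years BP": 1e-6}

def age_in_ma(record):
    """Return the record's age in Ma, or None if it cannot be converted."""
    age, unit = record.get("age", ""), record.get("age_unit", "")
    if age == "" or unit not in TO_MA:
        return None  # blank age or a unit this sketch does not handle
    return float(age) * TO_MA[unit]

# Hypothetical site records with mixed units and one blank age.
sites_example = [
    {"site": "a", "age": "780", "age_unit": "Ka"},
    {"site": "b", "age": "2.5", "age_unit": "Ma"},
    {"site": "c", "age": "", "age_unit": "Ma"},
]
ages_ma = [age_in_ma(rec) for rec in sites_example]
print(ages_ma)
```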

Let’s plot them up and see what we get.

plt.plot(site_ages.lat,site_ages.int_abs*1e6,'bo')

# put on the expected values for the present dipole moment (~80 ZAm^2)

lats=np.arange(-80,70,1)

vdms=80e21*np.ones(len(lats))

bs=pmag.vdm_b(vdms,lats)*1e6

plt.plot(lats,bs,'r-')

plt.xlabel('Latitude')

plt.ylabel(r'Intensity ($\mu$T)')

plt.show()

That is pretty awful agreement. Someday we need to figure out what is wrong with the data or our GAD hypothesis.
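
For reference, the field strength expected at latitude \(\lambda\) from a geocentric axial dipole of moment \(m\) is \(B = \frac{\mu_0 m}{4\pi r^3}\sqrt{1+3\sin^2\lambda}\). The sketch below evaluates this standard formula directly. It is an independent illustration, not the source of pmag.vdm_b(); `dipole_field` is a hypothetical name and the Earth radius is an assumed mean value.

```python
import math

MU0_OVER_4PI = 1e-7      # T m / A
EARTH_RADIUS = 6.371e6   # m, mean radius (assumed value)

def dipole_field(moment, lat_deg, radius=EARTH_RADIUS):
    """Field strength (tesla) at latitude lat_deg for a geocentric axial dipole."""
    lat = math.radians(lat_deg)
    return (MU0_OVER_4PI * moment / radius**3
            * math.sqrt(1.0 + 3.0 * math.sin(lat)**2))

# Field in microtesla for an 80 ZAm^2 dipole at the equator and at the pole.
equator = dipole_field(80e21, 0.0) * 1e6
pole = dipole_field(80e21, 90.0) * 1e6
print(round(equator, 1), round(pole, 1))
```

The polar field is twice the equatorial field, which is why the expected curve rises toward high latitudes.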

grab_magic_key#

[MagIC Database] [command line version]

Sometimes you want to read in a MagIC file and print out the desired key. Pandas makes this easy! In this example, we will print out latitudes for each site record.

sites=pd.read_csv('data_files/download_magic/sites.txt',sep='\t',header=1)

print (sites.lat)

0 33.067800

1 33.067460

2 33.044630

3 33.043960

4 33.044170

...

86 32.848164

87 32.848164

88 32.848164

89 32.848164

90 32.848164

Name: lat, Length: 91, dtype: float64

magic_select#

[MagIC Database] [command line version]

This example demonstrates how to select MagIC records that meet a certain criterion, like having a particular method code.

Note: to output into a MagIC formatted file, we can change the DataFrame to a list of dictionaries (with df.to_dict(“records”)) and use pmag.magic_write().

help(pmag.magic_write)

Help on function magic_write in module pmagpy.pmag:

magic_write(ofile, Recs, file_type)

Parameters

_________

ofile : path to output file

Recs : list of dictionaries in MagIC format

file_type : MagIC table type (e.g., specimens)

Return :

[True,False] : True if successful

ofile : same as input

Effects :

writes a MagIC formatted file from Recs

# read in the data file

spec_df=pd.read_csv('data_files/magic_select/specimens.txt',sep='\t',header=1)

# pick out the desired data

method_key='method_codes' # column holding the method codes in data model 3
# keep rows whose method codes contain LP-DIR-AF; blank entries count as no match
spec_df=spec_df[spec_df[method_key].str.contains('LP-DIR-AF',na=False)]
specs=spec_df.to_dict('records') # export to list of dictionaries
success,ofile=pmag.magic_write('data_files/magic_select/AF_specimens.txt',specs,'specimens') # 'specimens' is the table type in data model 3.0

76 records written to file data_files/magic_select/AF_specimens.txt
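
Method codes are stored as a colon-delimited string (e.g., 'LT-NO:LP-DIR-AF'), so a substring test also matches any longer code that happens to contain the search string. When an exact match is needed, the string can be split first. A minimal sketch on a list of dictionaries follows; `has_method_code` and the three records are hypothetical.

```python
def has_method_code(record, code, key="method_codes"):
    """True if `code` is one of the colon-separated entries in record[key]."""
    codes = [c.strip() for c in str(record.get(key, "")).split(":")]
    return code in codes

# Hypothetical specimen records.
specimens = [
    {"specimen": "a", "method_codes": "LP-DIR-AF:DE-BFL"},
    {"specimen": "b", "method_codes": "LP-DIR-T:DE-BFL"},
    {"specimen": "c", "method_codes": ""},
]
af_specimens = [rec for rec in specimens if has_method_code(rec, "LP-DIR-AF")]
print([rec["specimen"] for rec in af_specimens])
```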

sites_extract#

It is frequently desirable to format tables for publications from the MagIC formatted files. This example is for the sites.txt formatted file. It will create a site information table with the location and age information, and directions and/or intensity summary tables. The function to call is ipmag.sites_extract().

help(ipmag.sites_extract)

Help on function sites_extract in module pmagpy.ipmag:

sites_extract(site_file='sites.txt', directions_file='directions.xls', intensity_file='intensity.xls', info_file='site_info.xls', output_dir_path='.', input_dir_path='', latex=False)

Extracts directional and/or intensity data from a MagIC 3.0 format sites.txt file.

Default output format is an Excel file.

Optional latex format longtable file which can be uploaded to Overleaf or

typeset with latex on your own computer.

Parameters

___________

site_file : str

input file name

directions_file : str

output file name for directional data

intensity_file : str

output file name for intensity data

site_info : str

output file name for site information (lat, lon, location, age....)

output_dir_path : str

path for output files

input_dir_path : str

path for intput file if different from output_dir_path (default is same)

latex : boolean

if True, output file should be latex formatted table with a .tex ending

Return :

[True,False], error type : True if successful

Effects :

writes Excel or LaTeX formatted tables for use in publications

Here is an example of how to create LaTeX files:

#latex way:

ipmag.sites_extract(directions_file='directions.tex',intensity_file='intensities.tex',
                    output_dir_path='data_files/3_0/McMurdo',info_file='site_info.tex',latex=True)

(True,

['/Users/nebula/Python/PmagPy/data_files/3_0/McMurdo/site_info.tex',

'/Users/nebula/Python/PmagPy/data_files/3_0/McMurdo/intensities.tex',

'/Users/nebula/Python/PmagPy/data_files/3_0/McMurdo/directions.tex'])

And here is how to create Excel files:

#xls way:

if has_xlwt:
    print(ipmag.sites_extract(output_dir_path='data_files/3_0/McMurdo'))

(True, ['/Users/nebula/Python/PmagPy/data_files/3_0/McMurdo/site_info.xls', '/Users/nebula/Python/PmagPy/data_files/3_0/McMurdo/intensity.xls', '/Users/nebula/Python/PmagPy/data_files/3_0/McMurdo/directions.xls'])
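
For a sense of what a LaTeX export involves, here is a bare-bones sketch that turns a list of dictionaries into a tabular environment. It does not reproduce the tables that ipmag.sites_extract() writes (which use longtable and selected columns); `latex_table` is a hypothetical helper, and the single record reuses the site, latitude and longitude shown in the samples.txt example earlier.

```python
def latex_table(records, columns):
    """Return a LaTeX tabular environment (as a string) for the chosen columns."""
    def esc(text):
        # underscores are common in MagIC column names and must be escaped
        return str(text).replace("_", r"\_")
    lines = [r"\begin{tabular}{" + "l" * len(columns) + "}"]
    lines.append(" & ".join(esc(c) for c in columns) + r" \\")
    lines.append(r"\hline")
    for rec in records:
        lines.append(" & ".join(esc(rec.get(c, "")) for c in columns) + r" \\")
    lines.append(r"\end{tabular}")
    return "\n".join(lines)

site_rows = [{"site": "mc01", "lat": "-77.85", "lon": "166.64"}]
table = latex_table(site_rows, ["site", "lat", "lon"])
print(table)
```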

criteria_extract#

This example is for the criteria.txt formatted file. It will create a criteria table suitable for publication in either LaTeX or Excel format. The function to call is ipmag.criteria_extract().

help(ipmag.criteria_extract)

Help on function criteria_extract in module pmagpy.ipmag:

criteria_extract(crit_file='criteria.txt', output_file='criteria.xls', output_dir_path='.', input_dir_path='', latex=False)

Extracts criteria from a MagIC 3.0 format criteria.txt file.

Default output format is an Excel file.

typeset with latex on your own computer.

Parameters

___________

crit_file : str, default "criteria.txt"

input file name

output_file : str, default "criteria.xls"

output file name

output_dir_path : str, default "."

output file directory

input_dir_path : str, default ""

path for intput file if different from output_dir_path (default is same)

latex : boolean, default False

if True, output file should be latex formatted table with a .tex ending

Return :

[True,False], data table error type : True if successful

Effects :

writes xls or latex formatted tables for use in publications

# latex way:

ipmag.criteria_extract(output_dir_path='data_files/3_0/Megiddo',
                       latex=True,output_file='criteria.tex',)

(True, ['/Users/nebula/Python/PmagPy/data_files/3_0/Megiddo/criteria.tex'])

#xls way:

if has_xlwt:
    print(ipmag.criteria_extract(output_dir_path='data_files/3_0/Megiddo'))

(True, ['/Users/nebula/Python/PmagPy/data_files/3_0/Megiddo/criteria.xls'])

specimens_extract#

Similarly, it is useful to make tables for specimen (intensity) data to include in publications. Here are examples using a specimens.txt file.

help(ipmag.specimens_extract)

Help on function specimens_extract in module pmagpy.ipmag:

specimens_extract(spec_file='specimens.txt', output_file='specimens.xls', landscape=False, longtable=False, output_dir_path='.', input_dir_path='', latex=False)

Extracts specimen results from a MagIC 3.0 format specimens.txt file.

Default output format is an Excel file.

typeset with latex on your own computer.

Parameters

___________

spec_file : str, default "specimens.txt"

input file name

output_file : str, default "specimens.xls"

output file name

landscape : boolean, default False

if True output latex landscape table

longtable : boolean

if True output latex longtable

output_dir_path : str, default "."

output file directory

input_dir_path : str, default ""

path for intput file if different from output_dir_path (default is same)

latex : boolean, default False

if True, output file should be latex formatted table with a .tex ending

Return :

[True,False], data table error type : True if successful

Effects :

writes xls or latex formatted tables for use in publications

#latex way:

ipmag.specimens_extract(output_file='specimens.tex',landscape=True,
                        output_dir_path='data_files/3_0/Megiddo',latex=True,longtable=True)

(True, ['/Users/nebula/Python/PmagPy/data_files/3_0/Megiddo/specimens.tex'])

#xls way:

if has_xlwt:
    print(ipmag.specimens_extract(output_dir_path='data_files/3_0/Megiddo'))

(True, ['/Users/nebula/Python/PmagPy/data_files/3_0/Megiddo/specimens.xls'])

Contributions#

Here are some useful functions for working with MagIC data model 3.0 contributions.

download_magic#

[MagIC Database] [command line version]

Working with a public contribution:#

The functions ipmag.download_magic_from_id() and ipmag.download_magic_from_doi() download contribution files from the MagIC website, and ipmag.download_magic() unpacks the downloaded .txt file into individual text files. ipmag.download_magic() also has an option to separate the contribution into a folder for each location.

As an example, download the contribution with the DOI 10.1029/2019GC008479. Make a folder and put the downloaded text file (called “magic_contribution.txt”) into it. Then use ipmag.download_magic() to unpack the file.

help(ipmag.download_magic_from_doi)

Help on function download_magic_from_doi in module pmagpy.ipmag:

download_magic_from_doi(doi)

Download a public contribution matching the provided DOI

from earthref.org/MagIC.

Parameters

----------

doi : str

DOI for a MagIC

Returns

---------

result : bool

message : str

Error message if download didn't succeed

help(ipmag.download_magic)

Help on function download_magic in module pmagpy.ipmag:

download_magic(infile=None, dir_path='.', input_dir_path='', overwrite=False, print_progress=True, data_model=3.0, separate_locs=False, txt='', excel=False)

takes the name of a text file downloaded from the MagIC database and

unpacks it into magic-formatted files. by default, download_magic assumes

that you are doing everything in your current directory. if not, you may

provide optional arguments dir_path (where you want the results to go) and

input_dir_path (where the downloaded file is IF that location is different from

dir_path).

Parameters

----------

infile : str

MagIC-format file to unpack

dir_path : str

output directory (default ".")

input_dir_path : str, default ""

path for intput file if different from output_dir_path (default is same)

overwrite: bool

overwrite current directory (default False)

print_progress: bool

verbose output (default True)

data_model : float

MagIC data model 2.5 or 3 (default 3)

separate_locs : bool

create a separate directory for each location (Location_*)

(default False)

txt : str, default ""

if infile is not provided, you may provide a string with file contents instead

(useful for downloading MagIC file directly from earthref)

excel : bool

input file is an excel spreadsheet (as downloaded from MagIC)

And here we go…

dir_path='data_files/download_magic' # set the path to the correct working directory

reference_doi='10.1029/2019GC008479' # set the reference DOI

magic_contribution='magic_contribution.txt' # default filename for downloaded file

ipmag.download_magic_from_doi(reference_doi)

os.rename(magic_contribution, dir_path+'/'+magic_contribution)

ipmag.download_magic(magic_contribution,dir_path=dir_path,print_progress=False)

16961/magic_contribution_16961.txt extracted to magic_contribution.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/contribution.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/locations.txt

91 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/sites.txt

611 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/samples.txt

676 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/specimens.txt

6297 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/measurements.txt

2 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/criteria.txt

91 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/ages.txt

True

help(ipmag.download_magic_from_id)

Help on function download_magic_from_id in module pmagpy.ipmag:

download_magic_from_id(con_id)

Download a public contribution matching the provided

contribution ID from earthref.org/MagIC.

Parameters

----------

doi : str

DOI for a MagIC

Returns

---------

result : bool

message : str

Error message if download didn't succeed

dir_path='data_files/download_magic' # set the path to the correct working directory

magic_id='16961' # set the magic ID number

magic_contribution='magic_contribution_'+magic_id+'.txt' # set the file name string

ipmag.download_magic_from_id(magic_id) # download the contribution from MagIC

os.rename(magic_contribution, dir_path+'/'+magic_contribution) # move the contribution to the directory

ipmag.download_magic(magic_contribution,dir_path=dir_path,print_progress=False) # unpack the file

1 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/contribution.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/locations.txt

91 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/sites.txt

611 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/samples.txt

676 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/specimens.txt

6297 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/measurements.txt

2 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/criteria.txt

91 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/ages.txt

True

You could look at these data with dmag_magic for example… (see the PmagPy_plots_analysis notebook).

Working with a private contribution#

Private contributions are data sets within MagIC that have been uploaded but are not yet attached to a peer-reviewed publication. The purpose is to allow reviewers and publishers to check the data quality.

A private contribution can be downloaded with the ‘private key’ associated with its MagIC contribution ID number.

We can download, unpack and examine the contribution if the contribution_id and private_key are known. The URL for the api is also required.

For this example, we can use the contribution prepared for MagIC in the upload_magic example below and saved as a private contribution.

api = 'https://api.earthref.org/v1/MagIC/{}'
contribution_id = 16960
private_key = 'fa06255d-ec45-473f-9f33-fa2a9bb6d8d8'
dir_path='data_files/download_magic'
# request the contribution text from the MagIC API
shared_contribution_response = requests.get(api.format('data'), params={'id': contribution_id, 'key': private_key})
if (shared_contribution_response.status_code == 200):
    shared_contribution_text = shared_contribution_response.text
    print(shared_contribution_text[0:200], '\n')
elif (shared_contribution_response.status_code == 204): # bad file
    print('Contribution ID and/or private key do not match any contributions in MagIC.', '\n')
else:
    print('Error:', shared_contribution_response.json()['err'][0]['message'], '\n')
# save and unpack downloaded data
magic_contribution='magic_contribution_'+str(contribution_id)+'.txt'
# the with block closes the file so it is fully written before unpacking
with open(dir_path+'/'+magic_contribution, 'w', errors="backslashreplace") as magic_out:
    magic_out.write(shared_contribution_text)
ipmag.download_magic(magic_contribution,dir_path=dir_path,print_progress=False) # unpack the file

tab delimited contribution

id timestamp contributor data_model_version reference

16960 2019-08-28T20:20:19.374Z @ltauxe 3.0 10.1029/2019GC008479

>>>>>>>>>>

tab delimited locations

location location_ty

1 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/contribution.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/locations.txt

91 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/sites.txt

611 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/samples.txt

676 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/specimens.txt

6297 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/measurements.txt

2 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/criteria.txt

91 records written to file /Users/ltauxe/PmagPy/data_files/download_magic/ages.txt

True

upload_magic#

We can now turn around and try to upload the file downloaded in download_magic. For this we use ipmag.upload_magic() in the same directory as for the download. You can try to upload the file you create to the MagIC database as a private contribution here: https://www2.earthref.org/MagIC/upload

help(ipmag.upload_magic)

Help on function upload_magic in module pmagpy.ipmag:

upload_magic(concat=False, dir_path='.', dmodel=None, vocab='', contribution=None, input_dir_path='')

Finds all magic files in a given directory, and compiles them into an

upload.txt file which can be uploaded into the MagIC database.

Parameters

----------

concat : boolean where True means do concatenate to upload.txt file in dir_path,

False means write a new file (default is False)

dir_path : string for input/output directory (default ".")

dmodel : pmagpy data_model.DataModel object,

if not provided will be created (default None)

vocab : pmagpy controlled_vocabularies3.Vocabulary object,

if not provided will be created (default None)

contribution : pmagpy contribution_builder.Contribution object, if not provided will be created

in directory (default None)

input_dir_path : str, default ""

path for intput files if different from output dir_path (default is same)

Returns

----------

tuple of either: (False, error_message, errors, all_failing_items)

if there was a problem creating/validating the upload file

or: (filename, '', None, None) if the file creation was fully successful.

ipmag.upload_magic(dir_path='data_files/download_magic',concat=True)

-I- Removing old error files from /Users/ltauxe/PmagPy/data_files/download_magic: locations_errors.txt, samples_errors.txt, specimens_errors.txt, sites_errors.txt, ages_errors.txt, measurements_errors.txt, criteria_errors.txt, contribution_errors.txt, images_errors.txt

-W- Column 'core_depth' isn't in samples table, skipping it

-W- Column 'composite_depth' isn't in samples table, skipping it

-W- Invalid or missing column names, could not propagate columns

-I- ages file successfully read in

-I- Validating ages

-I- No row errors found!

-I- appending ages data to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- 91 records written to ages file

-I- ages written to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- contribution file successfully read in

-I- Validating contribution

-I- No row errors found!

-I- appending contribution data to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- 1 records written to contribution file

-I- contribution written to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- criteria file successfully read in

-I- Validating criteria

-I- No row errors found!

-I- appending criteria data to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- 2 records written to criteria file

-I- criteria written to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- locations file successfully read in

-I- Validating locations

-I- No row errors found!

-I- appending locations data to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- 1 records written to locations file

-I- locations written to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- measurements file successfully read in

-I- Validating measurements

-I- No row errors found!

-I- appending measurements data to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- 6297 records written to measurements file

-I- measurements written to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- samples file successfully read in

-I- Validating samples

-I- No row errors found!

-I- appending samples data to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- 611 records written to samples file

-I- samples written to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- sites file successfully read in

-I- Validating sites

-I- No row errors found!

-I- appending sites data to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- 91 records written to sites file

-I- sites written to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- specimens file successfully read in

-I- Validating specimens

-I- No row errors found!

-I- appending specimens data to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

-I- 676 records written to specimens file

-I- specimens written to /Users/ltauxe/PmagPy/data_files/download_magic/upload.txt

Finished preparing upload file: /Users/ltauxe/PmagPy/data_files/download_magic/Golan-Heights_04.Sep.2020.txt

-I- Your file has passed validation. You should be able to upload it to the MagIC database without trouble!

('/Users/ltauxe/PmagPy/data_files/download_magic/Golan-Heights_04.Sep.2020.txt',

'',

None,

None)
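
The return value shown above follows the tuple shapes documented in the help text. As a minimal sketch of how that tuple might be handled, the helper below (summarize_upload_result is a hypothetical name, not part of PmagPy) distinguishes the two documented cases. The failure tuple is made up for illustration.

```python
def summarize_upload_result(result):
    """Turn the 4-tuple documented for ipmag.upload_magic into a short message."""
    first, error_message, errors, all_failing_items = result
    if first is False:
        # documented failure shape: (False, error_message, errors, all_failing_items)
        return "upload file not created: " + str(error_message)
    # documented success shape: (filename, '', None, None)
    return "upload file ready: " + str(first)


# tuple shaped like the successful result shown above (path shortened)
ok = ("Golan-Heights_04.Sep.2020.txt", "", None, None)
# hypothetical failure tuple, invented for this example
bad = (False, "validation failed", {"sites": ["hypothetical row error"]}, None)

print(summarize_upload_result(ok))
print(summarize_upload_result(bad))
```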

If this were your own study, you could now go to https://earthref.org/MagIC and upload your contribution to a Private Workspace, validate, assign a DOI and activate!

cb.add_sites_to_meas_table#

MagIC Data Model 3.0 removed redundant columns from the MagIC tables, so the measurements table names only the specimen; the sample, site and location that each specimen belongs to must be looked up in the other tables. To put the sample and site names back into the measurements table, we have the function cb.add_sites_to_meas_table(), which is handy when data analysis requires them.

help(cb.add_sites_to_meas_table)

Help on function add_sites_to_meas_table in module pmagpy.contribution_builder:

add_sites_to_meas_table(dir_path)

Add site columns to measurements table (e.g., to plot intensity data),

or generate an informative error message.

Parameters

----------

dir_path : str

directory with data files

Returns

----------

status : bool

True if successful, else False

data : pandas DataFrame

measurement data with site/sample

status,meas_df=cb.add_sites_to_meas_table('data_files/3_0/McMurdo')

meas_df.columns

Index(['experiment', 'specimen', 'measurement', 'dir_csd', 'dir_dec',

'dir_inc', 'hyst_charging_mode', 'hyst_loop', 'hyst_sweep_rate',

'treat_ac_field', 'treat_ac_field_dc_off', 'treat_ac_field_dc_on',

'treat_ac_field_decay_rate', 'treat_dc_field', 'treat_dc_field_ac_off',

'treat_dc_field_ac_on', 'treat_dc_field_decay_rate',

'treat_dc_field_phi', 'treat_dc_field_theta', 'treat_mw_energy',

'treat_mw_integral', 'treat_mw_power', 'treat_mw_time',

'treat_step_num', 'treat_temp', 'treat_temp_dc_off', 'treat_temp_dc_on',

'treat_temp_decay_rate', 'magn_mass', 'magn_moment', 'magn_volume',

'citations', 'instrument_codes', 'method_codes', 'quality', 'standard',

'meas_field_ac', 'meas_field_dc', 'meas_freq', 'meas_n_orient',

'meas_orient_phi', 'meas_orient_theta', 'meas_pos_x', 'meas_pos_y',

'meas_pos_z', 'meas_temp', 'meas_temp_change', 'analysts',

'description', 'software_packages', 'timestamp', 'magn_r2_det',

'magn_x_sigma', 'magn_xyz_sigma', 'magn_y_sigma', 'magn_z_sigma',

'susc_chi_mass', 'susc_chi_qdr_mass', 'susc_chi_qdr_volume',

'susc_chi_volume', 'sequence', 'sample', 'site'],

dtype='object')
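
The 'sample' and 'site' columns at the end of the list above come from following the specimen to sample to site hierarchy. The sketch below illustrates that idea with two pandas merges on small made-up tables. It is an illustration of the lookup only, not PmagPy's implementation, and the specimen names are invented.

```python
import pandas as pd

# toy tables: real MagIC tables have many more columns
meas = pd.DataFrame({"specimen": ["mc01a1", "mc01a1", "mc02b1"],
                     "dir_dec": [10.0, 12.0, 200.0]})
specimens = pd.DataFrame({"specimen": ["mc01a1", "mc02b1"],
                          "sample": ["mc01a", "mc02b"]})
samples = pd.DataFrame({"sample": ["mc01a", "mc02b"],
                        "site": ["mc01", "mc02"]})

# specimen -> sample, then sample -> site; left merges keep every measurement row
with_sample = meas.merge(specimens, on="specimen", how="left")
with_site = with_sample.merge(samples, on="sample", how="left")

print(with_site)
```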

cb.get_intensity_col#

The MagIC data model has several different forms of magnetization with different normalizations (moment, volume, or mass). To find the one used in a particular measurements table, we can use the function cb.get_intensity_col().

help(cb.get_intensity_col)

Help on function get_intensity_col in module pmagpy.contribution_builder:

get_intensity_col(data)

Check measurement dataframe for intensity columns 'magn_moment', 'magn_volume', 'magn_mass','magn_uncal'.

Return the first intensity column that is in the dataframe AND has data.

Parameters

----------

data : pandas DataFrame

Returns

---------

str

intensity method column or ""

magn_col=cb.get_intensity_col(meas_df)

print (magn_col)

magn_moment
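
The rule stated in the help text (return the first listed column that is present and has data) can be sketched in a few lines of pandas. The function first_intensity_col below is a hypothetical stand-in written for this example, and it assumes "has data" means at least one non-null value; the PmagPy function may apply a different test.

```python
import pandas as pd

# column names in the order given in the help text
INTENSITY_COLS = ["magn_moment", "magn_volume", "magn_mass", "magn_uncal"]


def first_intensity_col(df):
    """Return the first intensity column present in df with a non-null value, else ''."""
    for col in INTENSITY_COLS:
        if col in df.columns and df[col].notna().any():
            return col
    return ""


# toy table: magn_moment exists but is empty, magn_mass holds values
toy = pd.DataFrame({"magn_moment": [None, None],
                    "magn_mass": [1.2e-5, 3.4e-5]})
print(first_intensity_col(toy))
```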

Interacting with the MagIC database#

Downloading contributions from the MagIC database

Contributions can be downloaded from the MagIC database in two ways, using either the contribution ID or the publication’s DOI.

Downloading using the contribution ID: for this we use the function ipmag.download_magic_from_id().

help(ipmag.download_magic_from_id)

Help on function download_magic_from_id in module pmagpy.ipmag:

download_magic_from_id(con_id)

Download a public contribution matching the provided

contribution ID from earthref.org/MagIC.

Parameters

----------

doi : str

DOI for a MagIC

Returns

---------

result : bool

message : str

Error message if download didn't succeed

magic_id='16676' # set the magic ID number

magic_contribution='magic_contribution_'+magic_id+'.txt' # set the file name string

ipmag.download_magic_from_id(magic_id) # download the contribution from MagIC

(True, 'magic_contribution_16676 (1).txt')

Then we can unpack it with ipmag.download_magic() as above.

ipmag.download_magic(magic_contribution,print_progress=False) # unpack the file

1 records written to file /Users/ltauxe/PmagPy/contribution.txt

1 records written to file /Users/ltauxe/PmagPy/locations.txt

91 records written to file /Users/ltauxe/PmagPy/sites.txt

611 records written to file /Users/ltauxe/PmagPy/samples.txt

676 records written to file /Users/ltauxe/PmagPy/specimens.txt

6297 records written to file /Users/ltauxe/PmagPy/measurements.txt

1 records written to file /Users/ltauxe/PmagPy/criteria.txt

91 records written to file /Users/ltauxe/PmagPy/ages.txt

True

Downloading using the publication DOI: for this we use the function ipmag.download_magic_from_doi().

help(ipmag.download_magic_from_doi)

Help on function download_magic_from_doi in module pmagpy.ipmag:

download_magic_from_doi(doi)

Download a public contribution matching the provided DOI

from earthref.org/MagIC.

Parameters

----------

doi : str

DOI for a MagIC

Returns

---------

result : bool

message : str

Error message if download didn't succeed

reference_doi='10.1029/2019GC008479'

magic_contribution='magic_contribution.txt'

ipmag.download_magic_from_doi(reference_doi)

ipmag.download_magic(magic_contribution,print_progress=False)

16961/magic_contribution_16961.txt extracted to magic_contribution.txt

1 records written to file /Users/ltauxe/PmagPy/contribution.txt

1 records written to file /Users/ltauxe/PmagPy/locations.txt

91 records written to file /Users/ltauxe/PmagPy/sites.txt

611 records written to file /Users/ltauxe/PmagPy/samples.txt

676 records written to file /Users/ltauxe/PmagPy/specimens.txt

6297 records written to file /Users/ltauxe/PmagPy/measurements.txt

2 records written to file /Users/ltauxe/PmagPy/criteria.txt

91 records written to file /Users/ltauxe/PmagPy/ages.txt

True

To go the other way (upload to a Private Contribution in the MagIC database), we must first create a Private Contribution, then upload to it.

To create a Private Contribution, we use the API commands requests.post() and requests.put(). You will need an account on Earthref, which requires an ORCID iD. Once you have an account, you can fill in your username and password below.

First, let’s download a file that, in this example, we can simply re-upload.

# repeat this step from the previous example:

reference_doi='10.1029/2019GC008479'

ipmag.download_magic_from_doi(reference_doi)

16961/magic_contribution_16961.txt extracted to magic_contribution.txt

(True, '')

Now we can create the Private Contribution in MagIC. Run each of the next two cells and enter your own MagIC username and password.

username=input()

ltauxe

password=getpass.getpass()

········

To create the contribution, run the following code:

response=ipmag.create_private_contribution( username=username,password=password)

if response['status_code']:

    contribution_id = response['id']

    print('Created private contribution with ID', contribution_id, '\n')

else:

    print('Create Private Contribution Error:', response['errors'])

Created private contribution with ID 19241

Now upload what we just downloaded (or your own upload file):

response=ipmag.upload_to_private_contribution(contribution_id,magic_contribution,

                                              username=username,password=password)

if response['status_code']:

    print('Uploaded a text file to private contribution with ID', contribution_id, '\n')

else:

    print('Upload Private Contribution Error:', response['errors'])

Uploaded a text file to private contribution with ID 19241

To validate the file we just uploaded:

response=ipmag.validate_private_contribution(contribution_id,

username=username,password=password)

print(response)

Validated contribution with ID 19241 :

[{'table': 'contribution', 'column': 'reference', 'message': 'The contribution table is missing required column "reference".', 'rows': [1]}, {'table': 'contribution', 'column': 'lab_names', 'message': 'The contribution table is missing required column "lab_names".', 'rows': [1]}]

{'status_code': True, 'errors': 'None', 'method': 'POST', 'contribution_id': 19241, 'url': 'https://api.earthref.org/v1/MagIC/private/validate?id=19241', 'validation_results': [{'table': 'contribution', 'column': 'reference', 'message': 'The contribution table is missing required column "reference".', 'rows': [1]}, {'table': 'contribution', 'column': 'lab_names', 'message': 'The contribution table is missing required column "lab_names".', 'rows': [1]}]}

Validation reports two errors: the contribution table is missing the required columns ‘reference’ and ‘lab_names’ (‘lab_names’ is a new requirement in the contribution.txt file). These could be fixed, and the file re-uploaded and re-validated, if desired.
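
For a longer list of validation messages, it can help to group the reported columns by table. The sketch below does this for a list with the same structure as the validation_results entry printed above; the list is copied from that output so the example runs on its own, and the name columns_by_table is just an illustrative choice.

```python
from collections import defaultdict

# copied from the 'validation_results' entry in the response printed above
validation_results = [
    {"table": "contribution", "column": "reference",
     "message": 'The contribution table is missing required column "reference".',
     "rows": [1]},
    {"table": "contribution", "column": "lab_names",
     "message": 'The contribution table is missing required column "lab_names".',
     "rows": [1]},
]

# collect the flagged columns under the table they belong to
columns_by_table = defaultdict(list)
for item in validation_results:
    columns_by_table[item["table"]].append(item["column"])

for table, columns in columns_by_table.items():
    print(table, "->", ", ".join(columns))
```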

And clean up! Delete the private contribution from Earthref, then remove the downloaded files from this directory.

# delete a private contribution

response=ipmag.delete_private_contribution(contribution_id,username=username,password=password)

print (response)

{'status_code': True, 'errors': 'None', 'method': 'DELETE', 'url': 'https://api.earthref.org/v1/MagIC/private?id=19241', 'id': 19241}

# delete the downloaded files!

remove_these=['contribution.txt','locations.txt','sites.txt','samples.txt',

              'specimens.txt','measurements.txt','criteria.txt','ages.txt',

              'magic_contribution.txt','magic_contribution','magic_contribution.txt']

for file in remove_these:

    try:

        os.remove(file)

    except OSError: # e.g., the file does not exist

        print (file, 'no such file')

contribution.txt no such file

locations.txt no such file

sites.txt no such file

samples.txt no such file

specimens.txt no such file

measurements.txt no such file

criteria.txt no such file

ages.txt no such file

magic_contribution.txt no such file

magic_contribution no such file

magic_contribution.txt no such file

Conversion Scripts#

convert_2_magic#

We imported this module as convert. It provides many functions for creating MagIC format files from non-MagIC formats. The MagIC formatted files can then be used with PmagPy programs and uploaded to the MagIC database. Let’s take a look at the options:

_2g_asc_magic#

To convert the ASCII files exported from 2G Enterprises measurement files, we use the convert._2g_asc() function in the convert_2_magic module (imported as convert).

help(convert._2g_asc)

Help on function _2g_asc in module pmagpy.convert_2_magic:

_2g_asc(dir_path='.', mag_file='', meas_file='measurements.txt', spec_file='specimens.txt', samp_file='samples.txt', site_file='sites.txt', loc_file='locations.txt', or_con='3', specnum=0, samp_con='2', gmeths='FS-FD:SO-POM', location='Not Specified', inst='', user='', noave=False, input_dir='', savelast=False, experiment='Demag')

Convert 2G ascii format file to MagIC file(s)

Parameters

----------

dir_path : str

output directory, default "."

mag_file : str

input file name

meas_file : str

output measurement file name, default "measurements.txt"

spec_file : str

output specimen file name, default "specimens.txt"

samp_file: str

output sample file name, default "samples.txt"

site_file : str

output site file name, default "sites.txt"

loc_file : str

output location file name, default "locations.txt"

or_con : number

orientation convention, default '3', see info below

specnum : int

number of characters to designate a specimen, default 0

samp_con : str

(specimen/)sample/site naming convention, default '2', see info below

gmeths : str

sampling method codes, default "FS-FD:SO-POM", see info below

location : str

location name, default "unknown"

inst : str

instrument, default ""

user : str

user name, default ""

noave : bool

do not average duplicate measurements, default False (so by default, DO average)

savelast : bool

take the last measurement if replicates at treatment step, default is False

input_dir : str

input file directory IF different from dir_path, default ""

experiment : str

experiment type, see info below; default is Demag

cooling_times_list : list

cooling times in [K/minutes] seperated by comma,

ordered at the same order as XXX.10,XXX.20 ...XX.70

Returns

---------

Tuple : (True or False indicating if conversion was successful, meas_file name written)

Info

----------

Orientation convention:

[1] Lab arrow azimuth= mag_azimuth; Lab arrow dip=-field_dip

i.e., field_dip is degrees from vertical down - the hade [default]

[2] Lab arrow azimuth = mag_azimuth-90; Lab arrow dip = -field_dip

i.e., mag_azimuth is strike and field_dip is hade

[3] Lab arrow azimuth = mag_azimuth; Lab arrow dip = 90-field_dip

i.e., lab arrow same as field arrow, but field_dip was a hade.

[4] lab azimuth and dip are same as mag_azimuth, field_dip

[5] lab azimuth is same as mag_azimuth,lab arrow dip=field_dip-90

[6] Lab arrow azimuth = mag_azimuth-90; Lab arrow dip = 90-field_dip

[7] all others you will have to either customize your

self or e-mail ltauxe@ucsd.edu for help.

Sample naming convention:

[1] XXXXY: where XXXX is an arbitrary length site designation and Y

is the single character sample designation. e.g., TG001a is the

first sample from site TG001. [default]

[2] XXXX-YY: YY sample from site XXXX (XXX, YY of arbitrary length)

[3] XXXX.YY: YY sample from site XXXX (XXX, YY of arbitrary length)

[4-Z] XXXX[YYY]: YYY is sample designation with Z characters from site XXX

[5] site name = sample name

[6] site name entered in site_name column in the orient.txt format input file -- NOT CURRENTLY SUPPORTED

[7-Z] [XXX]YYY: XXX is site designation with Z characters from samples XXXYYY

[8] siteName_sample_specimen: the three are differentiated with '_'

Sampling method codes:

FS-FD field sampling done with a drill

FS-H field sampling done with hand samples

FS-LOC-GPS field location done with GPS

FS-LOC-MAP field location done with map

SO-POM a Pomeroy orientation device was used

SO-ASC an ASC orientation device was used

SO-MAG orientation with magnetic compass

SO-SUN orientation with sun compass

Experiment type:

Demag:

AF and/or Thermal

NOTE: no other experiment types supported yet - please post request on github.org/PmagPy/PmagPy
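
To make the "Sample naming convention" list in the help text concrete, here is a minimal sketch that derives a site name from a sample name for conventions 1, 2, 3, 4-Z, 5 and 7-Z. The function site_from_sample is hypothetical and written only for this illustration; it is not the parser PmagPy uses, it assumes Z is a positive integer, and all sample names except TG001a are invented.

```python
def site_from_sample(sample, samp_con):
    """Derive a site name from a sample name, following the conventions listed above."""
    con, _, z = samp_con.partition("-")  # '4-3' -> con '4', z '3'
    if con == "1":   # XXXXY: the last character designates the sample
        return sample[:-1]
    if con == "2":   # XXXX-YY: the site is the part before the '-'
        return sample.split("-")[0]
    if con == "3":   # XXXX.YY: the site is the part before the '.'
        return sample.split(".")[0]
    if con == "4":   # XXXX[YYY]: the last Z characters designate the sample
        return sample[:-int(z)]
    if con == "5":   # site name = sample name
        return sample
    if con == "7":   # [XXX]YYY: the first Z characters designate the site
        return sample[:int(z)]
    raise ValueError("convention not covered by this sketch: " + samp_con)


print(site_from_sample("TG001a", "1"))      # example from the help text
print(site_from_sample("TG001-12", "2"))
print(site_from_sample("TG001abc", "4-3"))
```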

# set input directory

input_dir='data_files/convert_2_magic/2g_asc_magic/_2g_asc'

dir_path='data_files/convert_2_magic/2g_asc_magic' # output directory

files=os.listdir(input_dir) # get a list of files

# make containers for output files

measfiles,specfiles,sampfiles,sitefiles,locfiles=[],[],[],[],[]

for file in sorted(files):

    try:

        os.remove(os.path.join(dir_path,file+'_samples.txt')) # remove existing output file, if any

    except OSError:

        pass

    if '.DS_Store' not in file and 'README' not in file:

        print ('working on ',file)

        # set output file names

        meas_file,spec_file,samp_file,site_file=file+'.magic',file+'_specimens.txt',file+'_samples.txt',file+'_sites.txt'

        loc_file=file+'_locations.txt'

        # convert the ascii file to magic format

        convert._2g_asc(mag_file=file,dir_path=dir_path,input_dir=input_dir,meas_file=meas_file,spec_file=spec_file,

                        samp_file=samp_file,site_file=site_file,loc_file=loc_file,samp_con='1',or_con='5')

        measfiles.append(meas_file) # add output file to list of output files

        specfiles.append(spec_file)

        sampfiles.append(samp_file)

        sitefiles.append(site_file)

        locfiles.append(loc_file)

ipmag.combine_magic(measfiles,dir_path=dir_path) # combine the output files together

ipmag.combine_magic(specfiles,dir_path=dir_path,outfile='specimens.txt')

ipmag.combine_magic(sampfiles,dir_path=dir_path,outfile='samples.txt')

ipmag.combine_magic(sitefiles,dir_path=dir_path,outfile='sites.txt')

ipmag.combine_magic(locfiles,dir_path=dir_path,outfile='locations.txt')

working on DR3B.asc

importing DR3B

21 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc.magic

1 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc_specimens.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc_samples.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc_sites.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc_locations.txt

working on OK3_15af.asc

importing OK3_15af

12 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc.magic

1 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc_specimens.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc_samples.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc_sites.txt

1 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc_locations.txt

['/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc.magic', '/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc.magic']

-I- writing measurements records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/measurements.txt

-I- 33 records written to measurements file

['/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc_specimens.txt', '/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc_specimens.txt']

-I- writing specimens records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/specimens.txt

-I- 2 records written to specimens file

['/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc_samples.txt', '/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc_samples.txt']

-I- writing samples records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/samples.txt

-I- 2 records written to samples file

['/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc_sites.txt', '/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc_sites.txt']

-I- writing sites records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/sites.txt

-I- 2 records written to sites file

['/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/DR3B.asc_locations.txt', '/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/OK3_15af.asc_locations.txt']

-I- writing locations records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/locations.txt

-I- 2 records written to locations file

'/Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_asc_magic/locations.txt'

_2g_bin_magic#

To convert the binary formatted 2G Enterprises measurement files, we can use the function convert._2g_bin() in the convert_2_magic module (imported as convert).

help(convert._2g_bin)

Help on function _2g_bin in module pmagpy.convert_2_magic:

_2g_bin(dir_path='.', mag_file='', meas_file='measurements.txt', spec_file='specimens.txt', samp_file='samples.txt', site_file='sites.txt', loc_file='locations.txt', or_con='3', specnum=0, samp_con='2', corr='1', gmeths='FS-FD:SO-POM', location='unknown', inst='', user='', noave=False, input_dir='', lat='', lon='')

Convert 2G binary format file to MagIC file(s)

Parameters

----------

dir_path : str

output directory, default "."

mag_file : str

input file name

meas_file : str

output measurement file name, default "measurements.txt"

spec_file : str

output specimen file name, default "specimens.txt"

samp_file: str

output sample file name, default "samples.txt"

site_file : str

output site file name, default "sites.txt"

loc_file : str

output location file name, default "locations.txt"

or_con : number

orientation convention, default '3', see info below

specnum : int

number of characters to designate a specimen, default 0

samp_con : str

sample/site naming convention, default '2', see info below

corr: str

default '1'

gmeths : str

sampling method codes, default "FS-FD:SO-POM", see info below

location : str

location name, default "unknown"

inst : str

instrument, default ""

user : str

user name, default ""

noave : bool

do not average duplicate measurements, default False (so by default, DO average)

input_dir : str

input file directory IF different from dir_path, default ""

lat : float

latitude, default ""

lon : float

longitude, default ""

Returns

---------

Tuple : (True or False indicating if conversion was sucessful, meas_file name written)

Info

----------

Orientation convention:

[1] Lab arrow azimuth= mag_azimuth; Lab arrow dip=-field_dip

i.e., field_dip is degrees from vertical down - the hade [default]

[2] Lab arrow azimuth = mag_azimuth-90; Lab arrow dip = -field_dip

i.e., mag_azimuth is strike and field_dip is hade

[3] Lab arrow azimuth = mag_azimuth; Lab arrow dip = 90-field_dip

i.e., lab arrow same as field arrow, but field_dip was a hade.

[4] lab azimuth and dip are same as mag_azimuth, field_dip

[5] lab azimuth is same as mag_azimuth,lab arrow dip=field_dip-90

[6] Lab arrow azimuth = mag_azimuth-90; Lab arrow dip = 90-field_dip

[7] all others you will have to either customize your

self or e-mail ltauxe@ucsd.edu for help.

Sample naming convention:

[1] XXXXY: where XXXX is an arbitrary length site designation and Y

is the single character sample designation. e.g., TG001a is the

first sample from site TG001. [default]

[2] XXXX-YY: YY sample from site XXXX (XXX, YY of arbitary length)

[3] XXXX.YY: YY sample from site XXXX (XXX, YY of arbitary length)

[4-Z] XXXX[YYY]: YYY is sample designation with Z characters from site XXX

[5] site name = sample name

[6] site name entered in site_name column in the orient.txt format input file -- NOT CURRENTLY SUPPORTED

[7-Z] [XXX]YYY: XXX is site designation with Z characters from samples XXXYYY

Sampling method codes:

FS-FD field sampling done with a drill

FS-H field sampling done with hand samples

FS-LOC-GPS field location done with GPS

FS-LOC-MAP field location done with map

SO-POM a Pomeroy orientation device was used

SO-ASC an ASC orientation device was used

SO-MAG orientation with magnetic compass

SO-SUN orientation with sun compass
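
The "Orientation convention" list in the help text amounts to six small formulas. The sketch below writes them out as a lookup table. The function lab_arrow is a hypothetical helper for this illustration, not part of PmagPy, and wrapping the azimuth into the range 0 to 360 is an assumption added here.

```python
def lab_arrow(or_con, mag_azimuth, field_dip):
    """Return (lab arrow azimuth, lab arrow dip) for orientation conventions '1' to '6'."""
    formulas = {
        "1": (mag_azimuth, -field_dip),
        "2": (mag_azimuth - 90, -field_dip),
        "3": (mag_azimuth, 90 - field_dip),
        "4": (mag_azimuth, field_dip),
        "5": (mag_azimuth, field_dip - 90),
        "6": (mag_azimuth - 90, 90 - field_dip),
    }
    if or_con not in formulas:
        raise ValueError("convention not covered by this sketch: " + str(or_con))
    azimuth, dip = formulas[or_con]
    # assumption: keep the azimuth between 0 and 360 degrees
    return azimuth % 360, dip


print(lab_arrow("3", 30, 20))
print(lab_arrow("2", 30, 20))
```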

# set the input directory

input_dir='data_files/convert_2_magic/2g_bin_magic/mn1/'

dir_path='data_files/convert_2_magic/2g_bin_magic'

mag_file='mn001-1a.dat'

convert._2g_bin(mag_file=mag_file,input_dir=input_dir,dir_path=dir_path)

importing mn001-1a

24 records written to file /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_bin_magic/measurements.txt

-I- overwriting /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_bin_magic/specimens.txt

-I- 1 records written to specimens file

-I- overwriting /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_bin_magic/samples.txt

-I- 1 records written to samples file

-I- overwriting /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_bin_magic/sites.txt

-I- 1 records written to sites file

-I- overwriting /Users/ltauxe/PmagPy/data_files/convert_2_magic/2g_bin_magic/locations.txt

-I- 1 records written to locations file

(True, 'measurements.txt')

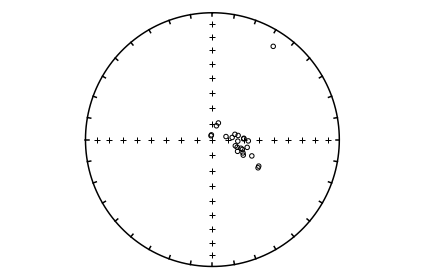

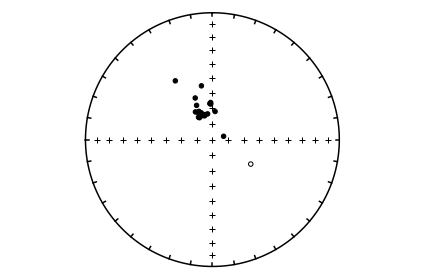

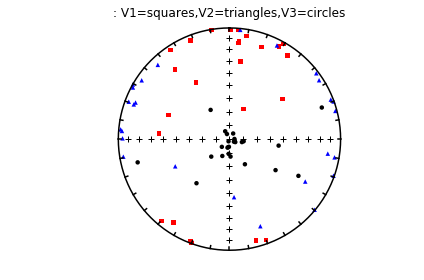

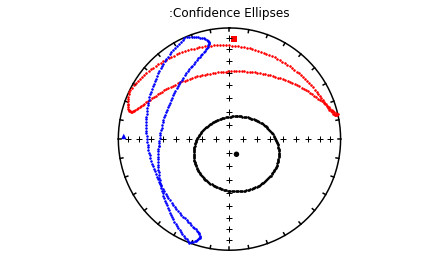

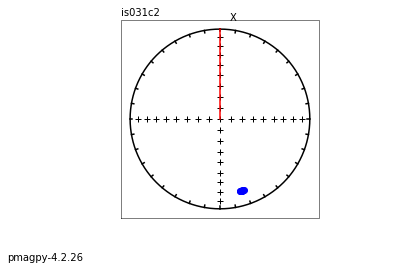

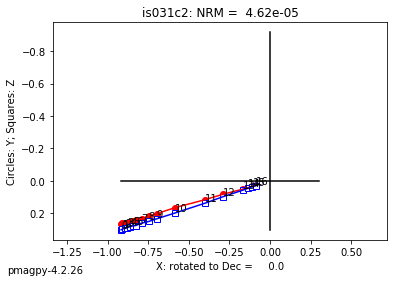

These are measurement data for a single specimen, so we can take a quick look at the data in an equal area projection.

help(ipmag.plot_di)

Help on function plot_di in module pmagpy.ipmag:

plot_di(dec=None, inc=None, di_block=None, color='k', marker='o', markersize=20, legend='no', label='', title='', edge='', alpha=1)

Plot declination, inclination data on an equal area plot.

Before this function is called a plot needs to be initialized with code that looks

something like:

>fignum = 1

>plt.figure(num=fignum,figsize=(10,10),dpi=160)

>ipmag.plot_net(fignum)

Required Parameters

-----------

dec : declination being plotted

inc : inclination being plotted

or

di_block: a nested list of [dec,inc,1.0]

(di_block can be provided instead of dec, inc in which case it will be used)

Optional Parameters (defaults are used if not specified)

-----------

color : the default color is black. Other colors can be chosen (e.g. 'r')

marker : the default marker is a circle ('o')

markersize : default size is 20

label : the default label is blank ('')

legend : the default is no legend ('no'). Putting 'yes' will plot a legend.

edge : marker edge color - if blank, is color of marker

alpha : opacity

meas_df=pd.read_csv(dir_path+'/measurements.txt',sep='\t',header=1)

ipmag.plot_net(1)

ipmag.plot_di(dec=meas_df['dir_dec'],inc=meas_df['dir_inc'])
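
The read_csv call above passes header=1 because the first line of a MagIC file holds the delimiter and table type, and the column names are on the second line. The sketch below shows this on a tiny in-memory table so that it runs without any data files; the specimen name and values are made up.

```python
import io
import pandas as pd

# a tiny MagIC-style table: line 1 = delimiter and table type, line 2 = column names
magic_text = (
    "tab\tmeasurements\n"
    "specimen\tdir_dec\tdir_inc\n"
    "spc01\t10.5\t45.0\n"
    "spc01\t12.0\t47.5\n"
)

# the table type is the second field of the first line
table_type = magic_text.splitlines()[0].split("\t")[1]

# header=1 tells pandas that the column names are on the second line
df = pd.read_csv(io.StringIO(magic_text), sep="\t", header=1)

print(table_type)
print(df)
```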

agm_magic#

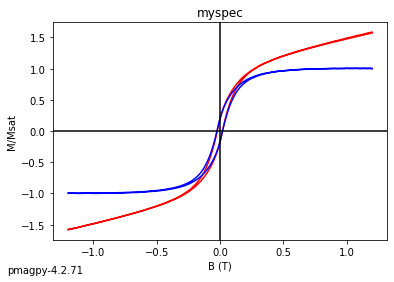

This program converts Micromag hysteresis files into MagIC formatted files. Because this program creates files for uploading to the MagIC database, specimens should also have sample/site/location information, which can be provided on the command line. If this information is not available, for example for a synthetic specimen, specify syn=True to use a synthetic name.

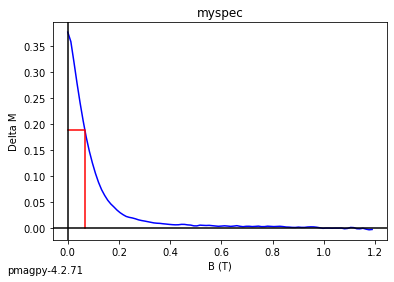

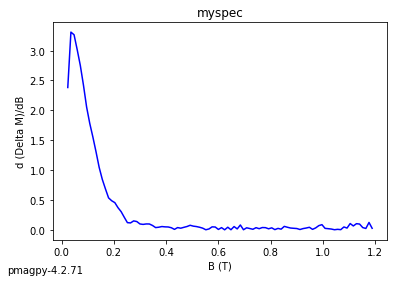

Someone named Lima Tango has measured a synthetic specimen named myspec for hysteresis and saved the data in a file named agm_magic_example.agm in the agm_magic/agm_directory folder. The backfield IRM curve for the same specimen was saved in the same directory as agm_magic_example.irm. Use the function convert.agm() to convert the data into a measurements.txt output file. For the backfield IRM file, set the keyword argument bak to True. These were measured using cgs units, so be sure to set the units keyword argument accordingly. Combine the two output files using the instructions for combine_magic. The agm files can be plotted using hysteresis_magic, but the back-field plots are currently broken.

help(convert.agm)

Help on function agm in module pmagpy.convert_2_magic:

agm(agm_file, dir_path='.', input_dir_path='', meas_outfile='', spec_outfile='', samp_outfile='', site_outfile='', loc_outfile='', spec_infile='', samp_infile='', site_infile='', specimen='', specnum=0, samp_con='1', location='unknown', instrument='', institution='', bak=False, syn=False, syntype='', units='cgs', fmt='new', user='')

Convert AGM format file to MagIC file(s)

Parameters

----------

agm_file : str

input file name

dir_path : str

working directory, default "."

input_dir_path : str

input file directory IF different from dir_path, default ""

meas_outfile : str

output measurement file name, default ""

(default output is SPECNAME.magic)

spec_outfile : str

output specimen file name, default ""

(default output is SPEC_specimens.txt)

samp_outfile: str

output sample file name, default ""

(default output is SPEC_samples.txt)

site_outfile : str

output site file name, default ""

(default output is SPEC_sites.txt)

loc_outfile : str

output location file name, default ""

(default output is SPEC_locations.txt)

samp_infile : str

existing sample infile (not required), default ""

site_infile : str

existing site infile (not required), default ""

specimen : str

specimen name, default ""

(default is to take base of input file name, e.g. SPEC.agm)

specnum : int

number of characters to designate a specimen, default 0

samp_con : str

sample/site naming convention, default '1', see info below

location : str

location name, default "unknown"

instrument : str

instrument name, default ""

institution : str

institution name, default ""

bak : bool

IRM backfield curve, default False

syn : bool

synthetic, default False

syntype : str

synthetic type, default ""

units : str

units, default "cgs"

fmt: str

input format, options: ('new', 'old', 'xy', default 'new')

user : user name

Returns

---------

Tuple : (True or False indicating if conversion was sucessful, meas_file name written)

Info

--------

Sample naming convention:

[1] XXXXY: where XXXX is an arbitrary length site designation and Y

is the single character sample designation. e.g., TG001a is the

first sample from site TG001. [default]

[2] XXXX-YY: YY sample from site XXXX (XXX, YY of arbitary length)

[3] XXXX.YY: YY sample from site XXXX (XXX, YY of arbitary length)

[4-Z] XXXX[YYY]: YYY is sample designation with Z characters from site XXX

[5] site name = sample name

[6] site name entered in site_name column in the orient.txt format input file -- NOT CURRENTLY SUPPORTED

[7-Z] [XXX]YYY: XXX is site designation with Z characters from samples XXXYYY
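The naming conventions listed above can be illustrated with a small stand-alone function. This is only a sketch of the rules as they are described in the help text, using a hypothetical helper called site_from_sample; it is not the routine PmagPy uses internally, and convention 6 is omitted because it is marked as unsupported.

```python
def site_from_sample(sample, samp_con="1"):
    """Hypothetical helper: derive a site name from a sample name."""
    if samp_con == "1":
        # XXXXY: the last character is the sample designation
        return sample[:-1]
    if samp_con == "2":
        # XXXX-YY: the site is everything before the dash
        return sample.split("-")[0]
    if samp_con == "3":
        # XXXX.YY: the site is everything before the period
        return sample.split(".")[0]
    if samp_con == "5":
        # site name is the same as the sample name
        return sample
    if samp_con.startswith("4-") or samp_con.startswith("7-"):
        z = int(samp_con.split("-")[1])
        if z <= 0:
            raise ValueError("Z must be a positive integer")
        if samp_con.startswith("4-"):
            # XXXX[YYY]: the last Z characters are the sample designation
            return sample[:-z]
        # [XXX]YYY: the first Z characters are the site designation
        return sample[:z]
    raise ValueError("unsupported naming convention: " + samp_con)


print(site_from_sample("TG001a"))          # convention 1
print(site_from_sample("TG001-02", "2"))   # convention 2
print(site_from_sample("TG00102", "4-2"))  # convention 4-Z with Z=2
```

Each of the three calls yields the site name TG001.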

convert.agm('agm_magic_example.agm',dir_path='data_files/convert_2_magic/agm_magic/',

specimen='myspec',fmt='old',meas_outfile='agm.magic')

-I- writing specimens records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/myspec_specimens.txt

-I- 1 records written to specimens file

-I- writing samples records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/samples.txt

-I- 1 records written to samples file

-I- writing sites records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/sites.txt

-I- 1 records written to sites file

-I- writing locations records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/myspec_locations.txt

-I- 1 records written to locations file

-I- writing measurements records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/agm.magic

-I- 284 records written to measurements file

(True, 'agm.magic')

convert.agm('agm_magic_example.irm',dir_path='data_files/convert_2_magic/agm_magic/',

specimen='myspec',fmt='old',meas_outfile='irm.magic',bak=True)

-I- overwriting /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/myspec_specimens.txt

-I- 1 records written to specimens file

-I- overwriting /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/samples.txt

-I- 1 records written to samples file

-I- overwriting /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/sites.txt

-I- 1 records written to sites file

-I- overwriting /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/myspec_locations.txt

-I- 1 records written to locations file

-I- writing measurements records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/agm_magic/irm.magic

-I- 41 records written to measurements file

(True, 'irm.magic')

infiles=['data_files/convert_2_magic/agm_magic/agm.magic','data_files/convert_2_magic/agm_magic/irm.magic']

ipmag.combine_magic(infiles,'data_files/convert_2_magic/agm_magic/measurements.txt')

-I- writing measurements records to /Users/nebula/Python/PmagPy/data_files/convert_2_magic/agm_magic/measurements.txt

-I- 325 records written to measurements file

'/Users/nebula/Python/PmagPy/data_files/convert_2_magic/agm_magic/measurements.txt'
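Conceptually, combining two MagIC files of the same table type means stacking their records under the union of their column headers; here the 284 hysteresis records and 41 backfield records give the 325 records reported above. The following is a minimal standard-library sketch of that idea and not the implementation of ipmag.combine_magic. The function names and the toy rows are invented for illustration.

```python
import csv
import io


def read_magic(text):
    """Parse MagIC-formatted text into (table_type, list of row dicts)."""
    lines = text.splitlines()
    # first line: delimiter name and table type; second line: column headers
    table_type = lines[0].split("\t")[1].strip()
    rows = list(csv.DictReader(lines[1:], delimiter="\t"))
    return table_type, rows


def combine_magic_text(texts):
    """Stack records from several MagIC texts of the same table type."""
    table_types, columns, records = set(), [], []
    for text in texts:
        table_type, rows = read_magic(text)
        table_types.add(table_type)
        for row in rows:
            for key in row:
                if key not in columns:
                    columns.append(key)
            records.append(row)
    if len(table_types) != 1:
        raise ValueError("all inputs must hold the same table type")
    out = io.StringIO()
    out.write("tab\t" + table_types.pop() + "\n")
    writer = csv.DictWriter(out, fieldnames=columns, delimiter="\t",
                            restval="", lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return out.getvalue()


# Toy inputs with invented values; real files hold many more columns.
hyst = ("tab\tmeasurements\n"
        "specimen\tmethod_codes\tmeas_field_dc\n"
        "myspec\tLP-HYS\t0.5\n"
        "myspec\tLP-HYS\t0.4\n")
irm = ("tab\tmeasurements\n"
       "specimen\tmethod_codes\ttreat_dc_field\n"
       "myspec\tLP-IRM-DCD\t-0.1\n")

combined = combine_magic_text([hyst, irm])
table_type, rows = read_magic(combined)
print(table_type, len(rows))
```

Columns that are missing from one input are left blank in the combined table, so no record loses information.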

We can look at these data using hysteresis_magic:

# read in the measurements data

meas_data=pd.read_csv('data_files/convert_2_magic/agm_magic/agm.magic',sep='\t',header=1)

# pick out the hysteresis data using the method code for hysteresis lab protocol

hyst_data=meas_data[meas_data.method_codes.str.contains('LP-HYS')]

# make a list of specimens

specimens=hyst_data.specimen.unique()

cnt=1

for specimen in specimens:

    # make the dictionary of figure numbers that pmagplotlib expects

    HDD={'hyst':cnt,'deltaM':cnt+1,'DdeltaM':cnt+2}

    spec_data=hyst_data[hyst_data.specimen==specimen]

    # make a list of the field data

    B=spec_data.meas_field_dc.tolist()

    # make a list of the magnetization data

    M=spec_data.magn_moment.tolist()

    # call the plotting function

    hpars=pmagplotlib.plot_hdd(HDD,B,M,specimen)

    hpars['specimen']=specimen

    # print out the hysteresis parameters

    print(specimen,': \n',hpars)

    cnt+=3

myspec :

{'hysteresis_xhf': '1.77e-05', 'hysteresis_ms_moment': '2.914e+01', 'hysteresis_mr_moment': '5.493e+00', 'hysteresis_bc': '2.195e-02', 'hysteresis_bcr': '6.702e-02', 'magic_method_codes': 'LP-BCR-HDM', 'specimen': 'myspec'}
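The values in the returned dictionary are strings, so they must be converted to floats before further use. As a short sketch, the ratios Mr/Ms and Bcr/Bc, which are commonly used to summarize hysteresis behavior, can be computed from the output printed above. The helper name hysteresis_ratios is hypothetical and the dictionary is copied from that output.

```python
# Subset of the hysteresis parameters printed above (values are strings).
hpars = {
    'hysteresis_ms_moment': '2.914e+01',
    'hysteresis_mr_moment': '5.493e+00',
    'hysteresis_bc': '2.195e-02',
    'hysteresis_bcr': '6.702e-02',
}


def hysteresis_ratios(hpars):
    """Hypothetical helper: return (Mr/Ms, Bcr/Bc) from a parameter dict."""
    ms = float(hpars['hysteresis_ms_moment'])
    mr = float(hpars['hysteresis_mr_moment'])
    bc = float(hpars['hysteresis_bc'])
    bcr = float(hpars['hysteresis_bcr'])
    return mr / ms, bcr / bc


squareness, coercivity_ratio = hysteresis_ratios(hpars)
print(f"Mr/Ms = {squareness:.3f}, Bcr/Bc = {coercivity_ratio:.3f}")
# prints: Mr/Ms = 0.189, Bcr/Bc = 3.053
```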

bgc_magic#

[MagIC Database] [command line version]

Here we convert the Berkeley Geochronology Center’s AutoCore format to MagIC using convert.bgc().

help(convert.bgc)

Help on function bgc in module pmagpy.convert_2_magic:

bgc(mag_file, dir_path='.', input_dir_path='', meas_file='measurements.txt', spec_file='specimens.txt', samp_file='samples.txt', site_file='sites.txt', loc_file='locations.txt', append=False, location='unknown', site='', samp_con='1', specnum=0, meth_code='LP-NO', volume=12, user='', timezone='US/Pacific', noave=False)

Convert BGC format file to MagIC file(s)

Parameters

----------

mag_file : str

input file name

dir_path : str

working directory, default "."

input_dir_path : str

input file directory IF different from dir_path, default ""

meas_file : str

output measurement file name, default "measurements.txt"

spec_file : str

output specimen file name, default "specimens.txt"

samp_file: str

output sample file name, default "samples.txt"

site_file : str

output site file name, default "sites.txt"

loc_file : str

output location file name, default "locations.txt"

append : bool

append output files to existing files instead of overwrite, default False

location : str

location name, default "unknown"

site : str

site name, default ""

samp_con : str

sample/site naming convention, default '1', see info below

specnum : int

number of characters to designate a specimen, default 0

meth_code : str

orientation method codes, default "LP-NO"

e.g. [SO-MAG, SO-SUN, SO-SIGHT, ...]

volume : float

volume in ccs, default 12.

user : str

user name, default ""

timezone : str

timezone in pytz library format, default "US/Pacific"

list of timezones can be found at http://pytz.sourceforge.net/

noave : bool

do not average duplicate measurements, default False (so by default, DO average)

Returns

---------

Tuple : (True or False indicating if conversion was sucessful, meas_file name written)

Info

--------

Sample naming convention:

[1] XXXXY: where XXXX is an arbitrary length site designation and Y

is the single character sample designation. e.g., TG001a is the

first sample from site TG001. [default]

[2] XXXX-YY: YY sample from site XXXX (XXX, YY of arbitary length)

[3] XXXX.YY: YY sample from site XXXX (XXX, YY of arbitary length)

[4-Z] XXXX[YYY]: YYY is sample designation with Z characters from site XXX

[5] site name same as sample

[6] site is entered under a separate column -- NOT CURRENTLY SUPPORTED

[7-Z] [XXXX]YYY: XXXX is site designation with Z characters with sample name XXXXYYYY

dir_path='data_files/convert_2_magic/bgc_magic/'

convert.bgc('15HHA1-2A',dir_path=dir_path)

mag_file in bgc_magic /Users/ltauxe/PmagPy/data_files/convert_2_magic/bgc_magic/15HHA1-2A

-I- writing specimens records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/bgc_magic/specimens.txt

-I- 1 records written to specimens file

-I- writing samples records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/bgc_magic/samples.txt

-I- 1 records written to samples file

-I- writing sites records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/bgc_magic/sites.txt

-I- 1 records written to sites file

-I- writing locations records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/bgc_magic/locations.txt

-I- 1 records written to locations file

-I- writing measurements records to /Users/ltauxe/PmagPy/data_files/convert_2_magic/bgc_magic/measurements.txt

-I- 24 records written to measurements file

(True,

'/Users/ltauxe/PmagPy/data_files/convert_2_magic/bgc_magic/measurements.txt')

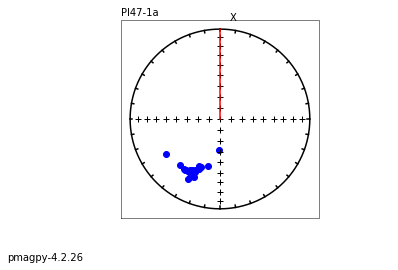

And let’s take a look at the converted measurements:

meas_df=pd.read_csv(dir_path+'measurements.txt',sep='\t',header=1)

ipmag.plot_net(1)